Episode 5: The Supply Chain of Values: How War, Energy, and Compute Shape AI Risk

By Merritt Baer, CSO, Enkrypt AI

Security leaders love to talk about “supply chain risk,” but we usually mean it in a clinically narrow sense: components, vendors, dependencies, firmware, upstream vulnerabilities.

With AI, the supply chain expands—dramatically.

Compute, our era’s critical resource, sits at the intersection of geopolitics, energy, water, labor, and physical manufacturing realities. And each of these carries its own embedded ideology and long-lasting consequences.

AI is built on global fault lines

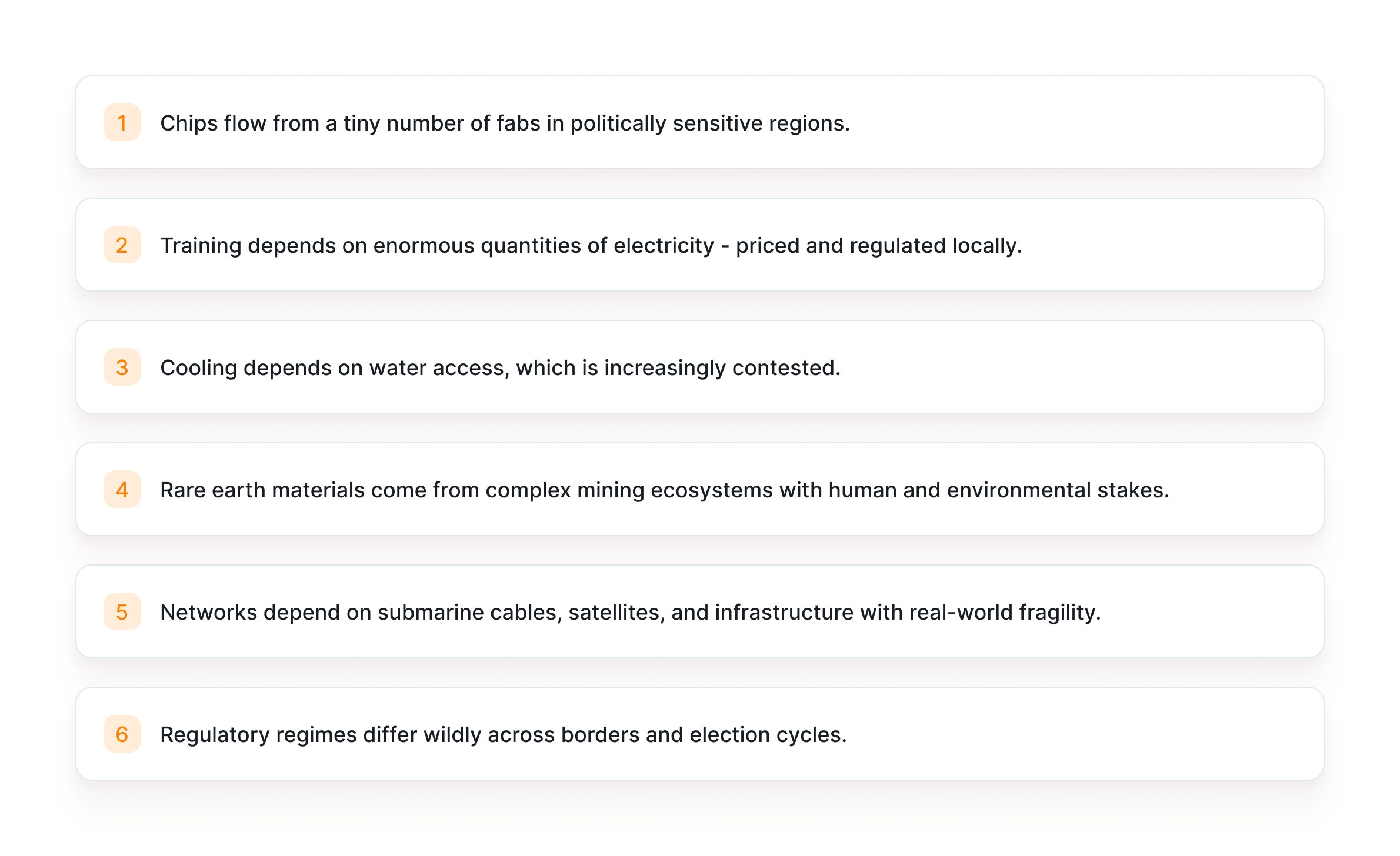

Let’s break down just a few dependencies:

None of this is abstract. But neither is it controllable through traditional supply chain risk management.

Why CISOs (quietly) think about war and energy markets

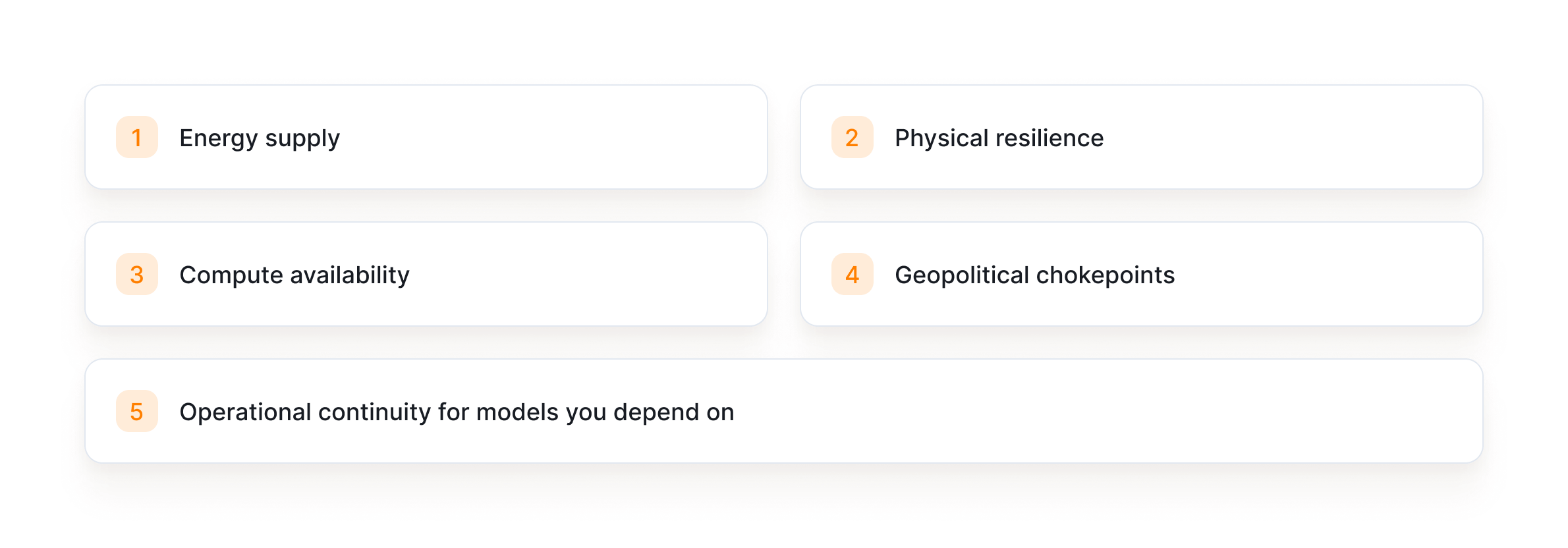

If you’re responsible for AI in production, you are—by definition—responsible for the systems that feed it:

If a conflict disrupts a major chip fab, it doesn’t matter how well you tuned your model—you won’t be able to retrain it.

If energy prices spike, your inference economics change overnight.

If water shortages hit your cloud region, how will you know which pieces of infrastructure will be down?
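To make the energy point concrete, here is a back-of-the-envelope sketch of how electricity price feeds into the cost of serving tokens. Everything in it is an assumption for illustration: the function name, the 10 kW server draw, the 2,500 tokens per second throughput, the 1.2 facility overhead factor (PUE), and both electricity prices are hypothetical, not measurements from any real deployment.

```python
def energy_cost_per_million_tokens(power_kw, tokens_per_second, price_per_kwh, pue=1.0):
    """Estimate the electricity cost of generating one million tokens.

    power_kw          -- average IT power draw of the serving hardware (hypothetical)
    tokens_per_second -- sustained throughput of that hardware (hypothetical)
    price_per_kwh     -- electricity price, in any currency unit
    pue               -- facility overhead multiplier (cooling, power delivery)
    """
    seconds = 1_000_000 / tokens_per_second          # time to serve 1M tokens
    kwh = power_kw * pue * seconds / 3600            # energy used, incl. overhead
    return kwh * price_per_kwh


if __name__ == "__main__":
    # Hypothetical scenario: same hardware, same model, price triples.
    baseline = energy_cost_per_million_tokens(10, 2500, 0.08, pue=1.2)
    spike = energy_cost_per_million_tokens(10, 2500, 0.24, pue=1.2)
    print(f"baseline: {baseline:.4f} per 1M tokens")
    print(f"spike:    {spike:.4f} per 1M tokens")
    print(f"ratio:    {spike / baseline:.1f}x")
```

The energy component scales linearly with price, so a tripling in the market shows up as a tripling of that line item with no change to the model or the code. Real inference costs also include hardware amortization, networking, and staffing, which this sketch deliberately leaves out.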

AI ethics is downstream of infrastructure

We spend a lot of time debating fairness metrics and hallucination rates.

Important, yes.

But fairness doesn’t exist without compute.

Safety doesn’t exist without infrastructure.

And none of this exists without geopolitics cooperating long enough for your model to train.

Values travel downstream through the supply chain.

If the upstream ecosystem is brittle, the downstream ethics will be too.

What this means for security teams

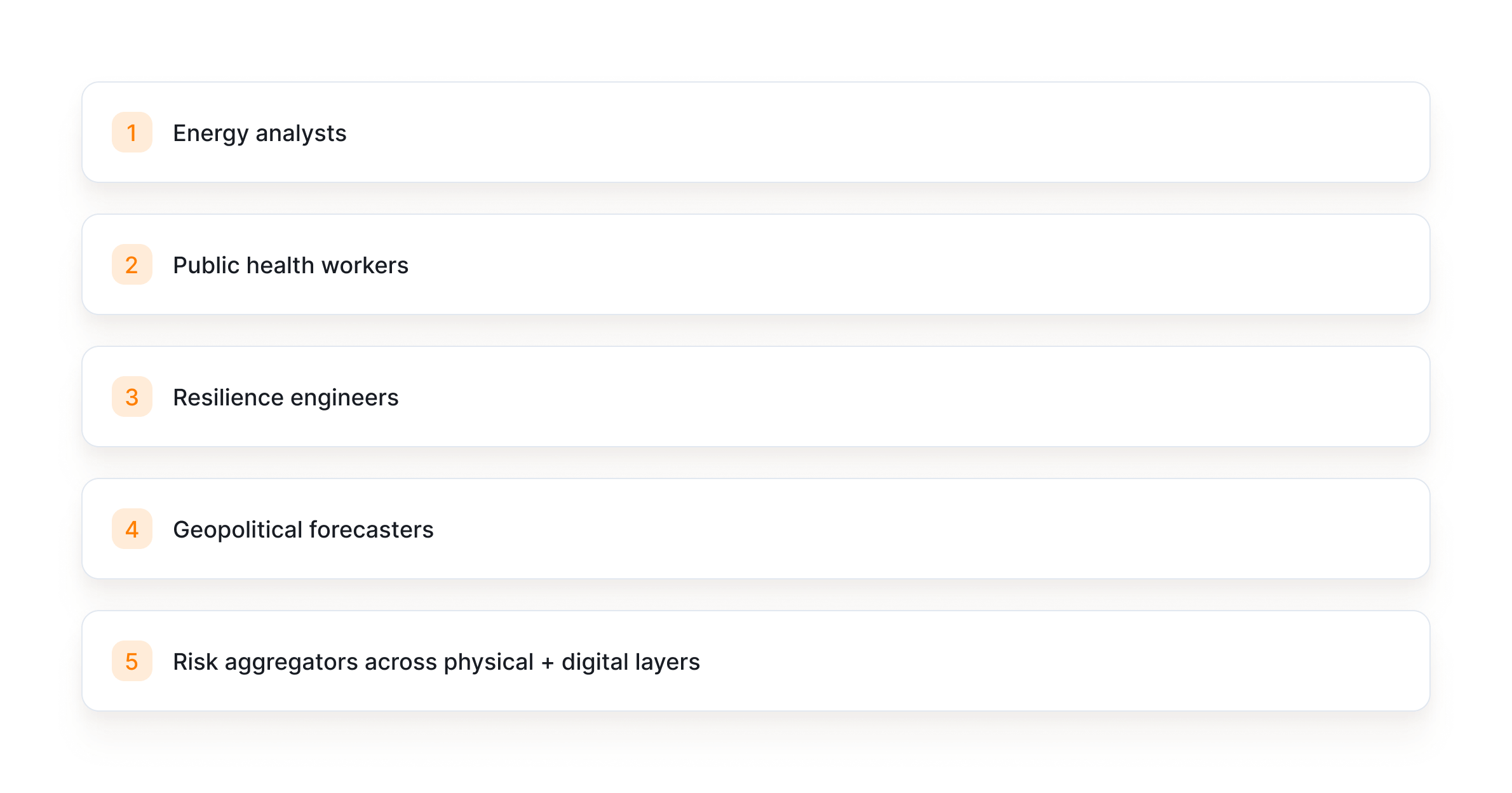

We’re entering a chapter where CISOs need to think like:

AI obligates us to see the whole picture, even the parts we have little control over in the near term.

Next in the series

Installment #6 will look at “empathy engineering”—how building humane AI systems means embedding human conscience at the architectural layer, because the models themselves won’t provide it.
