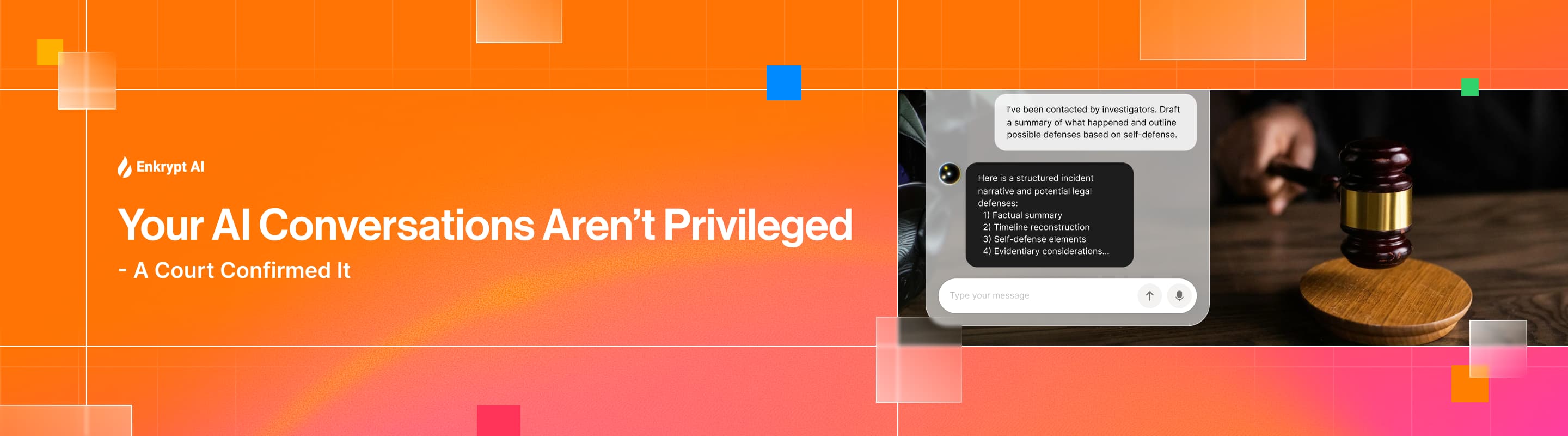

Your AI Conversations Aren’t Privileged: A Court Confirmed It

A recent federal court decision highlighted a risk many organizations have underestimated: conversations with public AI systems are not protected by attorney-client privilege.

In the case, a defendant created documents using an AI tool and later shared them with defense counsel, arguing that they should be protected under attorney-client privilege and the work product doctrine.

The court disagreed.


The reasoning was straightforward. An AI system is not an attorney. It has no law license, owes no duty of loyalty, and its terms explicitly disclaim any attorney-client relationship. Legally, sharing case details with an AI platform is equivalent to sharing them with any third party, and third-party disclosure defeats privilege.

Privilege Cannot Be Recreated Later

After the documents were generated, the defendant attempted to assert privilege by sending them to counsel. Courts have consistently rejected this argument.

Privilege attaches at the moment of communication. Documents created outside a privileged relationship cannot become privileged retroactively simply because they are later shared with an attorney.

AI did not change this doctrine. It made violations easier to create at scale.

The Hidden Risk: AI Privacy Policies

The court also relied on the platform’s privacy policy, which permitted disclosure of prompts and outputs to governmental authorities.

Attorney-client privilege requires a reasonable expectation of confidentiality. When a system reserves the right to access or disclose user content, that expectation no longer exists.

This is where many users and organizations misunderstand AI tools. The conversational interface feels private, but the legal reality depends on data handling, retention, and disclosure rights.

The Illusion of Confidentiality

Generative AI systems encourage users to think out loud. Employees summarize meetings, analyze contracts, and refine legal strategies inside chat interfaces that feel advisory.

From a legal perspective, they are transmitting sensitive information into a third-party processing environment that may


Every prompt can become discoverable material.

A Compounding Legal Problem

The court also noted that the user reportedly entered information received from attorneys into the AI system. That creates an additional risk: attorneys themselves may become fact witnesses if the generated material is introduced in litigation.

AI usage can therefore evolve from a privilege issue into an evidentiary and trial management issue.

What This Means for Enterprises

Organizations should assume employees will use AI to interpret legal, HR, compliance, and contractual issues. Prohibiting usage is ineffective without providing a secure alternative.

The risk is not AI reasoning. It is uncontrolled disclosure.

Companies now need governance controls that address


The Direction the Industry Is Moving

The future is not eliminating AI use. It is moving AI interactions into controlled environments where confidentiality, retention, and access align with legal privilege requirements.

When AI operates inside governed infrastructure under organizational control, the legal analysis changes. When it operates in consumer tools, disclosure risk persists.

Courts are beginning to clarify what technologists already understood.

AI is not a confidential advisor.

It is an external processor unless proven otherwise.
