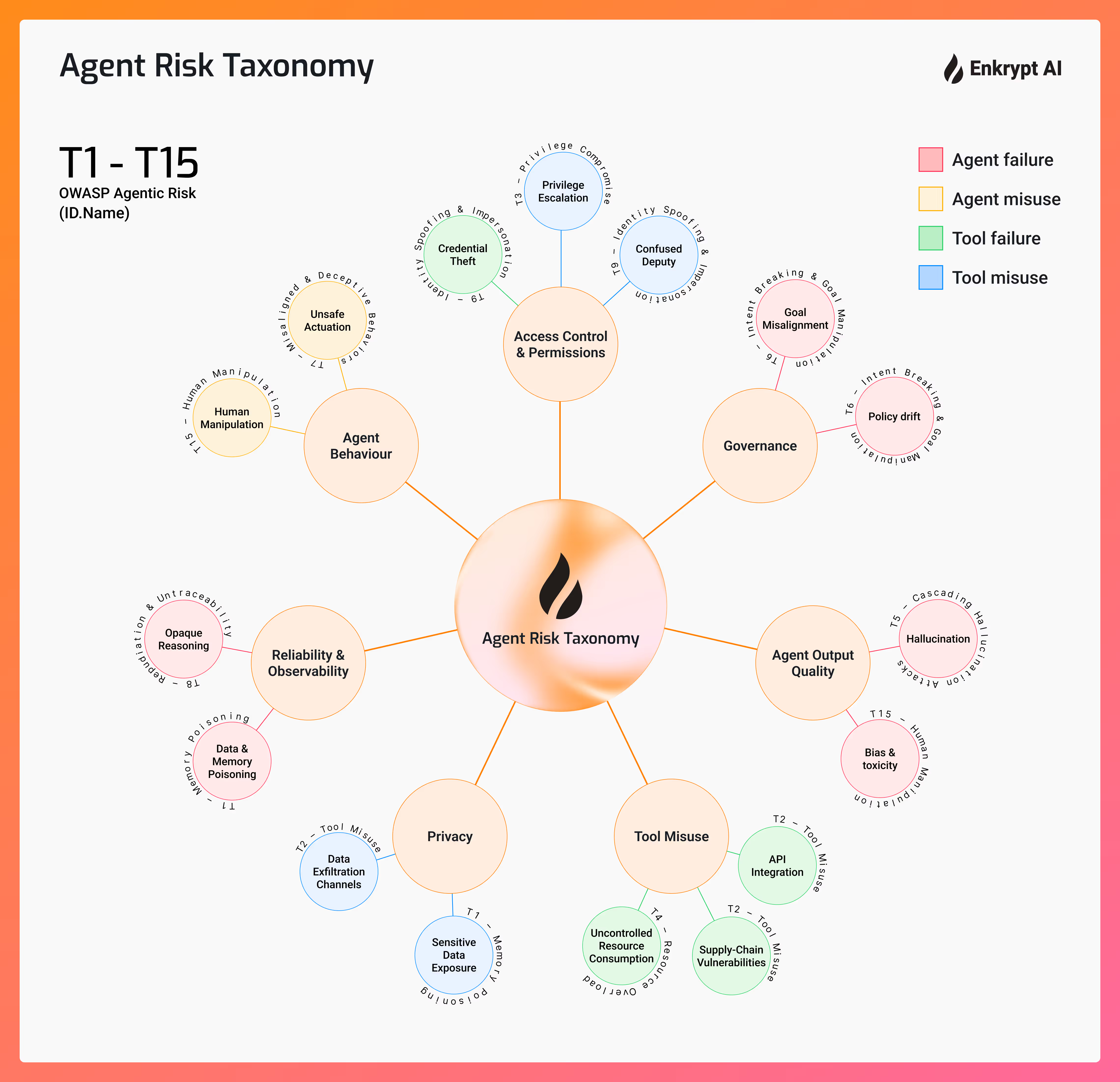

Agent Risk Taxonomy

Stop Agent Chaos. Secure AI Autonomy.

Core Risk Domains

Risk Categories

Framework Mappings

Specific Risk Scenarios

Built on Industry Standards

.avif)

OWASP Agentic AI

15/15 threat IDs covered (T1–T15)

MITRE ATLAS

Live tactics & techniques like AML.T0053 (Plugin Compromise)

EU AI Act

Direct references to Articles 9, 10, 14 + Annex III.

NIST AI RMF

Each risk slotted into Govern → Map → Measure → Manage

ISO 42001 / 24028

Governance & trustworthiness clauses cross-linked.

Agent Risk Taxonomy

Theft

& Permission

T1 - T15

Mappings with existing frameworks

We mapped the agent risks with existing frameworks like OWASP, NIST, EU AI Act etc.

Frequently Asked Questions

We focus specifically on autonomous agents that use tools—not traditional ML models. The risks are fundamentally different.

Any AI system that can invoke external APIs, make decisions autonomously, or interact with tools. Technology-agnostic.

Unlike traditional frameworks that focus on ML model security, our taxonomy specifically addresses the unique risks of agentic AI systems that can take autonomous actions and interact with external tools.

Yes, the taxonomy covers single-model agents, multi-agent systems, and any AI system that can invoke external tools or APIs autonomously. It's designed to be technology-agnostic.

Yes! We welcome input from security practitioners. Contact us about our contributor program.