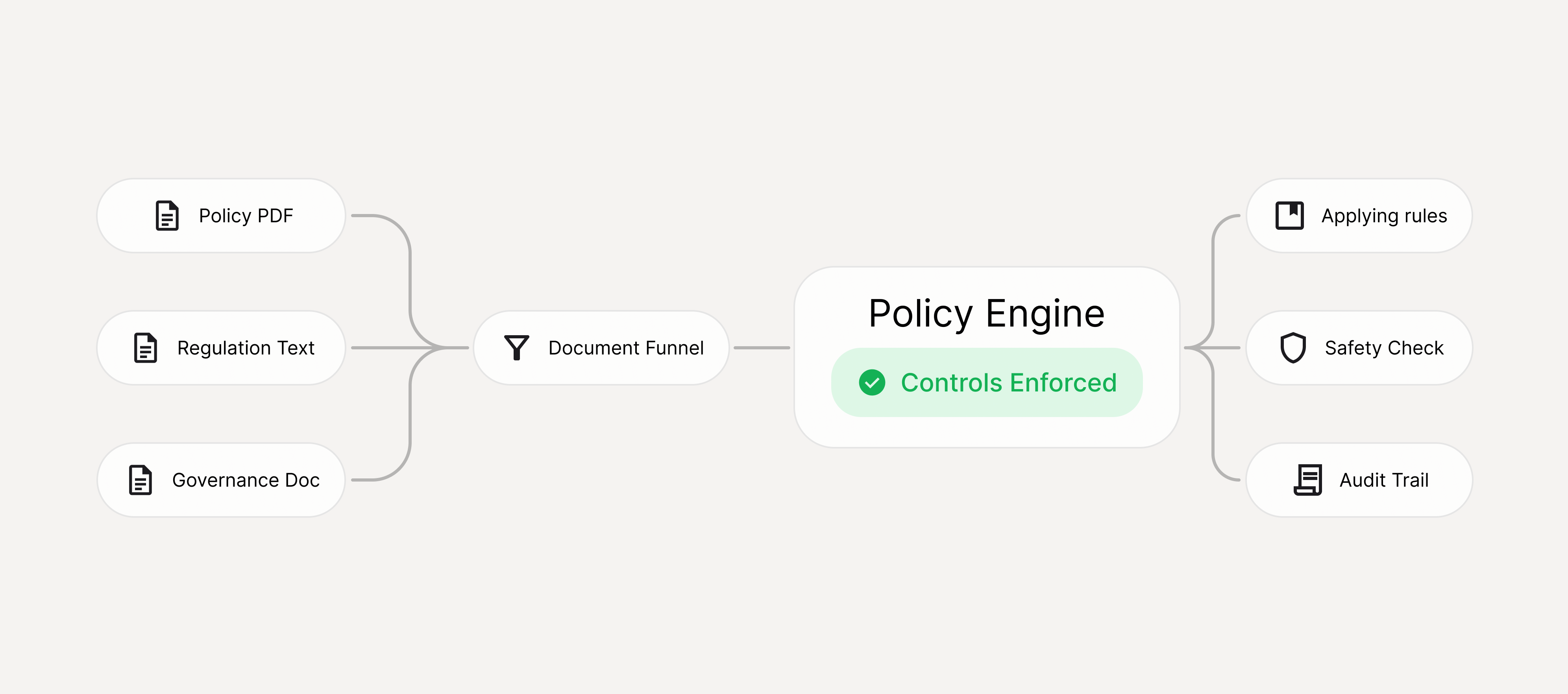

Turn AI governance and regulation into enforceable controls

Enkrypt AI Policy Engine converts natural-language AI governance policies and regulatory text (including PDFs) into controls, then enforces those controls across the platform with traceability you can audit.

What you get

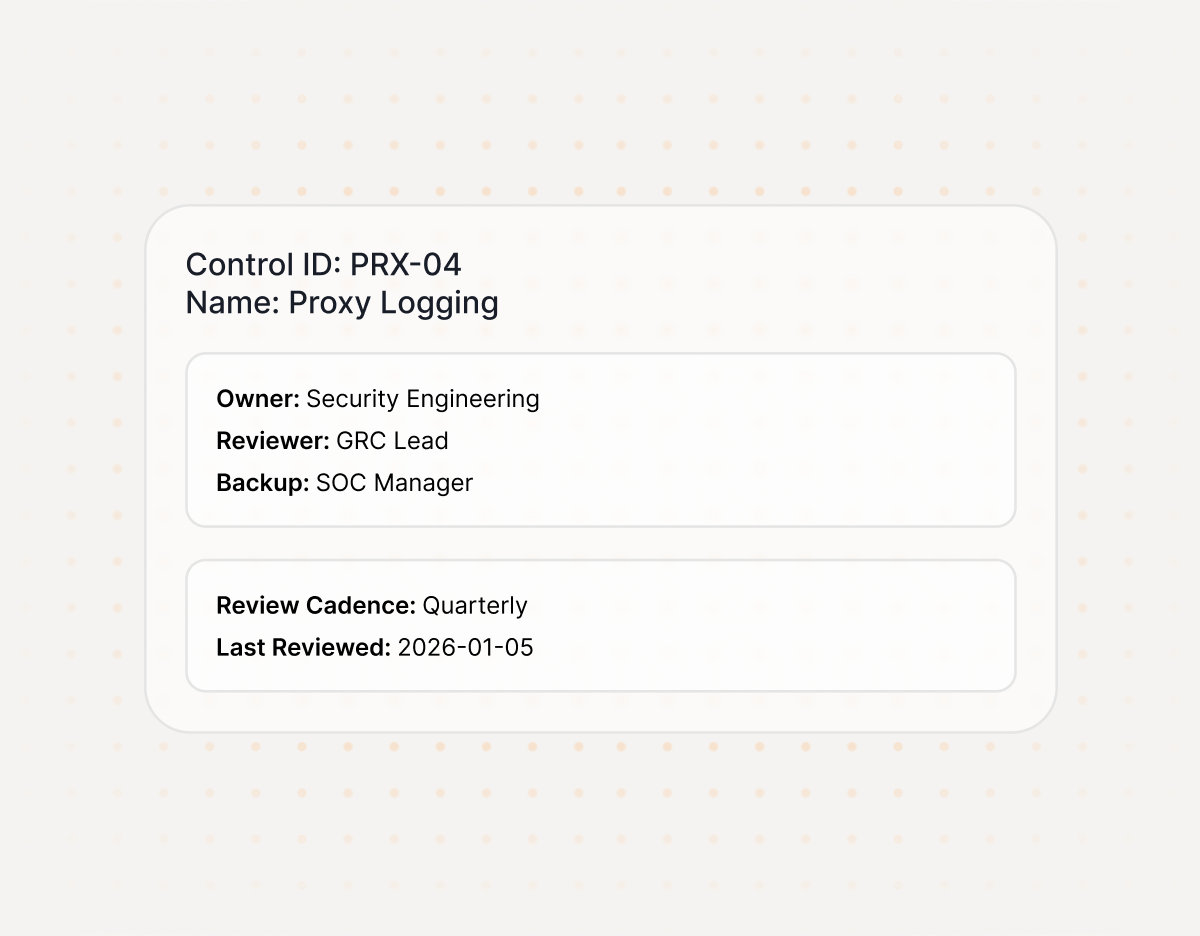

Control Catalog

Control IDs, descriptions, owners, scope, environment
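As a rough illustration, a catalog entry carrying the fields listed above could be modeled as a small record type. The `Control` class, its field names, and the `controls_for` helper are hypothetical and are not the Policy Engine's actual schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Control:
    """Hypothetical catalog entry; field names are illustrative only."""
    control_id: str
    description: str
    owner: str
    scope: str        # e.g. which app or agent the control applies to
    environment: str  # e.g. "dev", "stage", or "prod"


def controls_for(catalog: list[Control], environment: str) -> list[Control]:
    """Return the catalog entries that apply to one environment."""
    return [c for c in catalog if c.environment == environment]


catalog = [
    Control("CTL-001", "Block medical advice", "risk-team", "support-bot", "prod"),
    Control("CTL-002", "Redact PII in outputs", "privacy-team", "support-bot", "stage"),
]
print([c.control_id for c in controls_for(catalog, "prod")])  # ['CTL-001']
```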

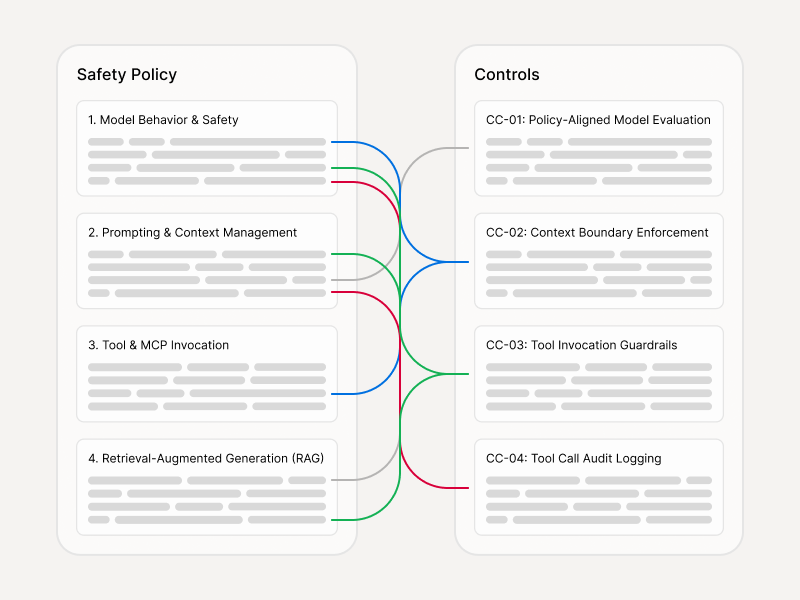

Control Matrix

Requirement/clause → Control → Enforcement point → Evidence
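A minimal sketch of one use for such a matrix, assuming each row is a simple record: flag any requirement whose chain from clause to evidence is incomplete. `MatrixRow`, `gaps`, and the sample clause labels are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class MatrixRow:
    """Hypothetical row: requirement/clause -> control -> enforcement point -> evidence."""
    requirement: str
    control_id: Optional[str]
    enforcement_point: Optional[str]
    evidence: Optional[str]


def gaps(matrix: list[MatrixRow]) -> list[str]:
    """List requirements missing a control, an enforcement point, or evidence."""
    return [
        row.requirement
        for row in matrix
        if not (row.control_id and row.enforcement_point and row.evidence)
    ]


matrix = [
    MatrixRow("Policy 2.1: no medical advice", "CTL-001", "guardrails:output", "log:run-42"),
    MatrixRow("Policy 3.4: protect user privacy", "CTL-002", "gateway:input", None),
]
print(gaps(matrix))  # ['Policy 3.4: protect user privacy']
```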

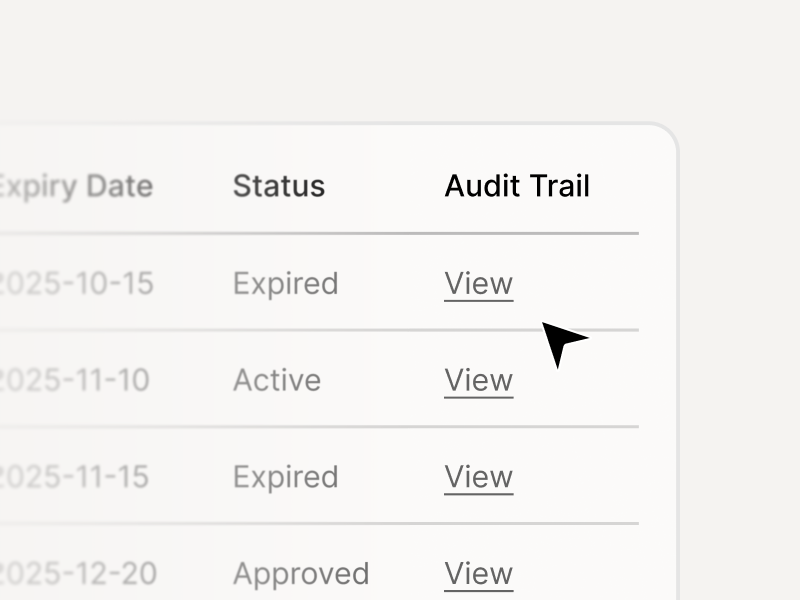

Version history

Approvals, change diffs, rollback, exceptions/waivers
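The diff and rollback ideas can be sketched with Python's standard `difflib`, under the assumption that each published version is stored as plain text. `PolicyHistory` is a hypothetical class; approvals and waivers are left out for brevity.

```python
import difflib


class PolicyHistory:
    """Hypothetical append-only history: every publish or rollback adds a version."""

    def __init__(self, initial_text: str):
        self.versions = [initial_text]  # version N is stored at index N - 1

    def publish(self, new_text: str) -> int:
        """Store a new version and return its 1-based version number."""
        self.versions.append(new_text)
        return len(self.versions)

    def diff(self, old: int, new: int) -> str:
        """Return a unified diff between two versions for reviewers."""
        a = self.versions[old - 1].splitlines(keepends=True)
        b = self.versions[new - 1].splitlines(keepends=True)
        return "".join(difflib.unified_diff(a, b, fromfile=f"v{old}", tofile=f"v{new}"))

    def rollback(self, to: int) -> int:
        """Re-publish an earlier version as the newest one; nothing is deleted."""
        return self.publish(self.versions[to - 1])


history = PolicyHistory("The assistant must not provide medical advice.\n")
history.publish("The assistant must not provide medical or legal advice.\n")
print(history.diff(1, 2))
history.rollback(1)  # version 3 has the same text as version 1
```

Rolling back by re-publishing, rather than deleting later versions, keeps the full change trail available for audit.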

Exports

CSV/JSON/PDF packages for governance workflows
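For the CSV and JSON formats, a control matrix export might look like the sketch below, using only the standard library. The column names are hypothetical, and PDF generation is omitted because it needs a third-party library.

```python
import csv
import io
import json

# Hypothetical control-matrix rows; column names are illustrative only.
rows = [
    {"requirement": "Policy 2.1", "control_id": "CTL-001",
     "enforcement_point": "guardrails:output", "evidence": "log:run-42"},
    {"requirement": "Policy 3.4", "control_id": "CTL-002",
     "enforcement_point": "gateway:input", "evidence": "log:run-43"},
]


def to_csv(rows: list[dict]) -> str:
    """Serialize rows to CSV text; assumes at least one row and uniform keys."""
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue()


def to_json(rows: list[dict]) -> str:
    """Serialize rows to pretty-printed JSON text."""
    return json.dumps(rows, indent=2)


print(to_csv(rows))
print(to_json(rows))
```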

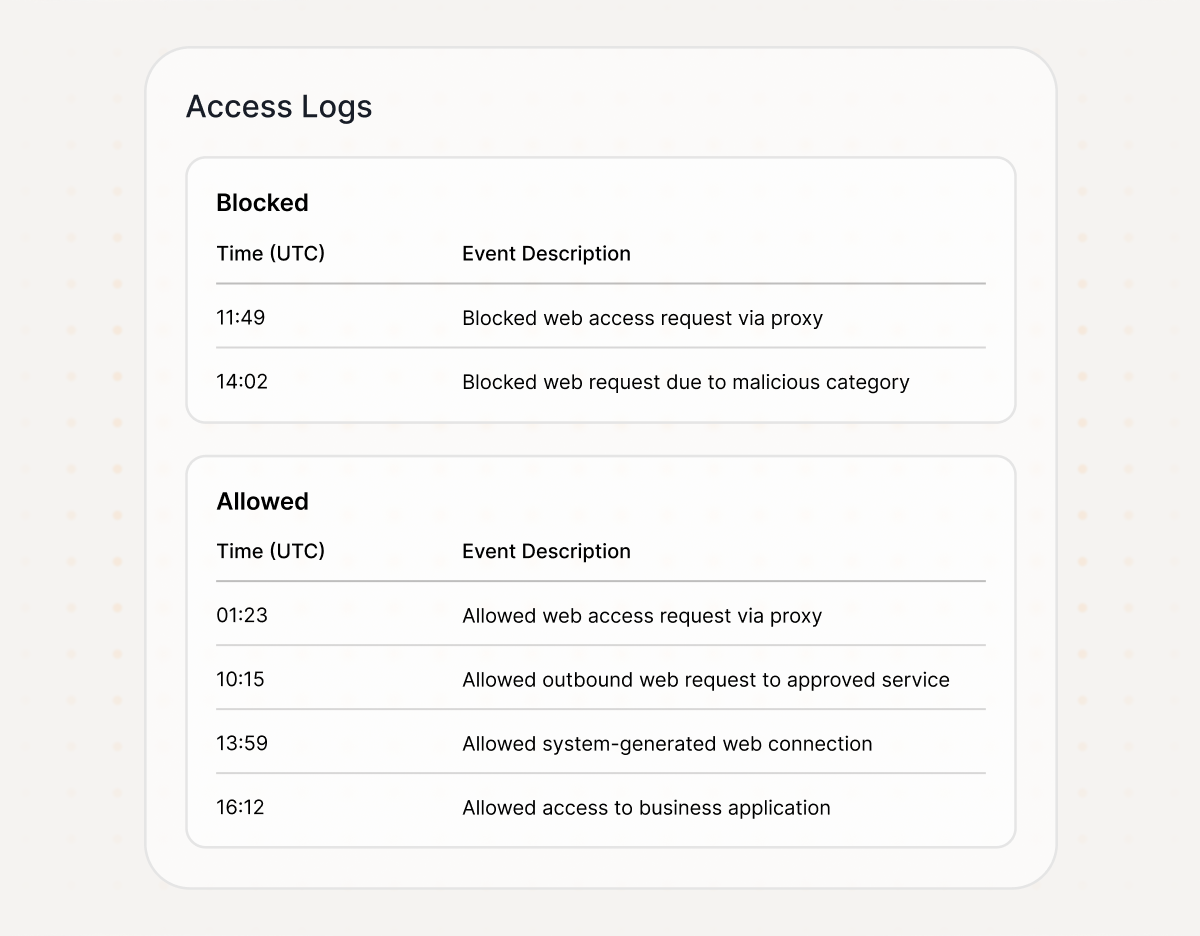

Coverage view

What’s tested (Red Teaming) and enforced (Guardrails/Gateway)
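The coverage idea reduces to set arithmetic over control IDs. This sketch assumes you already know which controls have red-team tests and which are enforced inline; the `coverage` function and its bucket names are hypothetical.

```python
def coverage(controls: set[str], tested: set[str], enforced: set[str]) -> dict[str, set[str]]:
    """Bucket each control by whether it is red-teamed, enforced, both, or neither."""
    return {
        "tested_and_enforced": controls & tested & enforced,
        "enforced_only": (controls & enforced) - tested,
        "tested_only": (controls & tested) - enforced,
        "uncovered": controls - tested - enforced,
    }


controls = {"CTL-001", "CTL-002", "CTL-003", "CTL-004"}
tested = {"CTL-001", "CTL-002"}    # e.g. controls with red-team tests
enforced = {"CTL-001", "CTL-003"}  # e.g. controls active in guardrails/gateway
report = coverage(controls, tested, enforced)
print(report["uncovered"])  # {'CTL-004'}
```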

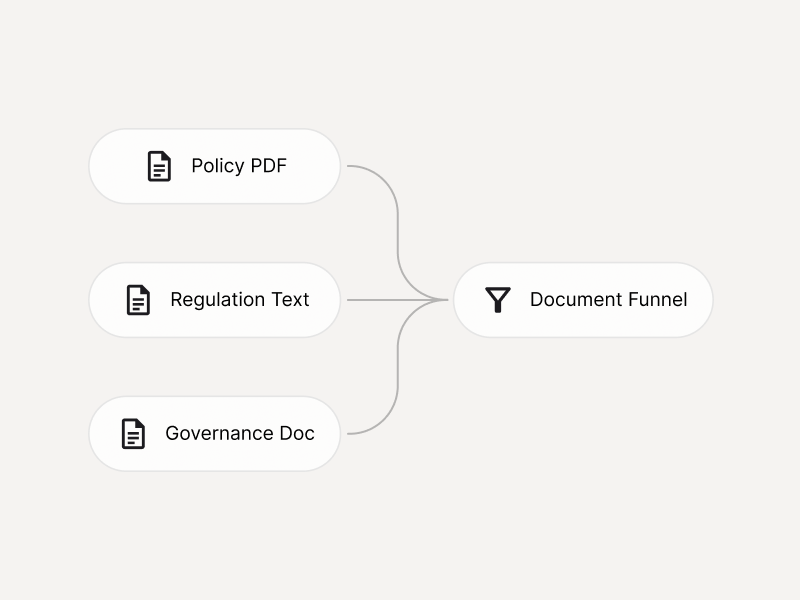

How policy → controls works

Ingest policy text or regulation PDFs

- Prompt injection (direct + indirect) across text/audio/vision

- Tool misuse, privilege escalation, unsafe actions

- Data exfiltration, secrets leakage, connector abuse

Generate control candidates with clause-level traceability

- Jailbreaks and refusal bypass

- Disallowed content, toxicity, brand-risk outputs

- Policy violations (tone, escalation, restricted topics)

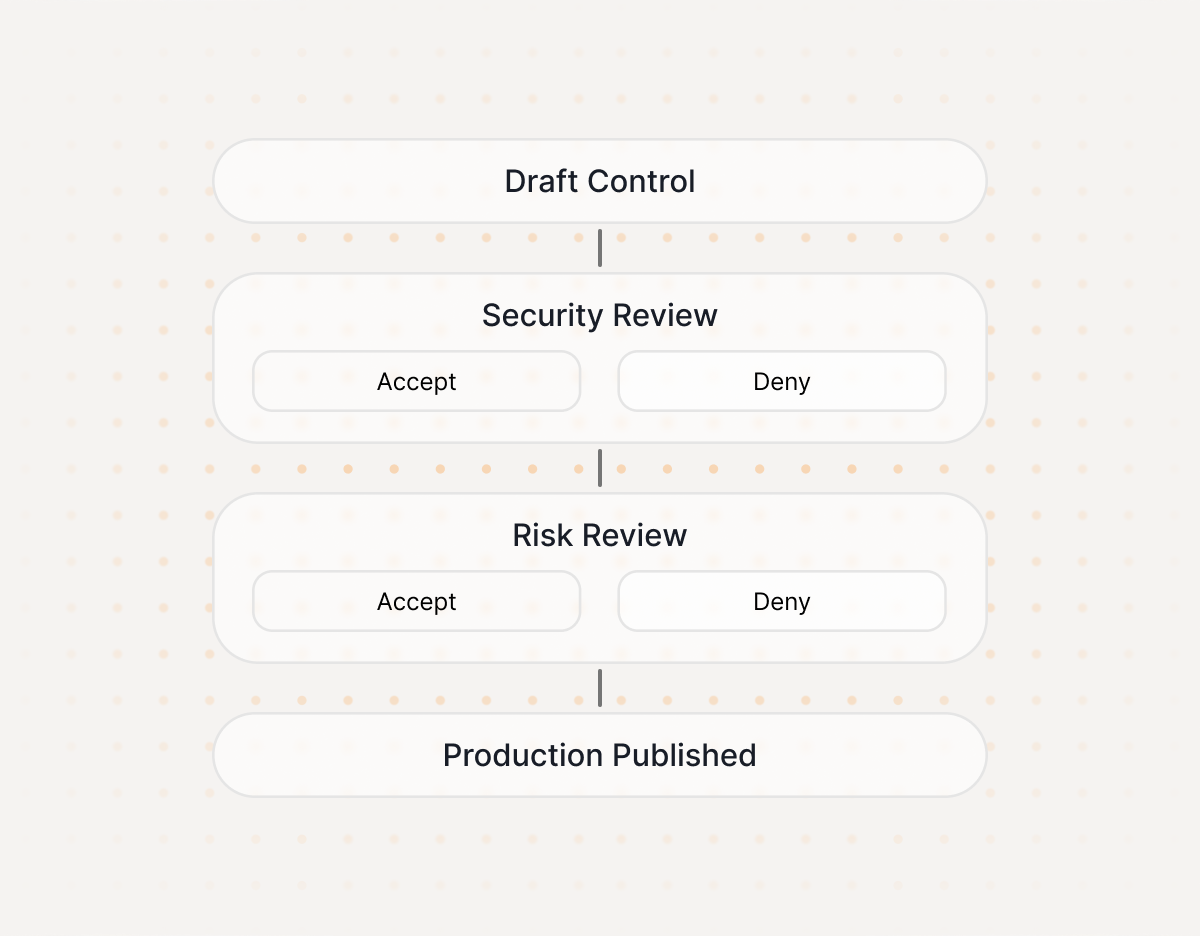

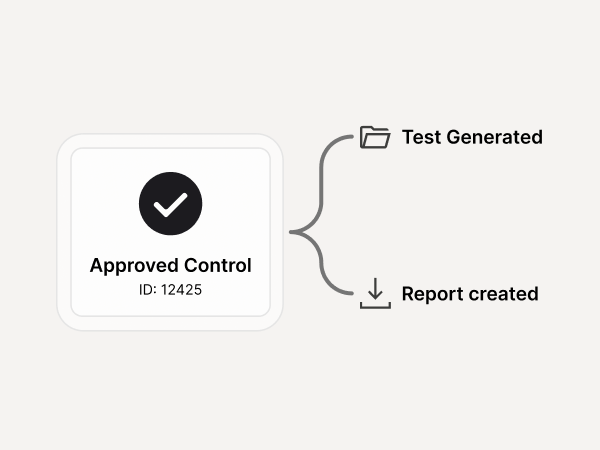

Review + approve controls, then publish to environments (dev/stage/prod)

- PII/PHI/PCI handling failures

- Data minimization and retention violations

- Evidence generation for internal controls and audits
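The ingest, generate, and review/publish flow can be sketched end to end as follows. This is a deliberately naive stand-in: it treats every sentence containing "must" as a control candidate and records its position in the source for traceability, whereas the real engine's extraction method is not described here. `Candidate`, `generate_candidates`, and `publish` are hypothetical names.

```python
import re
from dataclasses import dataclass


@dataclass
class Candidate:
    control_id: str
    source_clause: int  # 1-based index among the non-empty lines of the source text
    text: str
    approved: bool = False


def generate_candidates(policy_text: str) -> list[Candidate]:
    """Naive keyword rule (illustration only): each 'must' line becomes a candidate."""
    candidates = []
    lines = [line.strip() for line in policy_text.splitlines() if line.strip()]
    for i, line in enumerate(lines, start=1):
        if re.search(r"\bmust\b", line, re.IGNORECASE):
            candidates.append(Candidate(f"CTL-{len(candidates) + 1:03d}", i, line))
    return candidates


def publish(candidates: list[Candidate], env: str, published: dict[str, list[str]]) -> None:
    """Publish only approved candidates to one environment (e.g. dev/stage/prod)."""
    published.setdefault(env, []).extend(c.control_id for c in candidates if c.approved)


policy = """
The assistant must not provide medical advice.
Responses should be friendly.
The assistant must protect user privacy at all times.
"""
cands = generate_candidates(policy)
cands[0].approved = True  # a reviewer approves only the first candidate
published: dict[str, list[str]] = {}
publish(cands, "dev", published)
print(published)  # {'dev': ['CTL-001']}
```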

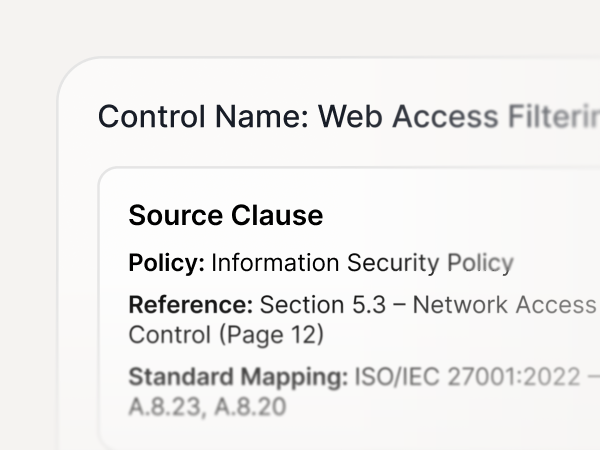

Traceability by default

Every control links back to:

The source clause

Document, section/page reference

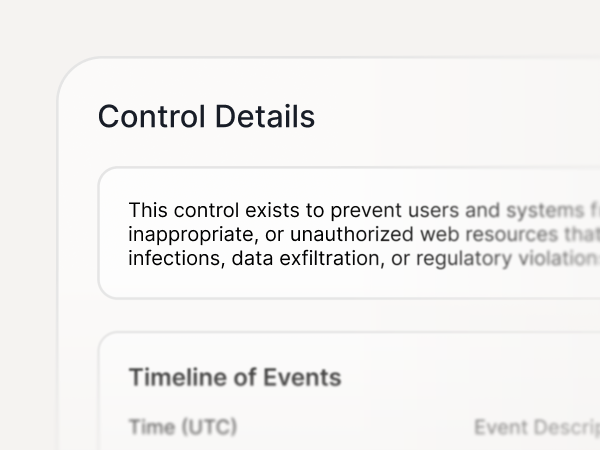

The rationale

Why this control was triggered

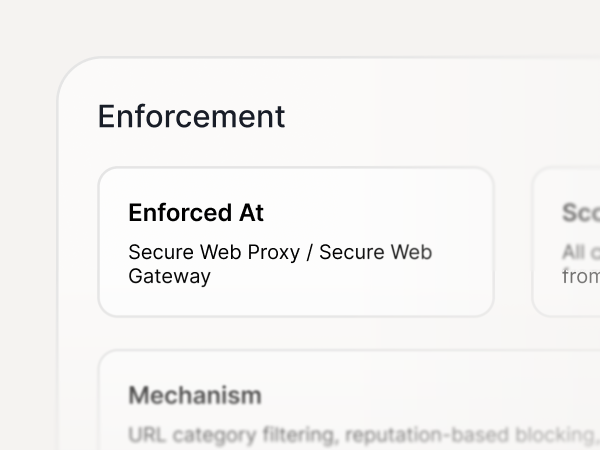

The enforcement

Where it’s applied

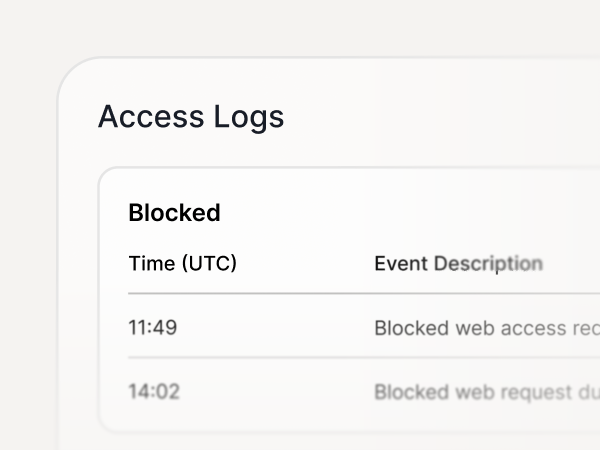

The evidence

What proves it ran

Control lifecycle and governance

Built for real, ongoing governance, not a one-time document.

Integrations

Operationalize governance where teams work

Workflows

Jira / ServiceNow

Security

Splunk / Sentinel / Datadog + webhooks
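As a generic illustration of the webhook path, the sketch below builds a JSON POST request for a control decision with the standard library's `urllib.request`. The payload fields and the URL are hypothetical; this page does not document the Policy Engine's actual webhook format.

```python
import json
import urllib.request


def build_webhook(url: str, control_id: str, decision: str, evidence_id: str) -> urllib.request.Request:
    """Build (but do not send) a JSON webhook request for one control decision."""
    payload = {"control_id": control_id, "decision": decision, "evidence_id": evidence_id}
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_webhook("https://siem.example.com/hooks/ai", "CTL-002", "blocked", "log:run-43")
# urllib.request.urlopen(req, timeout=5) would send it; omitted so the sketch runs offline.
```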

GRC / Exports

Attach evidence and control matrices to requests.

Built for speed and scrutiny

Low-latency evaluation for inline enforcement

Deeper explanation for audit-grade reviews

Minimal necessary context captured, with role-based access

OWASP, NIST, and the EU AI Act included as defaults
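One way to read "low-latency inline, deeper explanation for audits" is as two evaluation paths over the same rules: a fast check that stops at the first hit, and a slower pass that reports every match with its source. In this sketch, regexes stand in for whatever detectors a real engine uses, and `Rule`, `RULES`, `allowed`, and `explain` are hypothetical.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Rule:
    control_id: str
    pattern: re.Pattern
    source: str  # where the rule came from, kept for audit output


# Hypothetical rules, compiled once at startup so inline checks stay cheap.
RULES = [
    Rule("CTL-001", re.compile(r"\b(dosage|prescribe|diagnosis)\b", re.IGNORECASE), "Policy 2.1"),
    Rule("CTL-002", re.compile(r"\b\d{3}-\d{2}-\d{4}\b"), "Policy 3.4"),
]


def allowed(text: str) -> bool:
    """Inline fast path: any() stops at the first matching rule."""
    return not any(rule.pattern.search(text) for rule in RULES)


def explain(text: str) -> list[dict]:
    """Audit path: evaluate every rule and report each match with its source."""
    findings = []
    for rule in RULES:
        match = rule.pattern.search(text)
        if match:
            findings.append(
                {"control_id": rule.control_id, "match": match.group(0), "source": rule.source}
            )
    return findings
```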

Generate a policy for your organization

Get started with the API in minutes

```python
from enkryptai_sdk import policy_client

# Natural-language policy rules to convert into enforceable controls
policy_text = """
The assistant must not provide medical advice.
The assistant must not promote violence or hate speech.
The assistant must protect user privacy at all times.
"""

# Upload the policy text under a descriptive name
policy_client.upload(
    name="enterprise_healthcare_security_policy",
    policy_text=policy_text,
)
```

Sits at the center of the platform

Red Teaming

Generate tests from controls and track closure

Guardrails / MCP Gateway

Enforce controls inline

Monitoring

Observe outcomes and drift over time
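A simple way to make "drift" concrete is to compare the share of blocked decisions between a baseline window and a recent one. The function names and the 10-point threshold below are hypothetical choices for illustration.

```python
def block_rate(decisions: list[str]) -> float:
    """Fraction of decisions in a window that were 'blocked' (0.0 for an empty window)."""
    return decisions.count("blocked") / len(decisions) if decisions else 0.0


def drifted(baseline: list[str], current: list[str], threshold: float = 0.10) -> bool:
    """Flag drift when the block rate moves by more than `threshold` between windows."""
    return abs(block_rate(current) - block_rate(baseline)) > threshold


baseline = ["allowed"] * 9 + ["blocked"]     # 10% blocked
current = ["allowed"] * 7 + ["blocked"] * 3  # 30% blocked
print(drifted(baseline, current))  # True
```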

Compliance

Export mappings and evidence packages

Frequently Asked Questions

What does the Policy Engine do?

It converts natural-language governance policies and regulation PDFs into structured controls that you can enforce and audit.

What are the outputs?

- Control set with control_id and mappings to your source policy/regulation

- Versioned policy pack that can be enforced by Guardrails and monitored in production

Is it explainable?

Yes. Each control links back to its source text and includes a rationale, so teams can review and approve changes.

How does this map to Guardrails?

Approved controls are published as a versioned policy pack that Guardrails and the MCP Gateway enforce inline, and that can be monitored in production.

Can we customize policies to our brand and risk tolerance?

Yes. Tone, escalation paths, restricted topics, and sensitive-action rules can all be tuned and versioned.

How do teams govern changes?

Policies are versioned; you can review diffs, stage rollouts, and roll back safely.
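Staged rollouts can be pictured as promoting one version through dev, stage, and prod in order, one step at a time. This is a minimal sketch assuming each environment simply records the version number it runs; `STAGES` and `promote` are hypothetical.

```python
STAGES = ["dev", "stage", "prod"]


def promote(deployed: dict[str, int], version: int) -> str:
    """Move `version` into the earliest stage that does not run it yet; return that stage."""
    for stage in STAGES:
        if deployed.get(stage) != version:
            deployed[stage] = version
            return stage
    raise ValueError(f"version {version} is already live in every stage")


deployed = {"dev": 1, "stage": 1, "prod": 1}
print(promote(deployed, 2))  # dev
print(promote(deployed, 2))  # stage
```

Because promotion always fills the earliest stage first, a version cannot reach prod without passing through dev and stage.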

Does it support multiple regulations and internal standards?

Yes. You can combine internal governance requirements with external standards in a single control model.
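A single control model can be sketched as a mapping from each control to every source clause it satisfies, so one control can serve an internal standard and an external framework at once. The framework names and clause labels below are placeholders, not real regulatory mappings.

```python
from collections import defaultdict

# Hypothetical (source, clause, control_id) mappings; labels are placeholders.
mappings = [
    ("internal-policy", "2.1", "CTL-001"),
    ("framework-a", "7", "CTL-003"),
    ("framework-b", "1.1", "CTL-001"),
]


def merge(mappings: list[tuple[str, str, str]]) -> dict[str, list[str]]:
    """Group source clauses under the control that satisfies them."""
    model: dict[str, list[str]] = defaultdict(list)
    for source, clause, control_id in mappings:
        model[control_id].append(f"{source} {clause}")
    return dict(model)


print(merge(mappings))
```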