Generative AI Security: The Shared Responsibility Framework

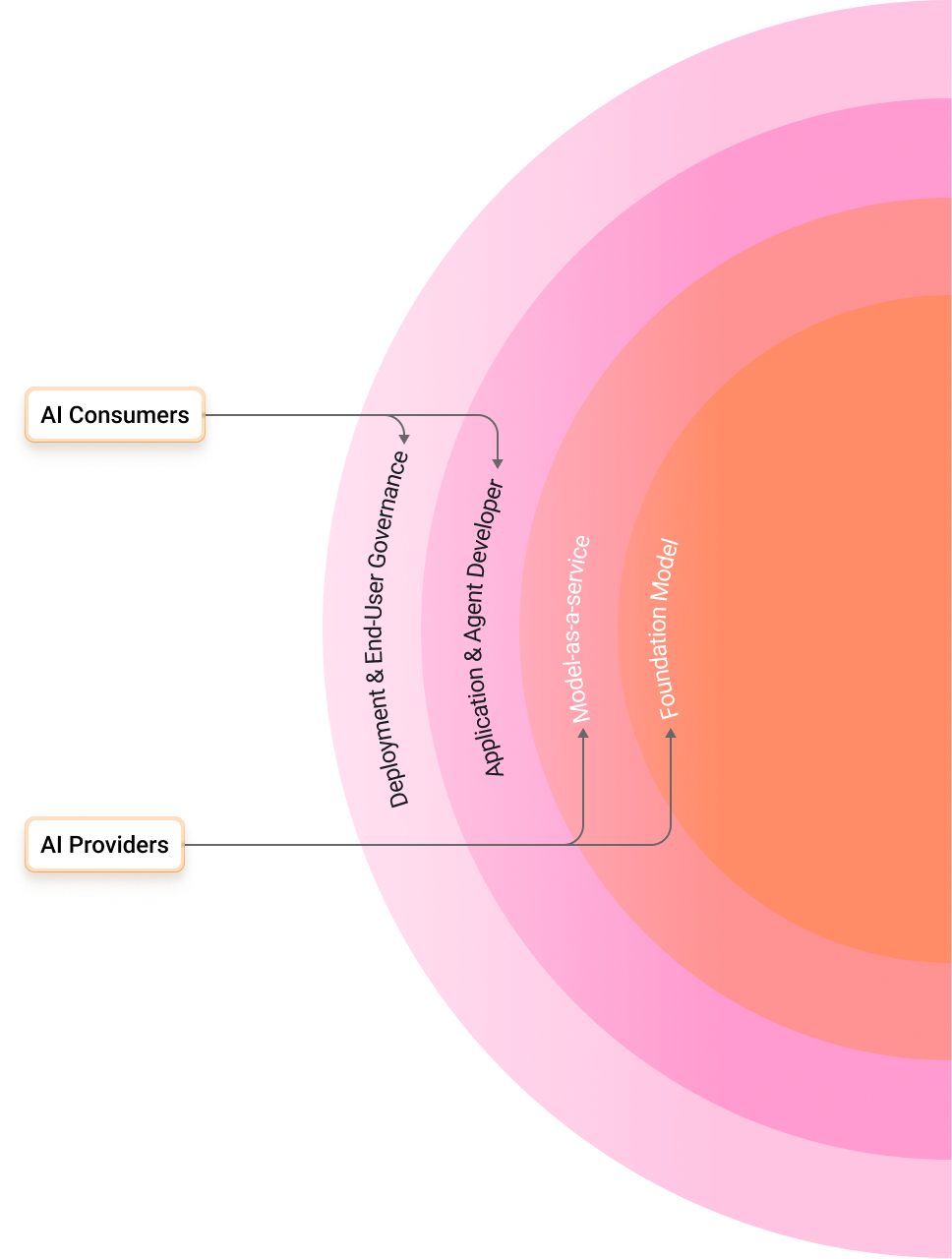

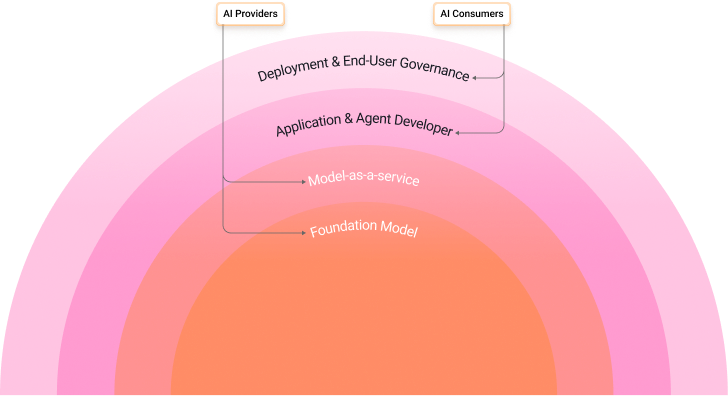

The Four Layers of Gen AI Responsibility

Generative AI brings new risks and opportunities, making it essential to clearly define responsibilities. Just as cloud providers secure infrastructure while customers manage applications, Gen AI requires its own layered approach. By assigning ownership across four layers, organizations can adopt AI with confidence, ensuring safety, compliance, and measurable value.

AI Providers (Layers 1 & 2): Manage the foundation model and APIs, including safe training data, initial alignment, infrastructure hardening, and baseline content filters.

AI Consumers (Layers 3 & 4): Govern how AI is applied, with responsibilities for fine-tuning models, building domain-specific guardrails, monitoring outputs, enforcing compliance, and training users.

Continuous Feedback Loops: Keeping Everything Aligned

Provider, Developer pipelines, Organization, Users

9 Questions Every CISO Should Ask Their AI Vendors

Training Data & Alignment

Model Security

API & Infrastructure

Versioning

Data Privacy

Guardrails

Agent Security

Monitoring & Transparency

Regulatory Compliance

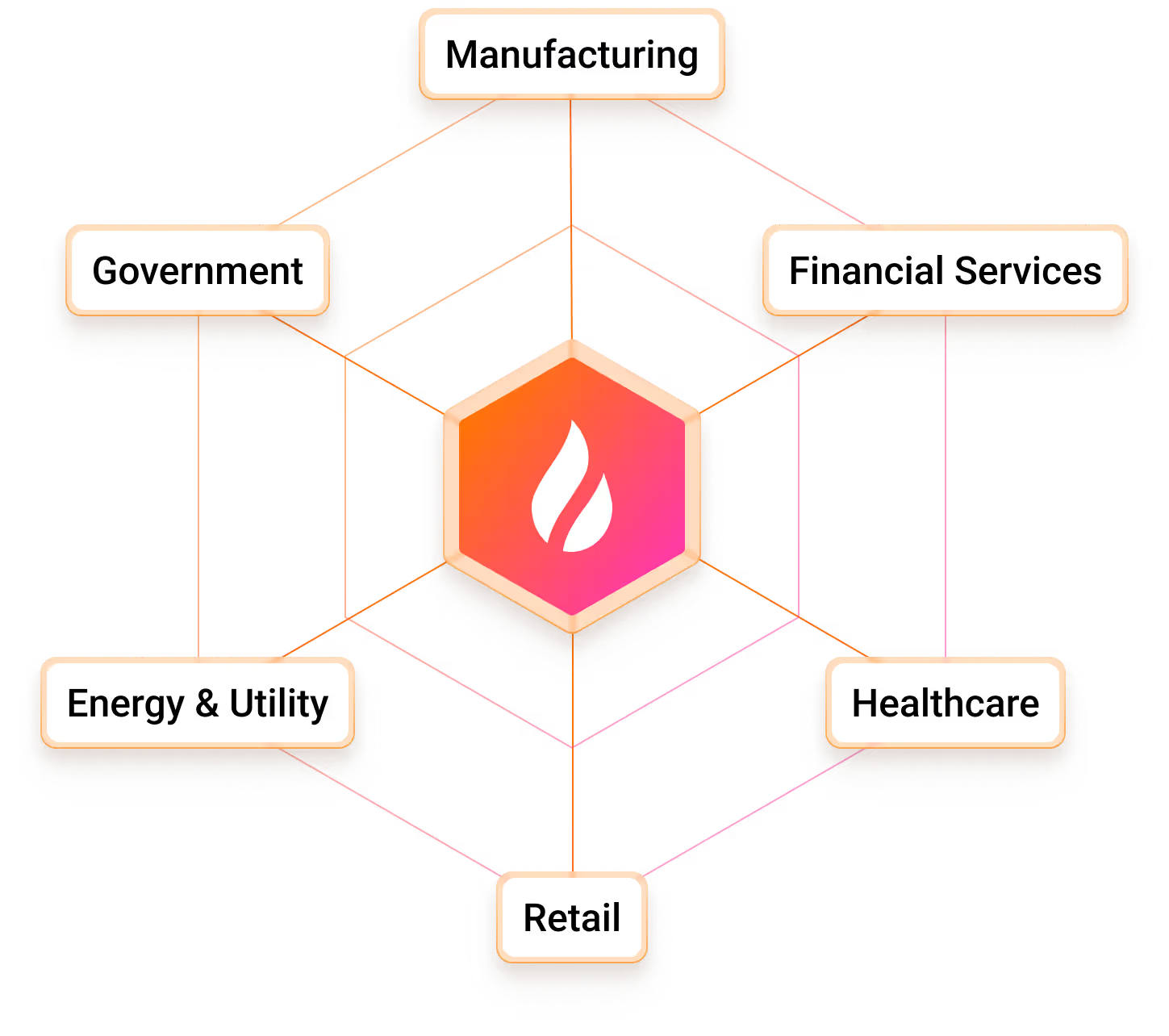


Contributors

Rajendra Gangavarapu

Amanda Hartle

Inderpreet Kambo

Jagadeesh Kunda

Rock Lambros

Sunil Mallik

Sekhar Sarukkai

Nishil Shah

Tara Steele

Aditya Thadani

Abhishek Trigunait

Dennis Xu

Get Guidance on Shared Responsibility

The AI Shared Responsibility Framework helps CISOs and enterprise security leaders align accountability across providers, developers, and compliance teams. Learn how to operationalize AI governance, enforce real-time guardrails, and measure what truly matters: outcomes, not layers.
