Continuously break your Agents - before attackers do

Enkrypt AI Red Teaming finds real failure modes across text, audio, and vision—including agents, tools, RAG, and MCP—and turns them into prioritized fixes and evidence-ready reports for security, risk, and compliance.

What you can red team

Test what actually ships - not just the model.

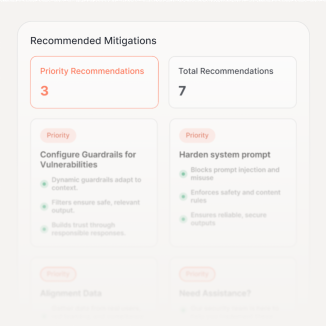

What you get

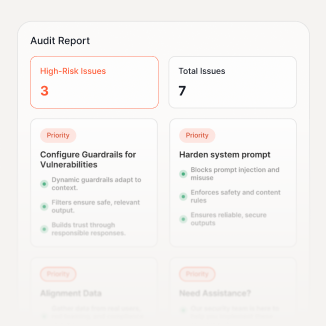

Red Team Report

Executive summary, top risks, and system-level recommendations

Findings Register

Severity, surface, reproduction steps, and suggested fixes

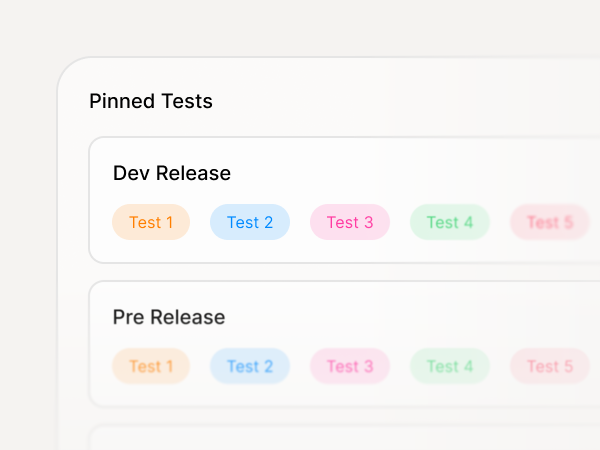

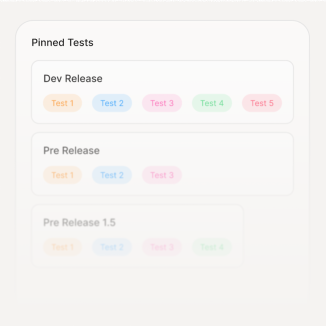

Regression Suite

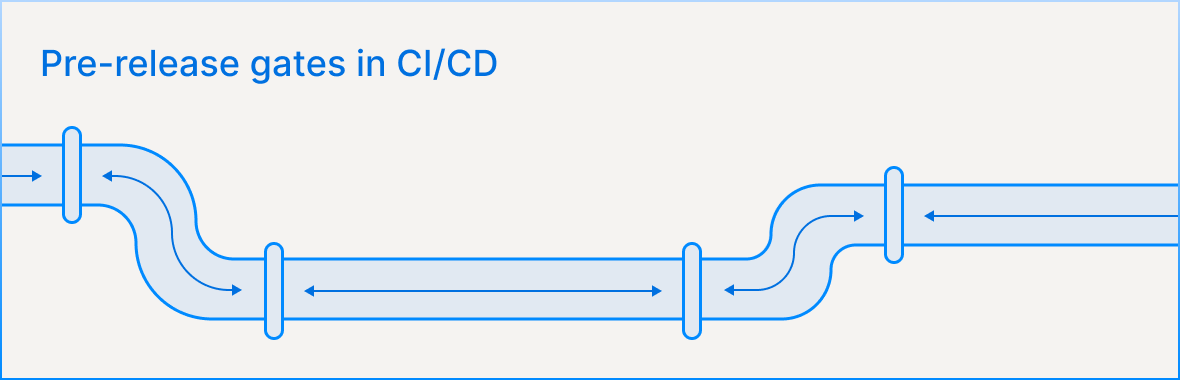

Pinned tests you can run in CI before each release

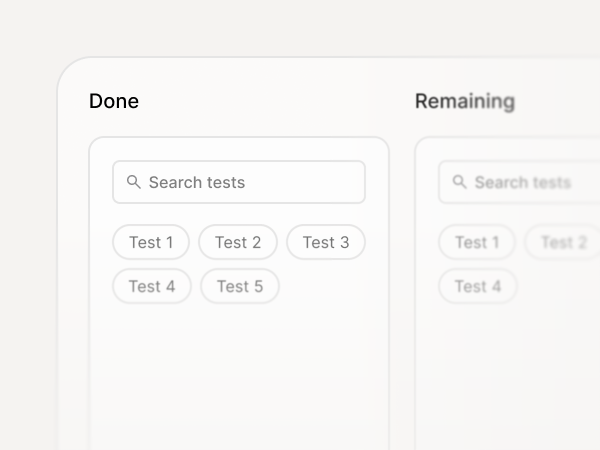

Coverage Map

What was tested (agents, RAG, tools, modalities, languages) and what remains

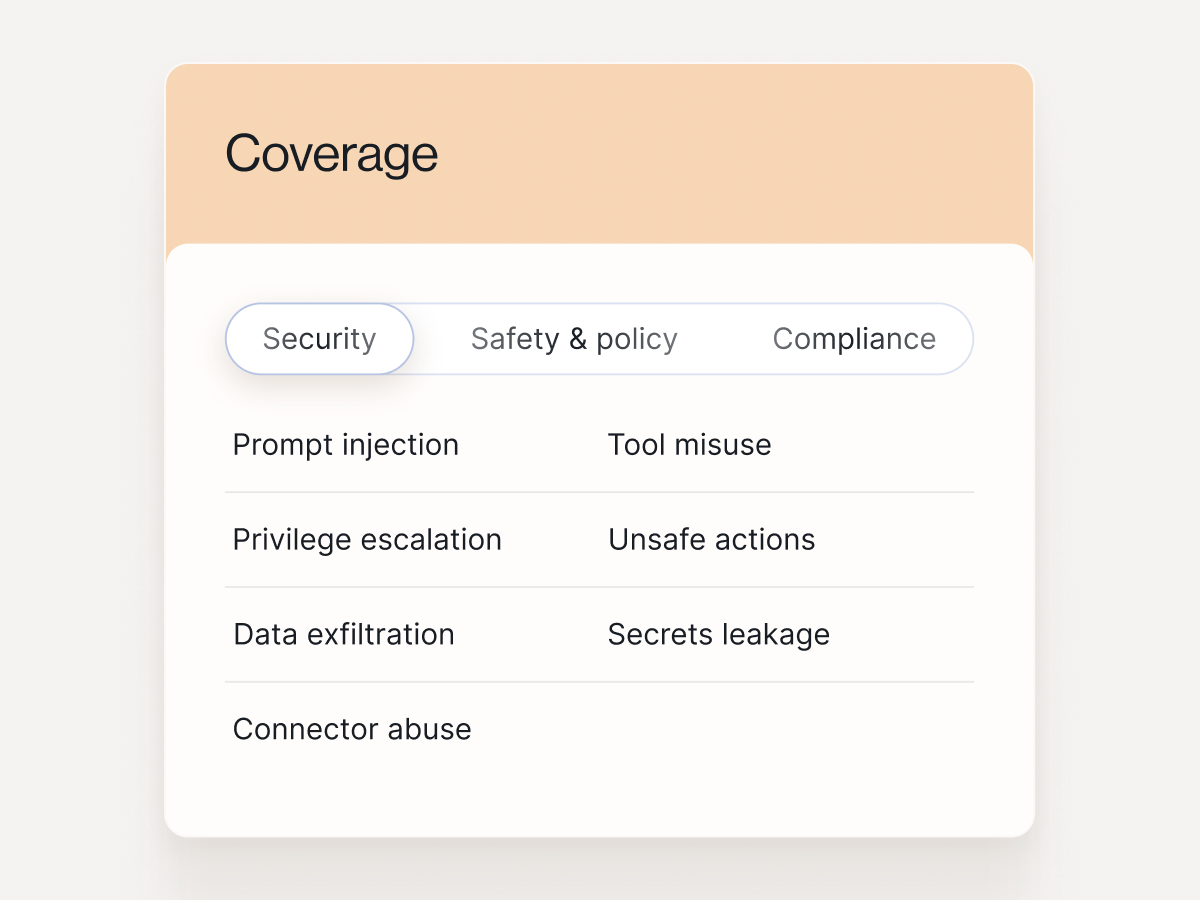

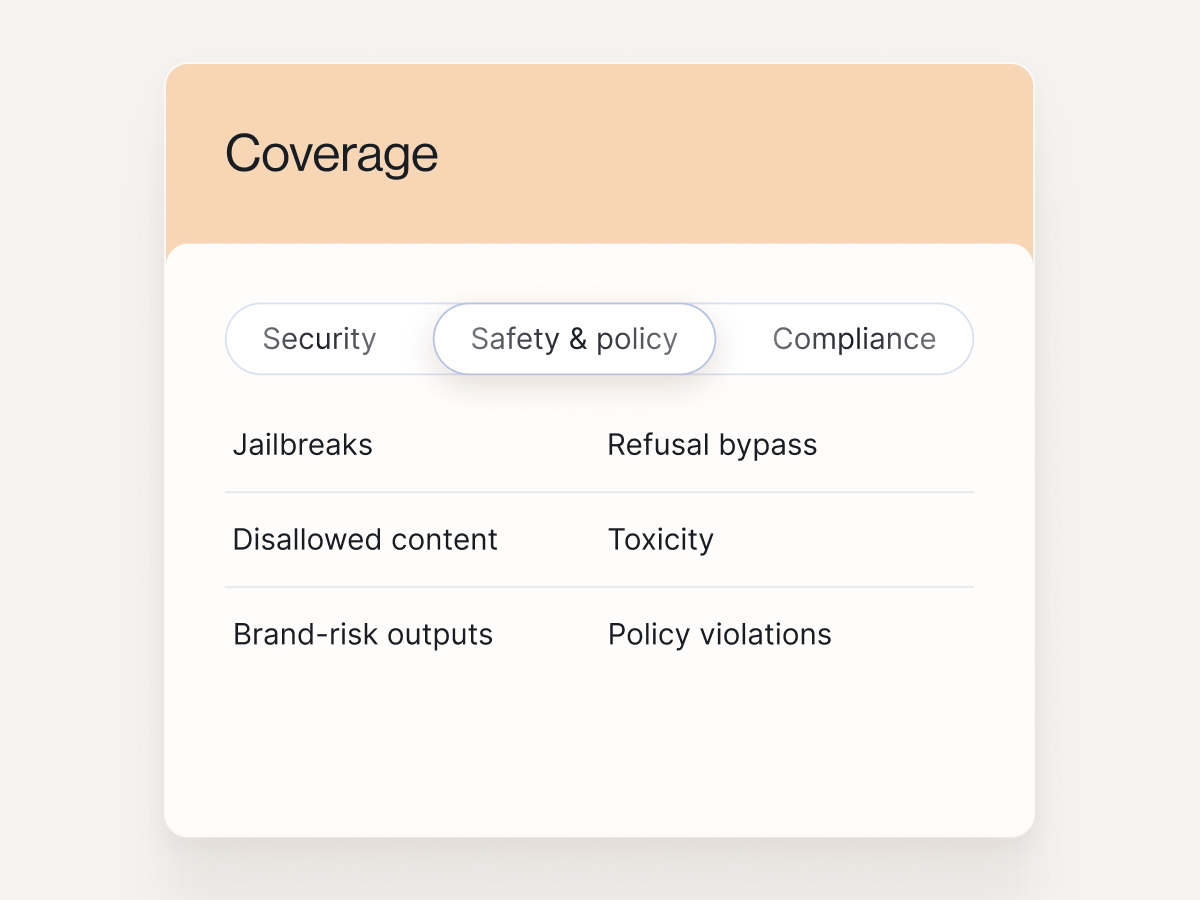

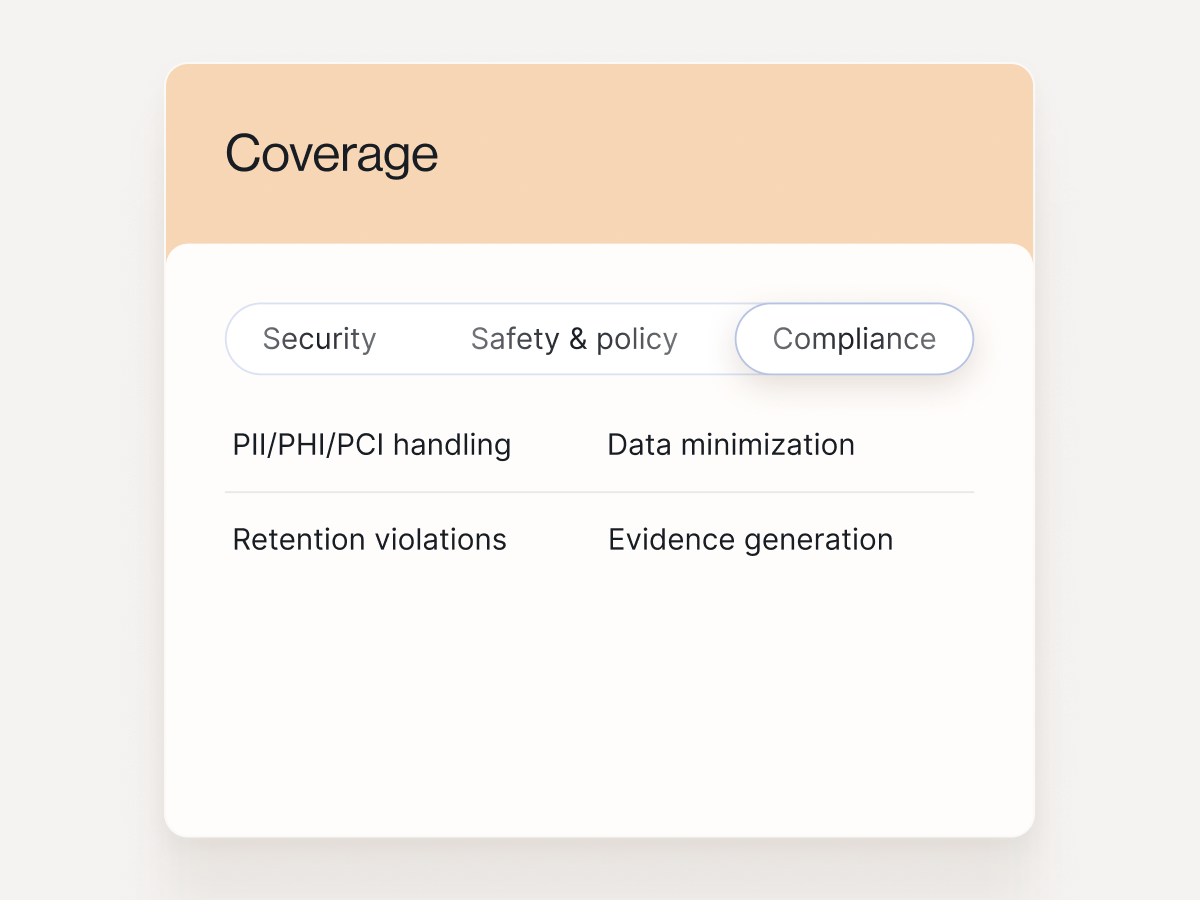

Coverage that maps to real risk

Security

See capabilities

Safety & policy

See capabilities

Compliance

See capabilities

Red Team top models

Choose model

gpt-5.2

claude-3-opus-20240229

gpt-5-nano

claude-3-5-sonnet-20241022

gpt-5-mini

gpt-5-O

Explore more models

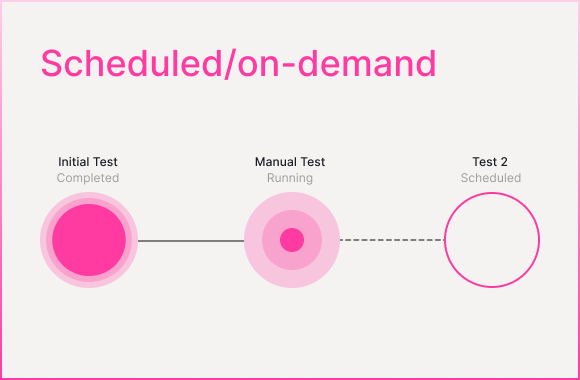

Built for production velocity

Run red teaming where you build:

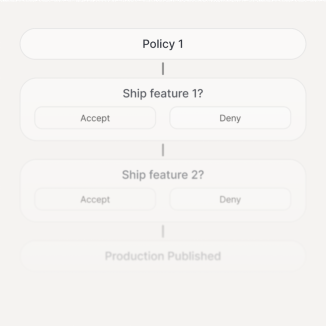

Pre-release gates in CI/CD

Scheduled and on-demand testing in staging and production

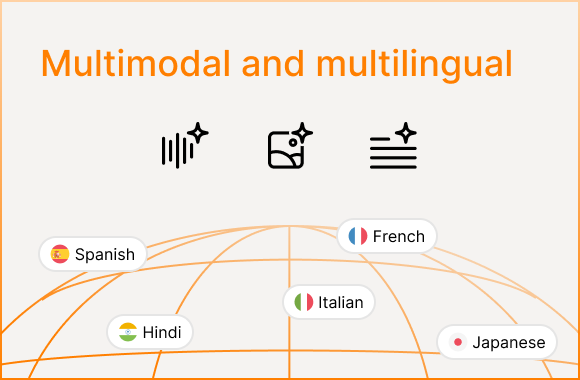

Red team multimodal and multilingual agents

Compliance Mapping (NIST, OWASP, EU AI Act)

Get Started with API in minutes

pip install enkryptai-sdk

from enkryptai_sdk import redteam_client,

RedTeamConfig

redteam_task = redteam_client.add_custom_task(

config=RedTeamConfig

)

# TASK SUBMITTED!

Go to app.enkryptai.com/redteam to view results

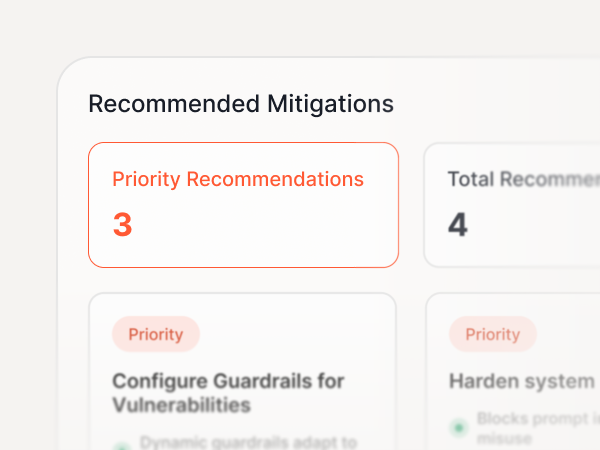

Outputs teams actually use

Regression suites to prevent repeat failures

Clear repro steps and remediation guidance

Ship / no-ship decisions tied to policy and risk

Evidence trails for governance, audits and investigations

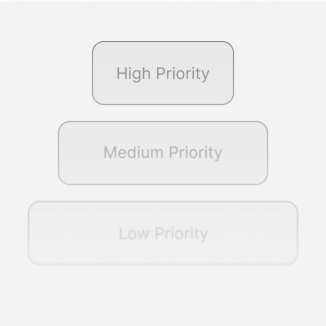

Prioritized vulnerabilities with severity and exploitability context

Exports to tickets, SIEM, and GRC workflows

Frequently Asked Questions

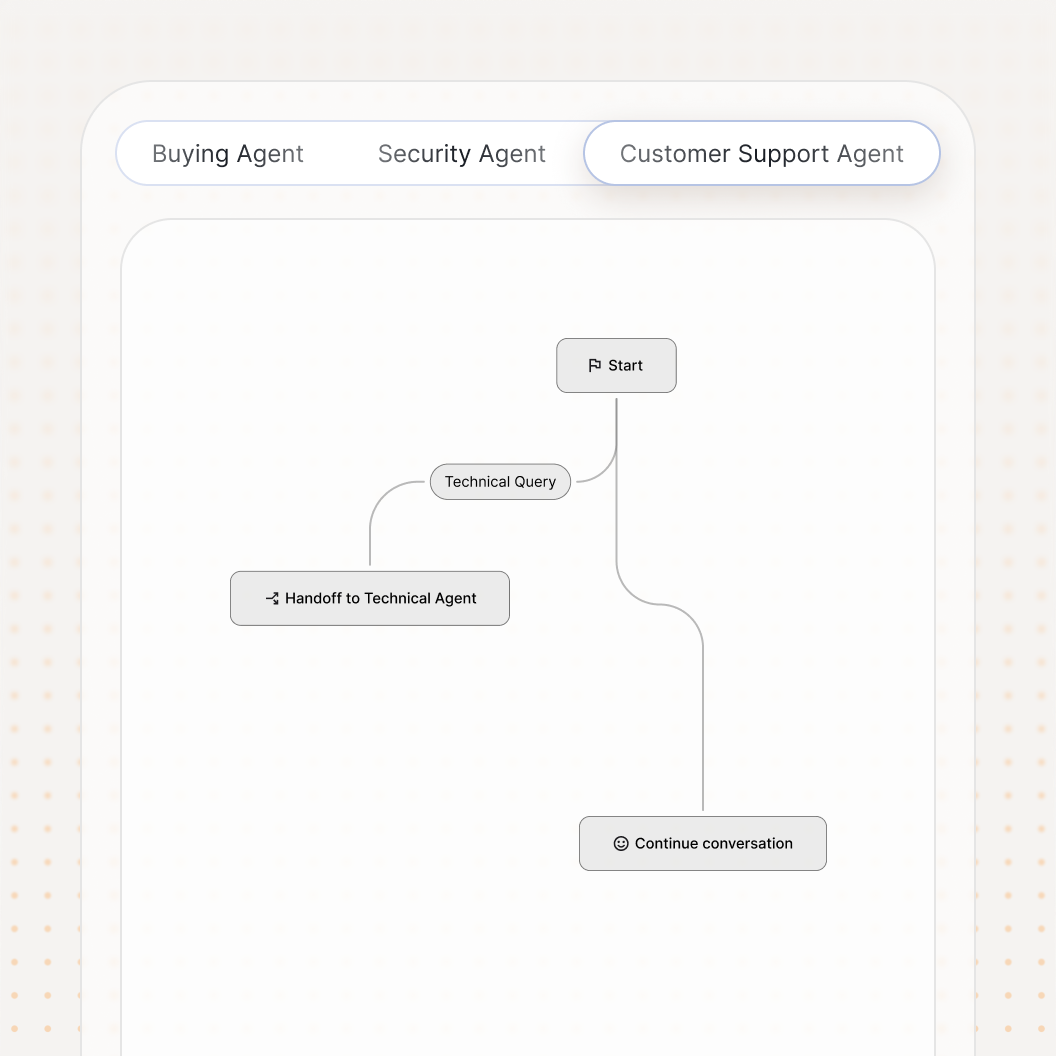

Do you cover “agentic” failures beyond prompt injection?

- Agent goal hijack (objective redirection mid-task)

- Rogue agents (loops/retries/autonomy drift outside intended behavior)

- Cascading failures (one weak link triggers unsafe downstream actions)

- Insecure inter-agent communication (unsafe delegation, message injection, context leakage)

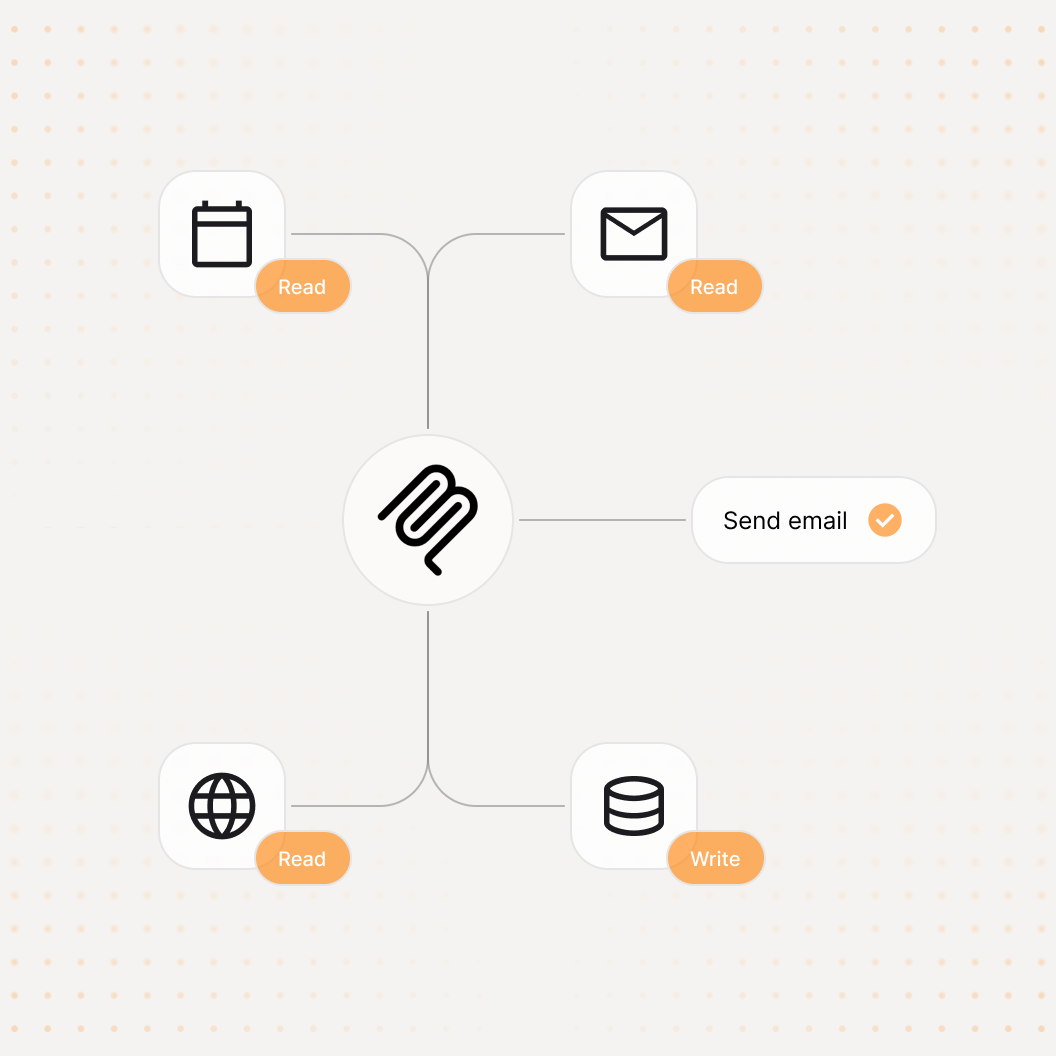

What does “tool misuse” include?

- Unsafe tool calls, unintended tool execution, and over-broad permissions

- Dangerous actions (e.g., sending data externally, modifying records)

- Connector abuse and tool-output prompt injection

How do you test identity and privilege abuse?

- Role bypass attempts, tenant crossover attempts, and privilege escalation

- Policy enforcement by role/tenant/context (where identity is available)

Do you cover supply-chain risk in agent tool ecosystems?

- Agentic supply chain vulnerabilities (untrusted MCP servers/tools, poisoned tool catalogs, unsafe dependencies)

- Allowlist/denylist recommendations and least-privilege checks

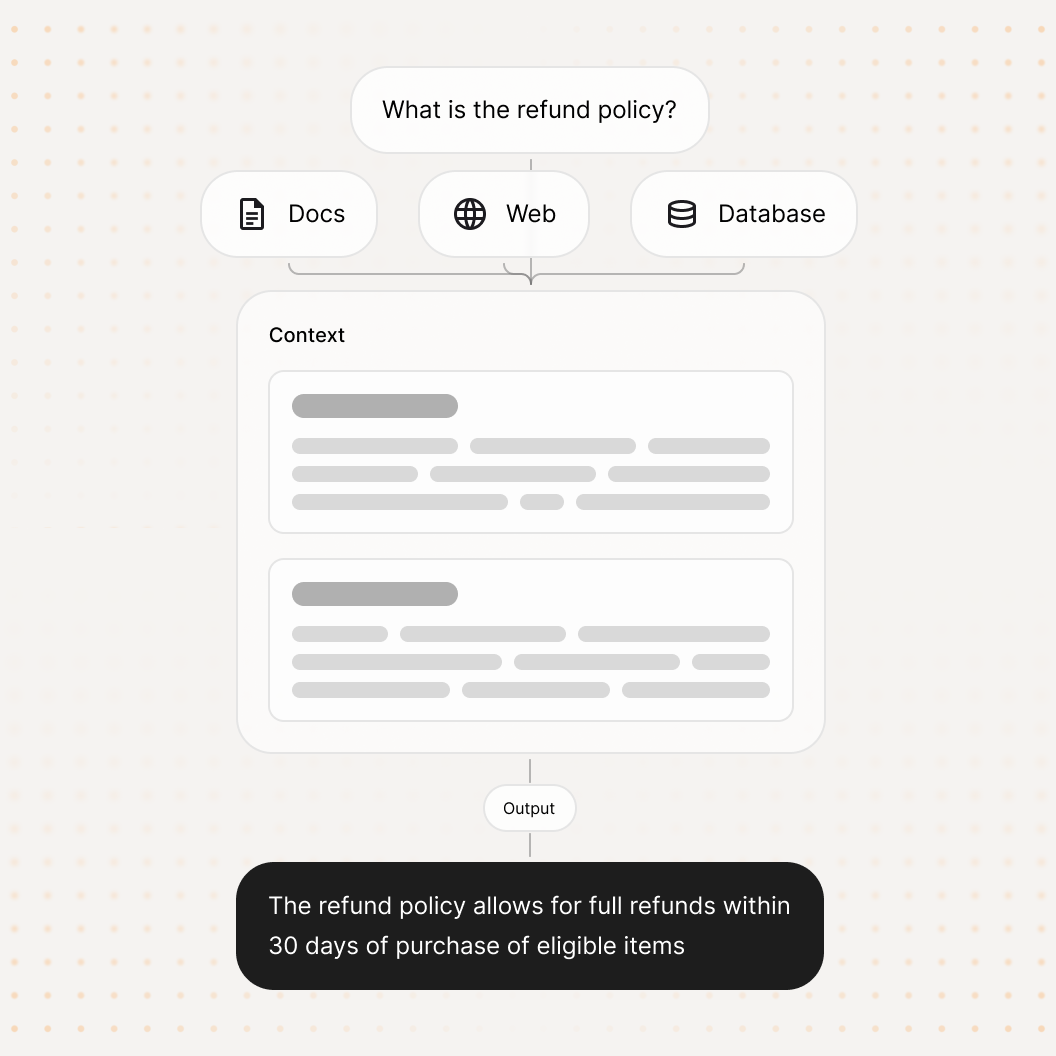

Do you test poisoning attacks?

- Memory poisoning (persistent steering via long-term memory / vector stores)

- Retrieval poisoning (RAG sources/web results that manipulate outputs or actions)

Is multimodality included?

- Text, vision, and audio testing

- Image+text prompt smuggling (overlays/hidden instructions)

- Audio injection/transcription manipulation

- Cross-modal chains (image→text→tool, audio→text→tool)