LLM Fine-Tuning: The Risks and Potential Rewards

What is LLM Fine-Tuning?

Large language models are exceptionally good generalists but fail to produce satisfactory results for domain specific use cases. Few such use cases are detecting cybersecurity threats, medical research, analysis of financial information and legal agents. With fine-tuning, one can leverage the full potential of Large Language Models for domain specific use cases. In this process, we provide the use case specific data to perform fine-tuning to accommodate the information to the LLM.

Benefits of LLM Fine-Tuning

- Fine-tuning can significantly enhance a model's performance in specialized areas by adapting it to understand the nuances and terminology of that field.

- Fine-tuning also allows for more precise control over the model’s behavior, helping to mitigate issues like bias, hallucinations, or generating content that does not align with your requirements.

- Fine-tuning always works better than RAG systems when you need a specialized, self-contained model with high performance on a specific task or domain.

Adversarial Impacts of LLM Fine-Tuning

While fine-tuning has a substantial number of benefits, it also comes with its own set of drawbacks. A known drawback is that fine-tuning is expensive. But the story does not end here. Our tests have found that fine-tuning increases the risks associated with a Large Language Model like Jailbreaking, Bias, Toxicity by 1.5x [Figure 1].

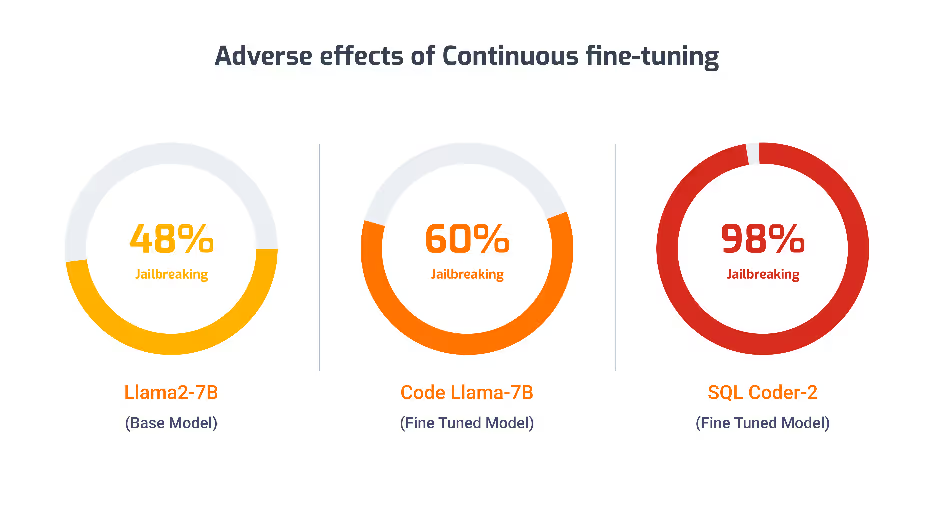

Furthermore, if the fine-tuning process is continued, the risk increases so much that the model gets jailbroken on every malicious prompt [Figure 2].

Why do the Risks Increase with Fine-Tuning?

There are some theories that explore why risk increases. A model undergoes safety alignment during the training process where the model is taught `how to say no` to malicious queries. Internally, the alignment process changes the model weights. When a model is fine-tuned, the model weights are changed further to answer domain specific queries. This causes the model to forget its safety training leading it to respond poorly. Increased risk due to fine-tuning is also an active area of research in academia as well as industry.

Conclusion

While fine-tuning can enhance the model performance, it also amplifies risks in the model. It becomes crucial to address these risks by using either Safety Alignment or Guardrails. For more details on how we derived these numbers, check out the paper our team published [1].

LLM Fine-Tuning Video

Watch this 1.30 min video that highlights the variety of risks associated with LLM fine-tuning.

References

[1] Divyanshu Kumar, Anurakt Kumar, Sahil Agarwal, Prashanth Harshangi. Fine-Tuning, Quantization, and LLMs: Navigating Unintended Outcomes arXiv, July 2024.

%20(1).png)