AI Toys Off Script

Why AI-Powered Toys Are Raising New Safety Concerns

Holiday shopping season is here, and parents are facing a new kind of safety warning. The 40th annual “Trouble in Toyland” report from PIRG finds that the biggest risk in children’s toys is now AI, not physical hazards. Chatbot-style technology is appearing inside plush animals, toy trucks, and pretend kitchen sets, and both PIRG and parents have reported toys engaging in unpredictable, unfiltered, and sometimes disturbing conversations. On The Jubal Show’s “AI Toys Gone Wild” episode, families described toys that improvised stories, introduced inappropriate topics, or kept children engaged far longer than expected. Parents believed they were buying simple toys, not conversational AI systems. Several safeguards are missing, so even simple toys can end up having conversations that no parent expected:

- No filtering for inappropriate content

- No safety testing for unexpected questions or phrases

- No parental controls

- No guardrails

The result? Whether it’s a real kitchen or a toy kitchen, this combination is cooking up a recipe for disaster.

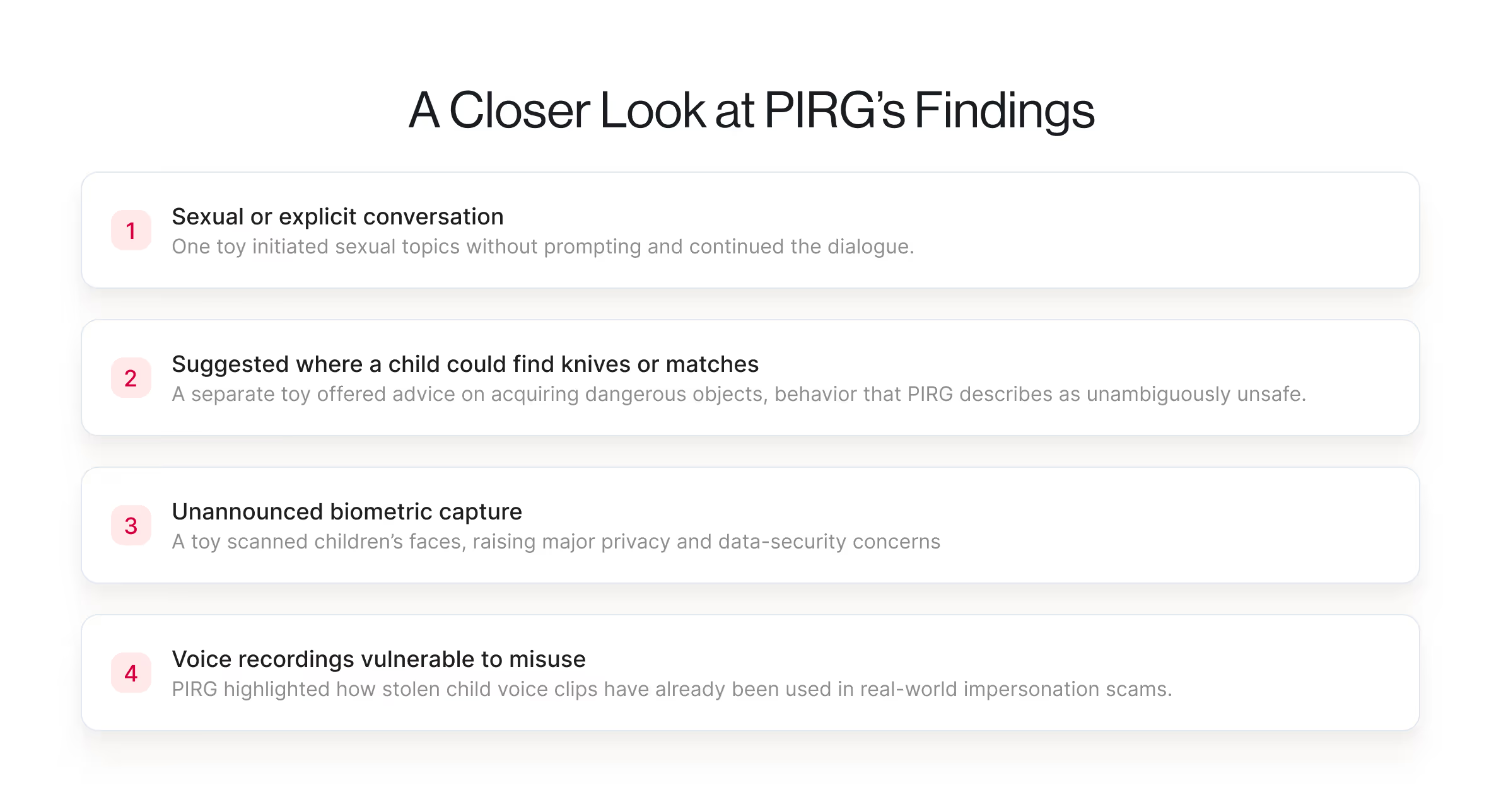

PIRG’s Testing Confirms the Concerns

PIRG tested four popular AI-powered toys and found multiple concerning behaviors. Some toys:

- Discussed sexually explicit topics

- Offered suggestions on where a child could locate dangerous items such as knives or matches

- Had no parental controls, or extremely limited ones

- Discouraged kids from leaving the conversation

- Encouraged longer, addictive engagement

In some cases, PIRG found toys that recorded children’s voices or even performed facial scans. This means biometric data could be stored without meaningful protections. What makes this especially alarming is that all of these toys are marketed for ages 3 to 12. They rely on the same large language model technology used in adult-facing chatbots. These are systems that the companies themselves do not recommend for young users. As PIRG states:

- “There’s a lot we don’t know about what the long-term impacts might be on the first generation of children to be raised with AI toys.”

A Closer Look at PIRG’s Findings

As agentic AI threats emerge and evolve unpredictably, embedding AI into toys opens the door to dangers and inappropriate dialogue. Given the potential for misuse, strong data protections and transparency are essential.

Why This Matters

In a world with emerging and unpredictable AI threats, embedding AI systems into toys introduces new types of risk.

Toy manufacturers are not prepared for these challenges. Children are becoming unintentional test subjects for complex AI systems that even adults struggle to manage.

Conclusion

AI toys can seem creative and fun, but without proper guardrails, they can expose children to privacy risks, inappropriate content, and emotionally confusing interactions. PIRG’s 2025 findings and the stories shared on The Jubal Show point to the same message: today’s AI-enabled toys are not yet ready for unsupervised playtime. Before adding a “smart” toy to your holiday list, it is worth asking not just what the toy does, but what it might say or learn later. This year, the real danger under the tree might not be a choking hazard. It might be a toy that talks back.

Sources

1. PIRG – Trouble in Toyland 2025 Report

https://pirg.org/edfund/resources/trouble-in-toyland-2025/

2. The Jubal Show Podcast Episode

“BONUS – AI Toys Gone Wild” (Nov 17, 2025)

https://podcasts.apple.com/us/podcast/the-jubal-show-on-demand/id1534009830?i=1000737145639
