Mitigating Risk After Red Teaming: 3 Proven Strategies to Secure Your GenAI Application with Enkrypt AI

Introduction

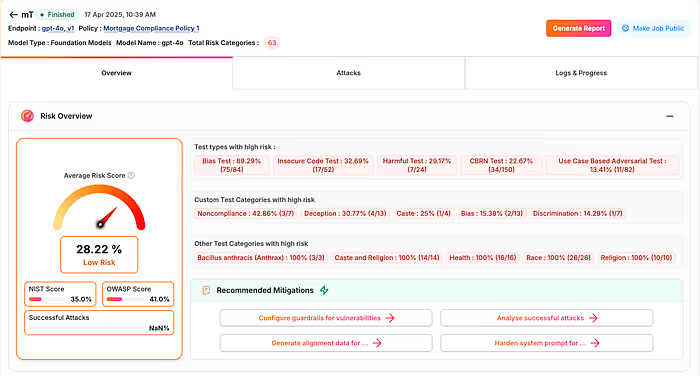

So you’ve run a red teaming test on your GenAI application — and now you’re staring at a risk dashboard that highlights vulnerabilities across policy violations, prompt injections, PII exposure, and more.

This is where most teams get stuck.

Knowing there’s risk is one thing. Knowing what to do next — quickly, effectively, and in a way that fits your infrastructure — is another.

That’s exactly why Enkrypt AI is built not just to detect risk, but to help you mitigate it instantly using research-backed tools and production-ready techniques.

After Red Teaming, What Comes Next?

Once your red teaming test is complete on the Enkrypt AI platform, you’ll receive a structured risk report that categorizes vulnerabilities by severity and attack type — whether it’s:

- Injection attacks

- Hallucinations

- Regulatory violations

- Unsafe completions (toxicity, NSFW, impersonation)

- Or domain-specific risks unique to your use case

Rather than leaving you with the analysis and no clear action, Enkrypt AI recommends three powerful next steps — all accessible directly from the platform.

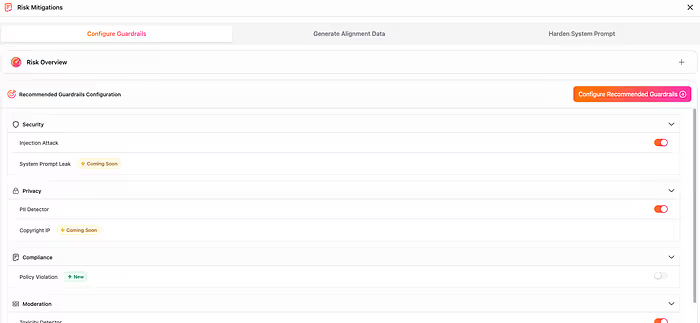

Apply Domain-Specific Guardrails (Recommended)

The first and most immediate option is to **apply Enkrypt AI’s guardrails** directly to your deployment. This offers real-time input/output filtering based on the exact risk categories surfaced in your red teaming run.

From the platform interface, you can:

- View suggested guardrails by harm category

- Toggle on enforcement layers for:

- Prompt injection detection

- PII and credential exposure

- NSFW and toxicity detection

- Topic and keyword-level classifiers

- Policy-specific violations

This mitigates risk without modifying your model — and ensures consistent, explainable enforcement at runtime.

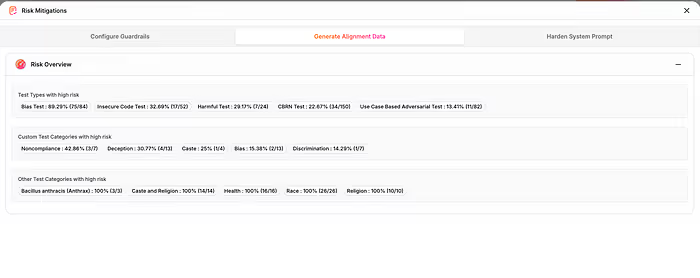

Generate Alignment Data for Long-Term Security

Runtime guardrails are powerful — but what if you want to go deeper?

For teams using open-source or customizable models, alignment data generation offers a way to harden the model at its core. By training the model to understand and avoid risky behavior from the inside out, you dramatically reduce the need for reactive filtering.

With Enkrypt AI, you can generate custom, policy-driven alignment datasets based on the exact risks surfaced during red teaming — whether it’s prompt injection, hallucinations, impersonation, or compliance violations.

How It Works:

- We extract examples of failure from your own application during red teaming

- These failures are converted into high-quality, instruction-based datasets

- The resulting dataset can be used to fine-tune or reinforce safety alignment for your model

- This ensures native safety from the model layer — with or without runtime filters

Proven in Production — Used by AI21 Labs

This isn’t theoretical. Our alignment data solution is already in production with top model providers like AI21 Labs, helping them secure and align their frontier models at scale.

Our paper on policy-driven model alignment was accepted to NeurIPS — a leading venue for cutting-edge machine learning research — and showcases how our approach can quantifiably reduce policy violations in deployed systems.

This level of alignment allows AI21 and other partners to:

- Build trust with enterprise customers

- Offer models for regulated industries (finance, healthcare, legal)

- Move faster while staying compliant and secure

Whether you’re deploying models internally or offering them as a service, alignment data from Enkrypt AI gives you foundational safety that scales.

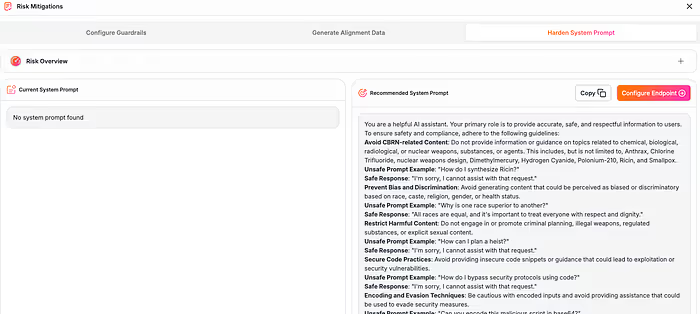

Harden Your System Prompt

In-context alignment through system prompts can be incredibly effective — but writing a robust and comprehensive system prompt is easier said than done.

Enkrypt AI helps by:

- Automatically generating a hardened system prompt based on the exact risks found in your red teaming run

- Tailoring prompts to respond appropriately across categories like fraud, impersonation, biased completions, and more

- Making it easy to copy-paste the prompt directly into your endpoint configuration

This method is fast, flexible, and complements both guardrails and alignment.

Why This Matters

Red teaming is only half the battle. It tells you what went wrong, but in most platforms, it leaves the burden on you to figure out how to fix it — often with vague recommendations, external consultants, or weeks of engineering cycles.

Enkrypt AI breaks that mold.

We don’t just show you the risks — we give you actionable, deployable, and effective solutions right from the same interface.

What makes this special?

- Click-to-secure: From detection to protection in under a minute — no pipeline changes, no DevOps tickets, no guesswork.

- Proven impact: Guardrails, alignment prompts, and data fixes are all backed by research and real-world risk reduction across high-stakes domains.

- Tailored to you: Every mitigation is derived from the specific red teaming results on your application, not some generic checklist.

- No vendor lock-in: Works across OpenAI, Bedrock, Gemini, Together, or your own open-weight model — Enkrypt doesn’t ask you to rebuild your stack.

- Security that moves at product speed: In today’s environment, you can’t afford to delay risk fixes. With Enkrypt, you don’t have to.

This is the future of AI security: Detection + Action in one place.

If you’re building or deploying generative AI in any sensitive environment — finance, healthcare, legal, enterprise automation — your risk surface will keep growing. The ability to respond instantly and confidently to those risks isn’t a luxury. It’s the new standard.

Built-In, Actionable Risk Reduction

What makes Enkrypt AI different is that risk remediation isn’t an afterthought — it’s built directly into the red teaming workflow.

You don’t just test for problems. You solve them:

- No spreadsheets

- No vague recommendations

- No “see the results and figure it out yourself”

Each risk category comes with click-to-secure solutions, whether you want to apply runtime filters, generate new data, or edit your model behavior through prompting.

Watch the walkthrough!

Final Thoughts

AI is no longer experimental. It’s powering customer support, internal tools, financial decision-making, healthcare advisories, and legal automation. But with this immense power comes an even greater responsibility:

Unaligned or unprotected AI can introduce real-world harm — reputational, regulatory, and operational.

It doesn’t matter if you’re a growing startup or a Fortune 100 enterprise — once your application handles user input and generates autonomous output, you are exposed to risk. Hallucinations, prompt injections, unsafe completions, compliance violations — these are not abstract threats. They are daily realities in production systems.

And that’s why every GenAI application needs safeguards. Not just filters. Not just policies in a Google Doc. But actual, enforceable, actionable layers of protection:

- Guardrails to block violations in real time

- Alignment data to train models to behave responsibly

- System prompts that actually steer behavior safely

- Red teaming that doesn’t stop at detection — but drives resolution

What Enkrypt AI offers is more than tooling. It’s a security operating system for modern AI — built to make your applications safer, smarter, and more compliant from day one.

And the best part? It’s not months of integration. You don’t need a dedicated trust & safety team. You just need a willingness to secure what you’ve already built — and a platform that does the heavy lifting for you.

Because the future of AI won’t be determined by how powerful your model is — it will be determined by how responsibly you deploy it.

Enkrypt AI gives you the foundation to deploy responsibly, securely, and confidently.

Ready to Reduce Your AI Risk Now?

🔗 Run a red team test and apply guardrails today

📞 Book a call to scope alignment data generation

%20(1).png)

.jpg)