Scaling AI with Trust: Why Healthcare Payers Need Enkrypt AI as Their Safety, Security, and Compliance Control Plane

The Coming AI Reckoning in Healthcare Payers

Healthcare payers are entering an era where AI is no longer a pilot; it is in production. Large language models (LLMs) and intelligent agents are now embedded across claims adjudication, utilization management, member engagement, and care analytics. The benefits are substantial: lower admin costs, faster decisions, better member experience, and improved cost-of-care accuracy. But the exposure is equally large. When AI makes or influences coverage decisions, member communications, or partner workflows, the risk shifts from technical to existential. A hallucinated prior authorization denial or an incorrect benefit explanation can escalate from a member complaint to a CMS audit or class-action exposure. That’s why every payer must now treat trust and compliance as core features of its AI architecture.

Where Risk Hides: Internal vs. External AI Use Cases

AI risk hides in plain sight in both back-office automation and member-facing systems.

Internal Use Cases:

External Use Cases:

When AI interacts with members or providers, the stakes multiply. A single hallucinated message, such as “this service is not covered,” can trigger both member distress and regulator intervention.

The Four Pillars of Responsible Scale

Every AI deployment in healthcare must balance innovation and accountability across four guardrails: Risk, Safety, Security, and Compliance.

- Risk: Identify and score AI-specific threats—hallucinations, bias, leakage, model inversion.

- Safety: Protect members and providers from harmful outputs; enforce human review in prior authorization or benefits queries.

- Security: Shield PHI at the model layer with input/output sanitization and contextual access control.

- Compliance: Meet HIPAA, HITECH, CMS, and EU AI Act obligations through continuous monitoring and audit trails.

Enkrypt AI: The Control Plane for Safe AI

Traditional controls stop at data and network perimeters. Enkrypt AI operates in the intelligence layer, where the real AI risks emerge. It acts as a control plane that governs how every agent, prompt, and model behaves, ensuring that trust, safety, and auditability are enforced at runtime.

Key Capabilities:

- Input/Output Filtering: Prevents prompt injection, data leakage, and non-compliant completions.

- Policy Engine: Translates payer policies into enforceable runtime logic.

- Red-Teaming Simulation: Continuously stress-tests AI for adversarial inputs and bias.

- Audit & Traceability: Maintains immutable logs for internal and external audits.

- Multi-Layer Security: Integrates with IAM, SOC 2, and HIPAA controls across cloud environments.
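
To make the audit and traceability capability concrete, one generic way to build a tamper-evident log is hash chaining, where each entry commits to the hash of the entry before it. The sketch below illustrates that general technique only; it is not a description of how Enkrypt AI stores logs, and the `AuditLog` class and its event fields are hypothetical names chosen for illustration.

```python
import hashlib
import json


class AuditLog:
    """Append-only log; each entry commits to the previous entry's hash.

    Hypothetical illustration of hash chaining, not a vendor API.
    """

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    @staticmethod
    def _digest(prev_hash: str, event: dict) -> str:
        # Serialize with sorted keys so the same event always hashes the same way.
        payload = json.dumps(event, sort_keys=True)
        return hashlib.sha256((prev_hash + payload).encode("utf-8")).hexdigest()

    def append(self, event: dict) -> str:
        prev_hash = self.entries[-1]["hash"] if self.entries else self.GENESIS
        entry_hash = self._digest(prev_hash, event)
        self.entries.append({"event": event, "prev": prev_hash, "hash": entry_hash})
        return entry_hash

    def verify(self) -> bool:
        """Recompute the chain; any edited or reordered entry breaks it."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            expected = self._digest(prev_hash, entry["event"])
            if entry["prev"] != prev_hash or entry["hash"] != expected:
                return False
            prev_hash = entry["hash"]
        return True
```

Under this scheme an auditor can call `verify()` to confirm that no recorded interaction was altered after the fact. A production system would also need durable storage and protection of the most recent hash, which this sketch leaves out.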

For prior authorization, Enkrypt’s policy engine can:

- Enforce “human-in-the-loop required” rules for borderline cases.

- Validate model recommendations against regulatory coverage criteria.

- Prevent agents from denying authorization unless verified by a licensed reviewer.
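
As an illustration of how the first and third rules above might be written as machine-enforceable logic, here is a minimal sketch. It assumes a model that reports a confidence score and treats low-confidence cases as "borderline"; the `Recommendation` type, the `enforce_prior_auth_policy` function, and the 0.9 threshold are all hypothetical and do not represent Enkrypt AI's policy syntax. Validation against regulatory coverage criteria is not modeled here.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Recommendation:
    decision: str                      # "approve" or "deny"
    confidence: float                  # model-reported score in [0, 1] (assumed)
    reviewer_id: Optional[str] = None  # licensed reviewer who signed off, if any


def enforce_prior_auth_policy(rec: Recommendation, borderline_below: float = 0.9) -> str:
    """Return the action the agent is allowed to take for this recommendation."""
    if rec.decision not in ("approve", "deny"):
        raise ValueError(f"unknown decision: {rec.decision!r}")

    # Rule: an agent may never issue a denial without a licensed reviewer.
    if rec.decision == "deny" and rec.reviewer_id is None:
        return "route_to_human_review"

    # Rule: borderline (low-confidence) cases require a human in the loop.
    if rec.confidence < borderline_below and rec.reviewer_id is None:
        return "route_to_human_review"

    return rec.decision
```

For example, `enforce_prior_auth_policy(Recommendation("deny", 0.99))` returns `"route_to_human_review"`, because no reviewer is attached, while the same recommendation with `reviewer_id="RN-1"` returns `"deny"`.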

For member benefits, it can:

- Detect and block inaccurate or non-compliant explanations.

- Filter prohibited disclosures of PHI.

- Record every interaction for downstream audit, a crucial safeguard when CMS oversight is involved.
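
A simple way to picture the PHI filtering step is an output filter that redacts known identifier patterns before a response reaches the member. The sketch below is a deliberately minimal, pattern-based example. The `MBR-` member ID format and the `filter_output` function are assumptions made for illustration, and real PHI detection needs far broader coverage than two regular expressions.

```python
import re

# Illustrative patterns only; the member ID format is hypothetical.
PHI_PATTERNS = {
    "ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "member_id": re.compile(r"\bMBR-\d{8}\b"),
}


def filter_output(text: str):
    """Redact matches and return (clean_text, labels_of_patterns_that_matched)."""
    findings = []
    for label, pattern in PHI_PATTERNS.items():
        text, count = pattern.subn(f"[REDACTED {label.upper()}]", text)
        if count:
            findings.append(label)
    return text, findings


clean, findings = filter_output("Your ID MBR-12345678 and SSN 123-45-6789 are on file.")
print(clean)     # Your ID [REDACTED MEMBER_ID] and SSN [REDACTED SSN] are on file.
print(findings)  # ['ssn', 'member_id']
```

The returned `findings` list is the kind of detail that could be written to the audit record alongside the interaction itself.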

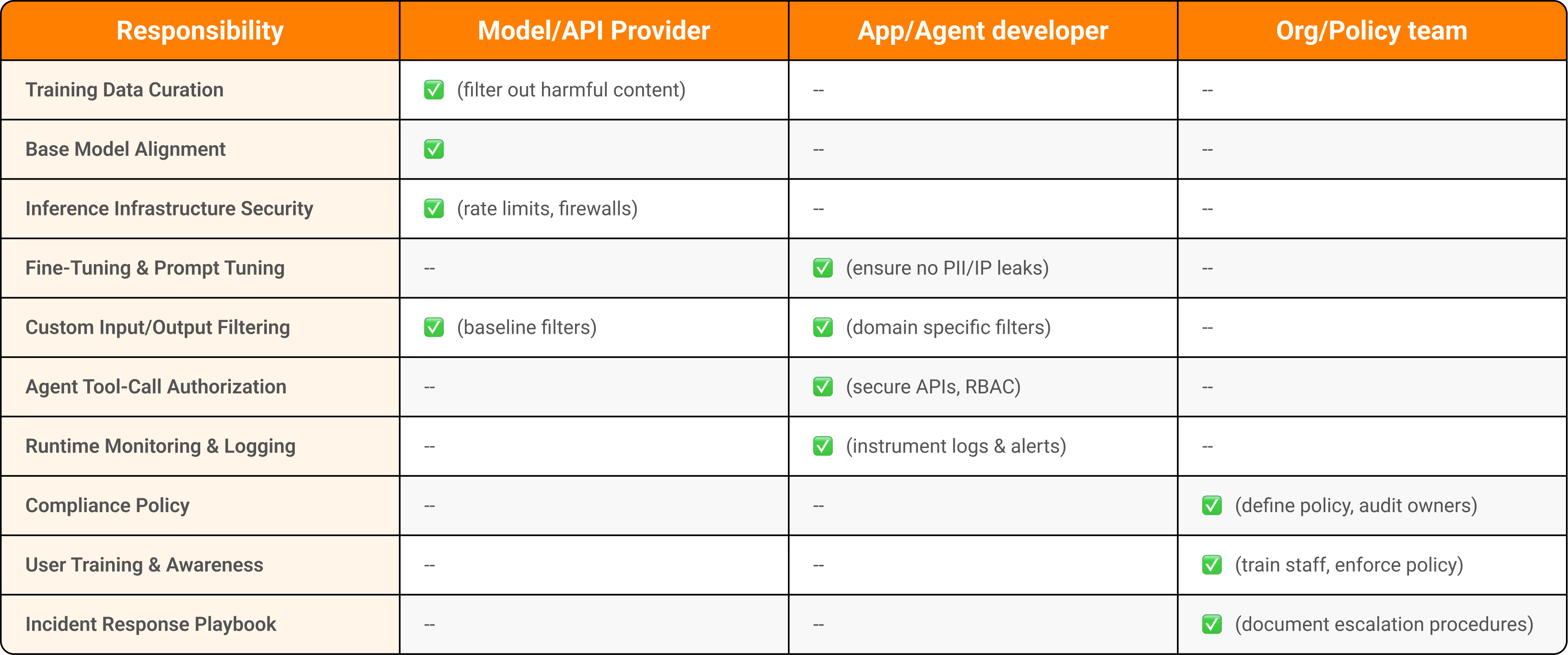

The Shared Responsibility Model: Clarifying Accountability

Payers often ask: “Who’s accountable when an AI model goes wrong?”

Enkrypt answers that with its Shared Responsibility Model, a framework that clearly defines ownership across the AI lifecycle:

“Responsibility is shared; accountability always lands with the enterprise.” — Enkrypt AI

In prior authorization workflows, for example:

- The foundation model may interpret requests.

- The agent applies payer rules.

- But the payer organization is accountable for ensuring human review and compliance logs exist.

This model ensures that even as AI agents scale, accountability remains traceable and defensible.

Figure 1: A mapping of who “owns” each core task, from data curation through user training.

From Compliance Burden to Competitive Differentiation

Payers that view compliance as bureaucracy will stall. Those that make it programmable will lead.

- Regulators reward transparency.

- Providers value fairness in authorization and contracting.

- Members reward accuracy and clarity.

- Boards prize operational resilience.

With Enkrypt AI, compliance becomes an accelerator, not an anchor. It reduces risk while enabling faster rollout of sensitive workflows like prior auth and benefits explanation under full visibility and control.

The Architecture of Trust

A simple, scalable structure:

- AI Systems Layer: Models and agents embedded in claims, benefits, and care.

- Enkrypt AI Control Plane: Policy engine, red-teaming, runtime safety enforcement.

- Governance Layer: Compliance dashboards, audits, and regulatory alignment (HIPAA, CMS, EU AI Act).

This closed-loop architecture (detect → enforce → audit → improve) is how trust is operationalized in AI-driven healthcare systems.

Seven Steps to De-Risk and Scale AI

- Inventory AI Workflows — especially prior authorization and member-facing agents.

- Classify Risk by Sensitivity — clinical vs. operational impact.

- Embed Enkrypt Guardrails for all high-risk functions.

- Codify Governance Rules as machine-enforceable policies.

- Run Continuous Red-Teaming before each model update.

- Automate Compliance Evidence for HIPAA/CMS alignment.

- Create a Trust Council to review outcomes quarterly.
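
The first three steps (inventory, classify, embed guardrails) can be sketched as a small data model. The example below assumes a two-tier scheme in which any workflow with clinical impact or direct member contact is treated as high risk; that rule, the `Workflow` type, and the function names are illustrative assumptions, and each payer would define its own criteria.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Workflow:
    name: str
    clinical_impact: bool  # influences coverage or care decisions
    member_facing: bool    # communicates directly with members or providers


def risk_tier(wf: Workflow) -> str:
    """Assumed rule: clinical impact or member contact means high risk."""
    return "high" if (wf.clinical_impact or wf.member_facing) else "standard"


def high_risk_workflows(inventory: List[Workflow]) -> List[str]:
    """Names of workflows that should sit behind guardrails first."""
    return [wf.name for wf in inventory if risk_tier(wf) == "high"]


inventory = [
    Workflow("prior_authorization", clinical_impact=True, member_facing=False),
    Workflow("benefits_chatbot", clinical_impact=False, member_facing=True),
    Workflow("invoice_routing", clinical_impact=False, member_facing=False),
]
print(high_risk_workflows(inventory))  # ['prior_authorization', 'benefits_chatbot']
```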

Regulators are no longer asking, “Do you use AI?” They’re asking, “Can you prove it’s safe, fair, and compliant?” With Enkrypt AI, you can.

Summary

AI’s promise in healthcare is too great to leave to chance. But scaling without governance is scaling without guardrails. Enkrypt AI turns compliance into code, trust into architecture, and safety into strategy. For healthcare payers, it’s not just a security layer; it’s the operating system for responsible scale.
