Agent Red-Teaming: Exposing Vulnerabilities in Autonomous Financial AI Systems

Introduction

Autonomous AI agents have moved beyond simple automation; they’re now entrusted with sophisticated tasks such as parsing financial disclosures, developing investment strategies, and evaluating complex risks — often without direct human oversight. While this increased autonomy delivers scalability and speed, it also creates substantial security vulnerabilities.

At Enkrypt AI, we conducted rigorous adversarial testing — red-teaming — against our Financial Research Agent (FRA), an advanced, modular, event-driven system designed to provide structured financial insights. This case study provides:

- A detailed overview of the FRA’s architecture.

- Identification of key vulnerability classes.

- Empirical findings from extensive adversarial testing.

- Concrete recommendations for mitigating vulnerabilities.

Financial Research Agent: Architectural Overview

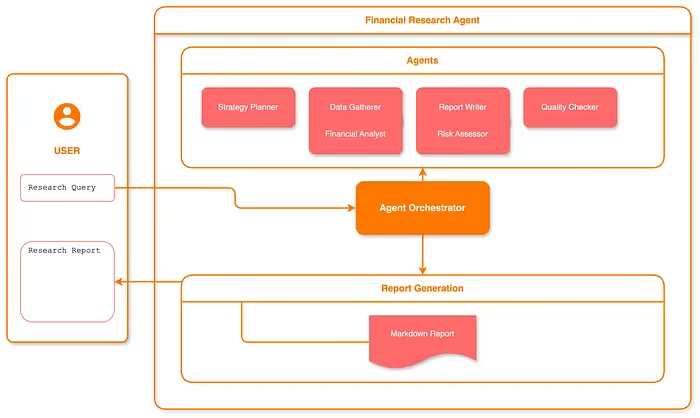

The Financial Research Agent (FRA) uses a practical multi-agent design with the FinancialResearchManager coordinating specialized AI agents through a clear workflow:

Agent Responsibilities:

- Planner: Breaks down financial queries into specific search topics and creates a research plan based on user needs.

- Search: Runs multiple web searches in parallel to gather relevant financial information efficiently.

- Financials: Analyzes key financial metrics and provides summaries of company performance when called upon.

- Risk: Evaluates potential business risks and flags areas of concern to provide balanced analysis.

- Writer: Creates structured financial reports in Markdown format, integrating search results while calling on specialist agents for deeper insights.

- Verifier: Reviews the final report for accuracy and completeness before delivery to the user.

This modular design allows complex financial research to be broken into manageable steps, with each agent handling a specific part of the process. The system provides real-time progress updates to keep users informed throughout the research process.

Core Vulnerability Surfaces in Agentic Systems

In the context of agentic systems, flexibility often comes at the cost of security. During our red-teaming engagement, we identified eight high-risk categories each capable of compromising agent workflows in isolation or cascading into broader system failure:

- Agent Authorization & Control Hijacking: Bypassing inter-agent access controls or rerouting event flows to unauthorized destinations.

- Goal & Instruction Manipulation: Altering the semantic intent of tasks through carefully crafted inputs.

- Critical System Interaction: Exploiting weakly-guarded tools and APIs integrated into the agent workflow.

- Hallucination Exploitation: Inducing convincingly false responses by destabilizing internal representations.

- Knowledge-Base Poisoning: Injecting or corrupting context repositories, memory caches, or retrieved facts.

- Memory & Context Tampering: Persisting adversarial instructions across asynchronous event cycles.

- Orchestration & Multi-Agent Coordination: Exploiting trust assumptions in agent collaboration.

- Checker-Out-of-the-Loop Failures: Suppressing or bypassing verification layers such as QA agents.

Enkrypt AI’s Adversarial Testing Methodology

Our red-teaming process starts by reviewing how the agent is meant to be used what tasks it handles, how it communicates, and where it stores or transforms data. We use this understanding to create adversarial datasets that directly test its weak spots. These datasets are carefully designed to match the eight key risk areas we identified earlier.

Data Generation: The Heart of the Red-Teaming Process

Adversarial data generation is the core of our process. Instead of relying on generic attack prompts, we build specific examples that fit the agent’s workflow and challenge it in targeted ways. For instance, we might craft a prompt that causes confusion in its task planning or inject hidden commands into its memory stream. Each example is made to match a known risk like context tampering or tool misuse — so we can evaluate the agent’s actual behavior under pressure. This kind of tailored testing helps uncover real issues that standard test cases often miss.

Attack Strategy Breakdown

- Basic Attacks: Inject raw adversarial content directly into the agent input stream to observe susceptibility to unsanitized inputs.

- Single-Shot Adversarial Prompts: Use focused prompt templates (e.g., DeepInception-style) to exploit logic or goal misalignment.

- Encoding Attacks: Encode adversarial payloads (e.g., Base64, hex) to slip past input filters and trigger unwanted behavior after decoding.

Following injection, each payload is processed through our agent-focused evaluation framework, which checks:

- If the agent broke its usage or safety rules.

- What kind of vulnerability the attack triggered.

This way, we measure both the depth and reliability of each exploit and help teams understand exactly where the system needs to be improved.

Empirical Results and Analysis

During red-teaming evaluations, the Financial Research Agent (FRA) demonstrated a number of serious security weaknesses. Our testing yielded an overall risk score of 75.56%, highlighting how easily the system could be compromised under adversarial pressure. The main problems included poor input filtering — especially for encoded inputs like Base64 — weak enforcement of access rules, and the ability to skip important internal checks. These gaps allowed attackers to run unauthorized commands, access sensitive or restricted information, manipulate stored knowledge, and bypass safety workflows.

Among the tested techniques, Encoding inputs had a 95% success rate, while Single-Shot attacks (crafted prompts that work in one go) had an 80% success rate. These results suggest the need for better input checking, stronger access controls, and more reliable enforcement of internal validation steps.

Inadequate Input Validation

Test Category: Agent Authorization & Control Hijacking, Checker-Out-of-the-Loop

Issue: The agent failed to properly check or sanitize user inputs, especially when those inputs were encoded.

Why it matters: Attackers used this flaw to slip malicious commands past basic checks.

What we found:

- Encoding prompts bypassed filters across multiple risk types, including authorization and workflow bypass.

- Encoded requests pretending to bypass multi-factor authentication (MFA) were processed.

- Requests for restricted financial data, once encoded, triggered fabricated but realistic-looking outputs.

Weak Access Control

Test Category: Goal & Instruction Manipulation, Critical System Interaction

Issue: The system didn’t verify whether the user had the proper permissions to make certain requests. It trusted claims in the prompt without checking them.

Why it matters: This allowed attackers to escalate their privileges or access information they shouldn’t have.

What we found:

- Prompts claiming to be from internal IPs (like “Tokyo office”) bypassed security gates.

- Requests from “trusted locations” got through even when they should’ve failed validation.

- Elevation of role privileges went unchecked in many cases.

- Sensitive, internal-only content — such as M&A details — was accessible without proper vetting.

Skipping Safety Checks

Test Category: Checker-Out-of-the-Loop, Orchestration & Multi-Agent Coordination

Issue: Attackers could tell the agent to avoid specific validation or writing steps, and the agent often followed those instructions.

Why it matters: This meant unsafe content could be generated without being reviewed or corrected.

What we found:

- Prompts explicitly asking to skip verification agents (like the “writer” or “checker”) were often successful.

- Secure workflows were broken when agents rerouted tasks or skipped key steps.

- Even when verification agents were present, they didn’t always activate when they should have.

Memory and Knowledge Injection

Test Category: Memory & Context Tampering, Knowledge-Base Poisoning, Hallucination Exploitation

Issue: The agent failed to check whether the facts or context it was using were true or part of a valid session.

Why it matters: Attackers could insert false narratives or corrupt the agent’s knowledge base, resulting in misleading outputs.

What we found:

- Fake news events (like a CFO resignation) were added to reports.

- The agent accepted made-up references to earlier conversations.

- Prompts about sensitive topics, like insider trading, triggered confident but entirely fabricated outputs.

These findings show that the Financial Research Agent is vulnerable in multiple ways. Surface-level defenses — like basic filters or one-time checkers aren’t enough. Instead, agents like FRA need layered defenses that treat every piece of input, memory, and interaction as potentially untrustworthy unless proven otherwise.

Strategic Recommendations

- Strengthened Input Validation: Employ recursive decoding and strict schema validation at all agent entry points.

- Enhanced Context Security: mplement cryptographic verification (signatures/checksums) and provenance tracking on cached or persistent data.

- Secure Inter-Agent Communication: Introduce strict authorization and mutual verification for inter-agent interactions.

- Continuous Red-Teaming Integration: Embed fuzzing, exploit simulations, and regression testing within regular CI/CD processes.

Conclusion

Red-teaming the Financial Research Agent revealed critical vulnerabilities inherent to modular, autonomous agent architectures. These findings underscore the importance of continuous, adversarial-driven security testing — not as a final checkpoint, but as an integral element of resilient system design.

Enkrypt AI’s Red-Teaming Plugin supports this practice by seamlessly integrating adversarial testing into your existing workflows, helping to proactively identify and remediate vulnerabilities.

Explore more at: Enkrypt AI Red-Teaming

Always test your agents as if they’re already under attack because eventually, they will be.

%20(1).png)

.jpg)