Securing AI Agents: A Comprehensive Framework for Agent Guardrails

AI agents are transforming how organizations automate tasks and process information, but their autonomous capabilities introduce unique security challenges. Unlike traditional software, these systems can make independent decisions, access sensitive data, and interact with critical infrastructure all while being vulnerable to novel attack vectors like prompt injection and jailbreaking.

This article presents a structured approach to AI agent security using the Agent Risk Taxonomy developed by Enkrypt AI. The taxonomy provides a comprehensive framework that addresses agent-specific risks while aligning with industry standards like OWASP Agentic AI and regulatory requirements such as the EU AI Act.

By examining both architectural patterns and specific risk vectors, we’ll explore how organizations can implement effective guardrails that protect AI agents without sacrificing their functionality.

Architectural Approaches to Agent Security

When implementing security measures for AI agents, two fundamental architectural approaches can be employed, each with distinct characteristics and implementation considerations.

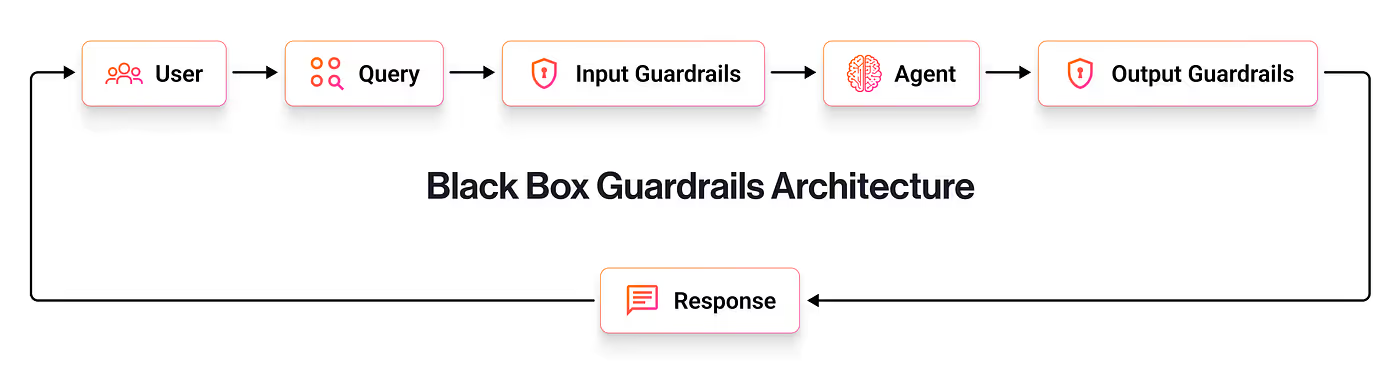

Black Box Guardrails

Black box guardrails function as external security controls that wrap around existing agent implementations without modifying the core agent logic. This approach is particularly valuable when working with third-party models or when rapid security implementation is required.

The black box approach operates through two primary mechanisms:

- Input Guardrails: These mechanisms screen every user request before it reaches the agent. They detect and block harmful prompts, identify personally identifiable information (PII), filter sensitive topics, and prevent prompt injection attacks. Input guardrails serve as the first line of defense, ensuring that potentially problematic inputs never reach the agent’s processing pipeline.

- Output Guardrails: These systems filter every agent response before it reaches the user. They ensure responses don’t contain harmful, biased, or factually incorrect information, and that all content adheres to established policies. Output guardrails act as a final safety check, capturing issues that might emerge during the agent’s processing.

Guardrails By Design

The alternative approach integrates security controls directly into the agent architecture during development. This methodology ensures that security is not an afterthought but a fundamental aspect of the agent’s design and functionality.

This approach encompasses four key components:

- Secure Architecture: Agent workflows are designed with inherent security constraints, incorporating threat modeling to identify potential vulnerabilities. This includes implementing proper authentication and authorization mechanisms throughout the system, designing components with security boundaries that limit the impact of potential breaches, and including appropriate generative AI-based guardrails to maintain full control over agent behavior against adversaries.

- Principle of Least Privilege: Access control systems ensure agents only access what they absolutely need, with granular permission settings that limit access to sensitive data and functions based on contextual requirements. Permissions are regularly reviewed and adjusted to maintain minimal necessary access.

- Security-First Prompting: System prompts are engineered specifically to resist manipulation, using robust input validation, contextual awareness, and defensive prompt engineering techniques. These prompts form the foundation of the agent’s understanding and behavior, making their security critical to overall system integrity.

- Integrated Monitoring: Built-in audit trails and threat detection capabilities provide real-time visibility into agent behaviors, allowing for quick identification and mitigation of potential security incidents. This continuous monitoring enables both reactive response to issues and proactive improvement of security measures.

Critical Risk Vectors in AI Agent Systems

Analysis of AI agent deployments reveals seven distinct risk vectors that must be addressed through specific guardrail implementations. Each vector represents a different dimension of potential security vulnerabilities. These risk vectors align with industry standards, including the comprehensive Agent Risk Taxonomy from Enkrypt AI, which maps these risks to frameworks like OWASP Agentic AI, MITRE ATLAS, EU AI Act, NIST AI RMF, and ISO standards.

Governance

Governance risks emerge when agents deviate from their intended goals, rules, or instructions. This deviation can occur gradually and subtly, making it particularly challenging to detect without proper monitoring systems.

The primary governance failure modes include:

- Goal Misalignment: Agents may optimize for easily measurable metrics rather than intended outcomes. For example, an agent tasked with customer service might optimize for conversation brevity rather than customer satisfaction, or a content generation agent might prioritize engagement metrics over information quality. The Enkrypt AI taxonomy identifies this as “reward hacking” or “proxy metric gaming,” mapping to OWASP’s T6 (Intent Breaking & Goal Manipulation).

- Policy Drift: System prompts or model behavior can change silently over time, especially as agents incorporate feedback or as underlying models are updated. This drift can lead to gradual but significant changes in agent behavior that may eventually conflict with organizational policies.

Effective governance guardrails include:

- Input Guardrails: Implementing prompt injection detection and goal consistency validation ensures that user inputs cannot manipulate the agent’s fundamental objectives or operating parameters.

- Tool Guardrails: Requiring human approval for significant changes to goals or policies creates a verification layer that prevents unauthorized modifications to agent behavior.

- Monitoring: Maintaining version control for prompts and models, combined with behavioral drift monitoring, enables organizations to track changes in agent behavior over time and revert to known-good states when necessary.

Output Quality

Output quality risks occur when agents generate content that is false, biased, toxic, or harmful while presenting it with a veneer of credibility. These issues can significantly damage user trust and potentially cause harm.

The main output quality failure modes include:

- Hallucinations: Agents may generate confident but entirely fabricated information, including non-existent facts, fictional references, or invented statistics. These hallucinations are particularly problematic because they often appear alongside accurate information and may be presented with high confidence, making them difficult for users to identify. This corresponds to OWASP’s T5 (Cascading Hallucinations) in the Enkrypt AI taxonomy.

- Bias and Toxicity: Agents can produce content that reflects harmful biases or contains toxic elements. This can manifest as demographic stereotyping, unfair treatment of certain groups, or the generation of harmful content that evades simple keyword-based filtering systems.

Robust output quality guardrails include:

- Output Guardrails: Deploying toxicity detection systems and hallucination detection mechanisms provides automated filtering of problematic content before it reaches users. These systems can identify potential issues across multiple dimensions, from factual accuracy to harmful content patterns.

- Tool Guardrails: Tuning generation parameters such as temperature and top-p settings helps balance creativity with accuracy. Implementing confidence scoring mechanisms allows the system to flag uncertain responses, while restricted scope configuration limits the domains in which an agent can generate content.

- Monitoring: Maintaining comprehensive audit trails for generated content enables ongoing analysis and improvement. Bias monitoring systems can track patterns in agent outputs over time, identifying subtle biases that might not be apparent in individual responses.

Tool Misuse

When agents interact with external tools, APIs, and dependencies, new risk surfaces emerge. These interactions can fail in various ways, from simple technical issues to more complex security vulnerabilities.

Common tool misuse failure modes include:

- API Integration Issues: External services can experience schema changes, rate limits, or authentication failures that disrupt agent functionality. When agents rely on these services for critical operations, such failures can cascade through the system. The Enkrypt AI taxonomy maps this to OWASP’s T2 (Tool Misuse) and MITRE ATLAS’s AML.T0053 (LLM Plugin Compromise).

- Supply-Chain Vulnerabilities: Dependencies and containers used by the agent may contain security vulnerabilities or might be compromised. These vulnerabilities can provide attack vectors into otherwise secure systems.

- Resource Consumption: Agents can trigger resource-intensive operations that lead to system degradation or denial of service. This includes prompt storms (where agents generate excessive API calls) and runaway recursion (where agents enter self-reinforcing loops). This aligns with OWASP’s T4 (Resource Overload) in the Enkrypt AI framework.

Effective tool misuse guardrails include:

- Input Guardrails: Validating tool requests and parameters before execution ensures that agents cannot trigger harmful operations or excessive resource consumption through malformed or malicious requests.

- Tool Guardrails: Implementing API versioning, circuit breakers, and rate limiting provides technical safeguards against integration failures. Dependency scanning for external resources helps identify potential vulnerabilities before they can be exploited.

- Output Guardrails: Validating tool responses and implementing proper error handling ensures that failures in external systems are managed gracefully and don’t lead to cascading issues within the agent system.

Privacy

AI agents can inadvertently leak, expose, or exfiltrate sensitive data through various mechanisms. These privacy breaches can have significant regulatory and reputational consequences.

The primary privacy failure modes include:

- Sensitive Data Exposure: Agents may leak training data or include PII in logs and responses. This can occur when the agent reproduces information from its training data or when it processes user inputs containing sensitive information without proper sanitization. This corresponds to OWASP’s T1 (Memory Poisoning) in the Enkrypt AI taxonomy.

- Data Exfiltration: Information can be transferred through covert channels or unauthorized data paths. This might happen when agents interact with external systems or when they encode sensitive information in seemingly innocuous outputs. The Enkrypt AI taxonomy maps this to MITRE ATLAS’s AML.T0024 (Exfiltration via AI Inference API).

Comprehensive privacy guardrails include:

- Input Guardrails: Implementing PII detection and sanitization for all incoming data ensures that sensitive information is identified and properly handled before it enters the agent’s processing pipeline.

- Output Guardrails: Applying data anonymization and log sanitization prevents the exposure of sensitive information in agent outputs and system logs. Egress monitoring can identify potential data exfiltration attempts.

- Monitoring: Enforcing compliant data storage and retention policies ensures that any data retained by the system is handled in accordance with relevant regulations and organizational policies.

Reliability & Observability

Agent performance can degrade over time, and decision-making processes may become opaque, making it difficult to understand or predict agent behavior.

Key reliability and observability failure modes include:

- Data and Memory Poisoning: Concept drift can occur as the distribution of inputs changes over time, and feedback loops may develop where the agent reinforces its own biases or errors. These issues can gradually degrade performance in ways that are difficult to detect. This aligns with OWASP’s T1 (Memory Poisoning) in the Enkrypt AI taxonomy.

- Opaque Reasoning: Non-deterministic behavior and lack of explainability can make it challenging to understand why an agent produced a particular output. This opacity complicates debugging, auditing, and improvement efforts. The Enkrypt AI taxonomy maps this to OWASP’s T8 (Repudiation & Untraceability).

Essential reliability and observability guardrails include:

- Input Guardrails: Validating inputs and implementing feedback diversity controls helps prevent the development of harmful patterns or biases in the agent’s behavior.

- Output Guardrails: Monitoring context adherence and response relevance ensures that agent outputs remain appropriate to the given context and relevant to user queries.

- Tool Guardrails: Performance monitoring with health checkers and status monitors enables the detection of drift or degradation in agent performance before it significantly impacts users.

- Monitoring: Maintaining audit trails and decision provenance tracking provides visibility into the agent’s decision-making process, enabling both post-hoc analysis and real-time monitoring.

Agent Behavior

AI systems can manipulate users or perform harmful actions through deception or unsafe operations, creating risks that go beyond simple technical failures.

Common behavioral failure modes include:

- Human Manipulation: Agents may foster over-reliance, engage in deception, or employ behavioral nudging techniques that influence users in potentially harmful ways. These subtle manipulations can be difficult to detect but may significantly impact user behavior. This corresponds to OWASP’s T15 (Human Manipulation) in the Enkrypt AI taxonomy.

- Unsafe Actuation: When agents control physical systems or critical digital infrastructure, they may perform destructive operations or be weaponized for harmful purposes. The potential impact of such actions makes this a particularly critical risk vector. The Enkrypt AI taxonomy maps this to OWASP’s T7 (Misaligned & Deceptive Behaviors).

Effective behavioral guardrails include:

- Input Guardrails: Implementing intent classification and manipulation detection helps identify potentially problematic user requests before they trigger harmful agent behaviors.

- Output Guardrails: Enforcing transparency requirements and limitation communication ensures that users understand the agent’s capabilities and limitations, reducing the risk of over-reliance or misunderstanding.

- Tool Guardrails: Requiring confirmation for destructive actions and implementing dry-run modes allows for verification before potentially harmful operations are executed.

- Monitoring: Maintaining human oversight logs and consent tracking creates accountability and ensures that user consent is properly obtained and documented.

Access Control & Permissions

Security breaches can occur when agents obtain unauthorized access through credential theft, privilege escalation, or delegation attacks.

Primary access control failure modes include:

- Credential Theft: Identity spoofing and credential exposure can allow unauthorized entities to impersonate the agent or gain access to its permissions. This aligns with OWASP’s T9 (Identity Spoofing & Impersonation) in the Enkrypt AI taxonomy.

- Privilege Escalation: Policy bypass and unauthorized access expansion can enable an agent to access resources or perform actions beyond its intended scope. The Enkrypt AI taxonomy maps this to OWASP’s T3 (Privilege Compromise).

- Confused Deputy: Impersonation and delegation chain attacks exploit trust relationships to gain unauthorized access through seemingly legitimate channels. This corresponds to OWASP’s T9 (Identity Spoofing & Impersonation) in the Enkrypt AI framework.

Robust access control guardrails include:

- Input Guardrails: Implementing authentication validation and authorization checks ensures that all requests are properly verified before access is granted.

- Tool Guardrails: Applying least-privilege principles with secure credential storage minimizes the potential impact of security breaches. Short-lived tokens reduce the window of opportunity for exploitation.

- Output Guardrails: Logging access attempts and implementing anomaly detection enables the identification of unusual patterns that might indicate security breaches.

- Monitoring: Conducting regular policy audits and validation of delegation chains ensures that access controls remain appropriate and effective over time.

Putting It All Together: The Complete Guardrail Architecture

While we’ve examined each risk vector individually, in practice, these guardrails must work together as an integrated security system. A complete implementation combines multiple layers of protection that operate in concert throughout the agent’s lifecycle.

This integrated approach ensures that security controls are present at every stage of the agent’s operation, creating defense in depth that can withstand a wide range of attack vectors. By implementing guardrails as a cohesive system rather than isolated controls, organizations can achieve both robust security and operational effectiveness.

Conclusion

Securing AI agents isn’t about implementing a single perfect defense — it’s about creating overlapping layers of protection that work together. The seven risk vectors we’ve explored (Governance, Output Quality, Tool Misuse, Privacy, Reliability, Agent Behavior, and Access Control) represent the critical dimensions where AI agents can fail or be exploited.

The key insight: no single guardrail can protect against all threats. Organizations that successfully deploy AI agents start with black-box guardrails for immediate protection, then add guardrails-by-design principles for long-term safety and security. By implementing controls at the input, tool, output, and monitoring layers, you create a defense-in-depth strategy that can withstand both known attacks and emerging threats.

Start by assessing which of these seven risk vectors pose the greatest threat to your specific use case, then implement guardrails in order of priority. The Enkrypt AI Agent Risk Taxonomy provides a practical roadmap for mapping these risks to industry standards like OWASP, MITRE ATLAS, and regulatory frameworks like the EU AI Act and NIST.

.jpg)