Enterprise AI Security Framework 2025: Securing LLMs, RAG, and Agentic AI

AI is now at the center of enterprise growth—but with opportunity comes an entirely new threat surface. Naturally, The big question on everyone’s mind is:

How do we secure Large Language Models (LLMs), Retrieval-Augmented Generation (RAG), and agent-based AI systems while meeting new compliance deadlines?

Before we find the exact answer, let's cover the main AI risks, practical defenses, important compliance rules, and a clear 90-day plan to help secure AI systems in a way that’s easy to follow and ready for audits.

What Enterprise AI Security Now Means

Enterprise AI security extends beyond application protection. It requires a layered defense covering governance, runtime, and compliance:

- Models & Prompts: Prevent jailbreaks, hallucinations, and unsafe outputs.

- Data & Retrieval: Secure RAG corpora, PII, and vector DBs with provenance checks.

- Tools & Agents: Control runtime permissions, enforce approvals, and sandbox risky actions.

- Operations: Bake AI security into CI/CD workflows, monitoring, red teaming, and audit evidence.

This approach blends NIST AI Risk Management Framework (AI RMF) functions (Govern, Map, Measure, Manage) with real-world engineering guardrails and compliance-ready documentation.

Top AI Security Threats in 2025

LLM & RAG Security Risks

- Prompt Injection & Jailbreaks – Malicious prompts manipulate AI into leaking sensitive data.

- Insecure Output Handling – Downstream apps accept risky outputs as safe.

- Training Data Poisoning – Adversaries plant malicious content into corpora or training sources.

Use the OWASP Top 10 for LLM Applications as your baseline. Explore more at LLM Safety LeaderBoard.

RAG Security Challenges and Defenses

- Retrieval Poisoning – Small, unnoticed manipulations in knowledge bases mislead AI outputs.

- Control: Apply provenance tracking, integrity validation, and treat RAG corpora as critical infrastructure.

Agentic AI Security Risks

- Over-Permissioned Actions – Agents can escalate permissions, execute unsafe code, or trigger SSRF.

- Mitigation: Apply least-privilege, sandboxing, intent verification, rate limiting, and kill switches.

For adversary behaviors, review MITRE ATLAS techniques for AI threat modeling.

For a detailed breakdown of risks associated with AI agents and autonomous behaviors, see comprehensive agent risk taxonomy.

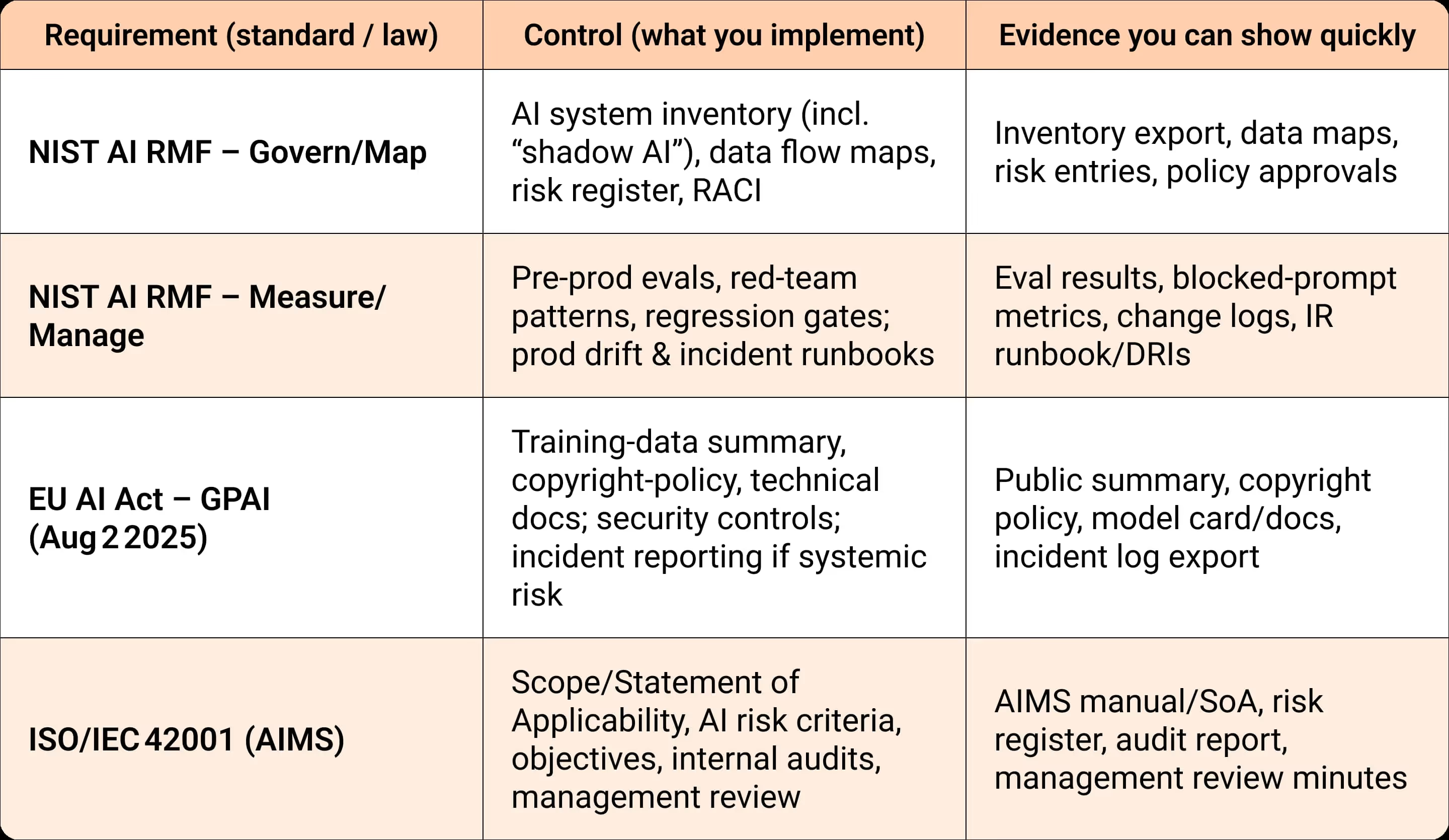

AI Compliance & Regulatory Standards 2025

2025 is the enforcement year for AI compliance:

- NIST AI RMF: Organize programs under Govern, Map, Measure, Manage.

- EU AI Act (effective August 2, 2025): Requires transparency, documentation, copyright compliance, incident readiness, and cyber obligations.

- ISO/IEC 42001 (AI Management Systems): Extends ISO 27001 for AI governance, policies, risk processes, and audits.

Action point: Every compliance requirement → mapped to a control → backed by evidence packs.

AI Security Reference Architectures

LLM & RAG Apps

- Data classification, masking, encryption, and attribute-based access controls (ABAC).

- Retrieval policies: allow/deny lists, provenance tracking, poisoning detection.

- Input/output guardrails against prompt injection.

- Real-time observability: logs, redaction, drift detection.

Agentic AI Runtime

- Scoped API tokens and RBAC/ABAC.

- Intent checks + human approvals for sensitive actions.

- Sandboxed execution and circuit breakers (kill-switches).

- Detailed audit trails linking prompts, data sources, and tool calls.

Explore comprehensive agent runtime policies available through agentic AI runtime controls.

Non-Negotiable AI Security Controls You Must Deploy

- Identity & Access: RBAC/ABAC for models, corpora, and APIs.

- Guardrails: OWASP-aligned defenses against jailbreaks and unsafe outputs.

- Red Teaming: AI-specific test sets in CI/CD pipelines.

- RAG Hardening: Provenance monitoring, poisoning checks, sensitive data segmentation.

- Monitoring: Automated incident detection and response playbooks.

- Evidence Packs: Logs, evaluations, dashboards mapped to NIST/EU/ISO requirements.

The 90-Day AI Security Implementation Plan

Days 0–30: Stabilize

- Inventory AI deployments (including “shadow AI”).

- Classify data and enable redaction/masking.

- Deploy baseline input/output guardrails.

- Launch risk registers mapped to NIST AI RMF.

Days 31–60: Harden

- Enforce ABAC across datasets, corpora, and tools.

- Integrate red teaming and regression checks into CI/CD pipelines.

- Apply provenance tracking and poisoning detection to RAG workflows.

- Define ISO/IEC 42001-scope policies.

Days 61–90: Operationalize

- Add approval workflows and sandboxing for high-risk agent actions.

- Run incident response simulations with AI-specific playbooks.

- Prepare an audit-ready evidence pack for the EU AI Act.

Enterprise Buyer’s Checklist for AI Security

When selecting AI security platforms or partners, evaluate:

- Coverage: LLMs, RAG, agentic AI.

- Guardrails: Jailbreak defense, PII filters.

- Retrieval: Provenance, poisoning detection, ABAC.

- Runtime: Scoped tokens, least-privilege, sandboxing.

- Testing: Red team scenarios, regression evaluations.

- Monitoring: Logs, drift alerts, AI observability.

- Compliance: NIST, EU AI Act, ISO alignment.

- Integrations: AWS, GCP, Azure, enterprise DBs.

- Performance: Low latency, minimal overhead.

- SLA: 24×7 support, dedicated expertise.

How Enkrypt AI Strengthens AI Security

We provides built-in guardrails, RAG security, agent runtime protections, red teaming frameworks, and compliance-aligned evidence packs. Enterprise teams can move faster with confidence knowing every control maps clearly to compliance obligations.

Enterprise AI Security FAQs

What is Enterprise AI Security?

It combines governance and runtime protections for LLMs, RAG, and agents using frameworks like NIST AI RMF and controls such as OWASP guardrails, ABAC, red teaming, and monitoring.

How do you prevent prompt injection and data leakage?

Deploy guardrails, enforce retrieval controls, add red team evaluations, and continuously monitor leakage rates.

What changed on Aug 2, 2025 (EU AI Act)?

Providers of general-purpose AI models must supply transparency documentation, copyright disclosures, and detailed cybersecurity capabilities. Deployers must maintain audit-ready evidence to satisfy regulators.

%20(1).png)

.jpg)