Securing Enterprise AI Agents: How Enkrypt AI Delivers Compliance, Guardrails, and Trust

Introduction: Why Security and Compliance Matter for AI Agents

AI agents are rapidly becoming core to enterprise operations, from powering customer service copilots to streamlining financial workflows and even supporting healthcare decision-making. Unlike traditional automation tools, these systems engage directly with sensitive business and personal data—making security, compliance, and trust foundational requirements rather than optional features.

For enterprises in regulated industries, the stakes are high. Mishandling sensitive information such as personally identifiable information (PII), financial records, or healthcare data can result in regulatory fines, reputational damage, and loss of customer trust. At the same time, businesses face mounting pressure to innovate with AI, creating a delicate balance between speed of adoption and risk management.

This is where Enkrypt AI delivers a competitive advantage. Built with a “Ship Fast, Ship Safe, Stay Ahead” philosophy, Enkrypt provides enterprises with a guardrails-first security platform designed to ensure that AI agents operate securely, responsibly, and in full compliance with organizational and regulatory requirements. By embedding policy enforcement, privacy protection, and real-time monitoring directly into AI workflows, Enkrypt empowers enterprises to adopt AI with confidence—knowing that risks are mitigated before they reach end users.

In this article, we’ll explore the building blocks of enterprise AI agents, the risks they introduce, and how Enkrypt AI’s platform—spanning guardrails, red teaming, policy violation detection, and PII protection—enables organizations to deploy secure and compliant agents at scale.

What Are Enterprise AI Agents, and Where Are They Used?

At their core, AI agents are autonomous or semi-autonomous systems designed to act on behalf of users or organizations. Unlike standalone language models that only generate text, agents can reason, take action, and integrate with enterprise systems, making them far more powerful—and far riskier—than simple chatbots.

For enterprises, these agents often fall into two broad categories:

- Conversational Agents – AI copilots and virtual assistants that interact directly with employees or customers through natural language. Examples include financial service agents helping customers understand loan options, or HR copilots guiding employees through internal policy questions.

- Workflow Agents – Systems embedded in back-office or operational workflows that automate complex, multi-step processes. These may include tax-preparation assistants pulling from sensitive IRS records, healthcare scheduling systems handling patient data, or mortgage agents validating compliance documents.

The adoption of AI agents across industries is accelerating because they improve efficiency, reduce costs, and enable new business models. However, their effectiveness often depends on access to sensitive data: financial histories, patient health information, or proprietary enterprise knowledge bases. This reliance creates unique security and compliance challenges—especially in highly regulated sectors like banking, insurance, and healthcare.

Without strong safeguards, AI agents can expose organizations to:

- Unauthorized data access or leaks of sensitive information.

- Outputs that violate internal policies or regulatory frameworks.

- Integrity issues such as biased decisions, toxic responses, or fabricated (“hallucinated”) results.

To mitigate these risks, enterprises must move beyond generic AI adoption to privacy-first, guardrails-enabled architectures. With Enkrypt AI, businesses can ensure that every agent, whether customer-facing or operational, is protected by **policy enforcement, injection attack prevention, and PII redaction** at every step of the workflow.

The Core Building Blocks of an AI Agent

Modern enterprise AI agents are more than just large language models (LLMs). They combine multiple components—each with unique strengths and risks—that must be carefully secured to operate safely in production environments.

1. The Model

The model forms the reasoning and language foundation of an agent. Whether it’s OpenAI’s GPT, Anthropic’s Claude, or open-source options like LLaMA, the model determines how effectively an agent interprets input, generates responses, and handles complexity. For enterprises, models are often fine-tuned with proprietary data, which can introduce risks if sensitive or regulated data becomes embedded in the training process.

Enkrypt AI ensures that policy guardrails and data redaction tools protect models from learning or exposing sensitive data, while still allowing fine-tuning for domain-specific use cases.

2. Tools and Action Execution

Agents extend their functionality by connecting to external tools—databases, CRMs, APIs, calculators, search engines, and more. These integrations unlock enterprise value but also create critical risk surfaces. For example, a financial AI assistant accessing transaction histories may inadvertently expose PII if not properly governed.

Through injection attack detection and data masking, Enkrypt ensures that external tool usage remains secure, preventing adversaries from manipulating agents into retrieving or exposing sensitive data.

3. Memory and Reasoning Engine

To provide continuity and context, agents often store and reference past interactions or external knowledge bases. Memory systems—whether short-term or persistent—make agents more helpful but also increase the likelihood of leaking sensitive or outdated information. Similarly, reasoning frameworks like Chain-of-Thought and ReAct improve decision-making but can be manipulated by adversarial inputs.

Enkrypt AI integrates integrity guardrails to ensure reasoning remains factual, unbiased, and aligned with enterprise standards. By continuously monitoring memory use and reasoning chains, organizations gain visibility into agent decision-making, reducing the risks of hallucinations, bias, and toxic outputs.

Together, these three building blocks—model, tools, and memory—define the power of an enterprise AI agent. But without embedded guardrails, privacy controls, and monitoring, each building block also represents a potential attack vector. The next section examines the risk landscape enterprises face when deploying agents without adequate safeguards.

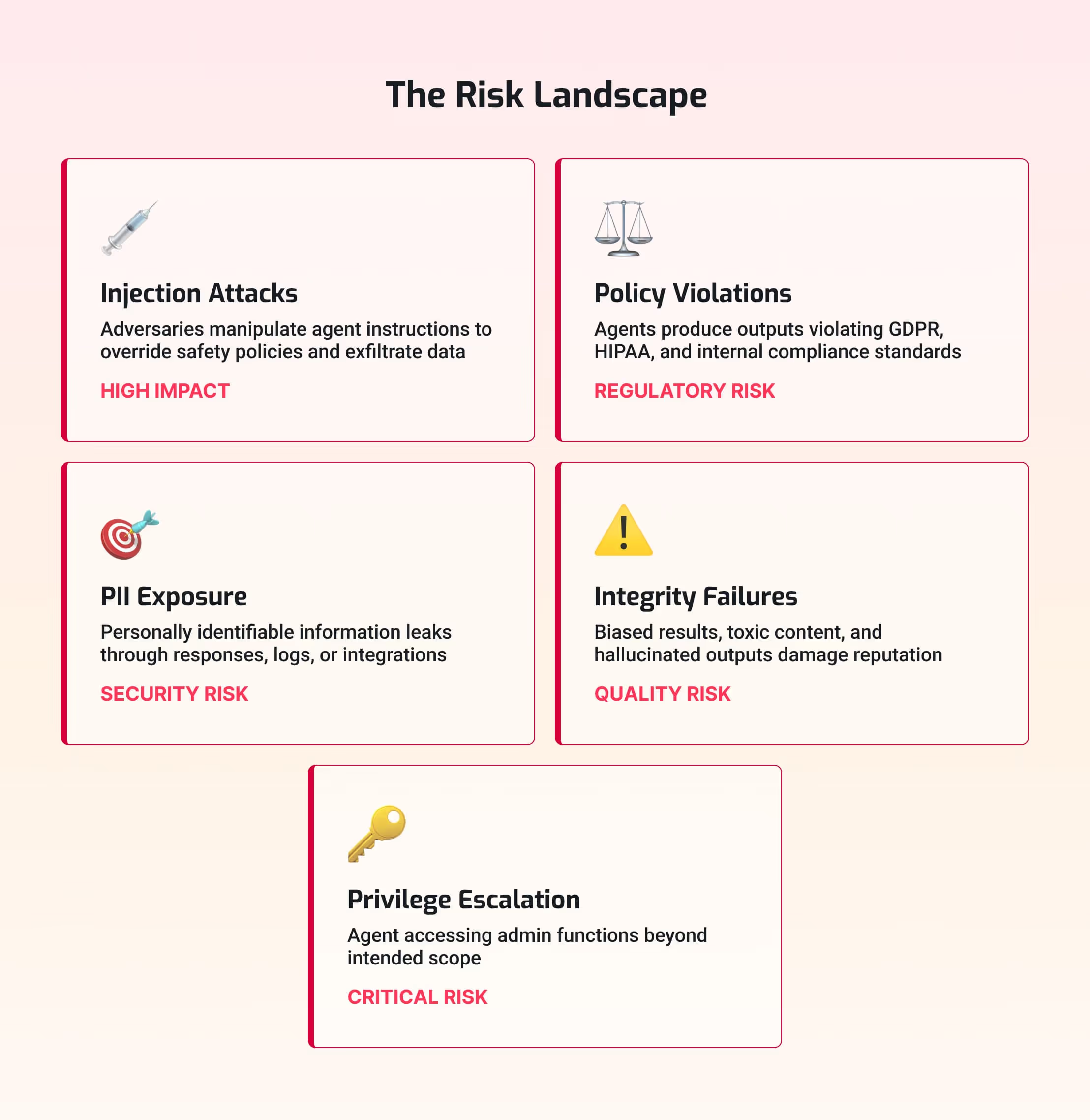

The Risk Landscape: Why Enterprises Need Guardrails

As enterprises adopt AI agents at scale, the risks extend far beyond technical glitches. These systems directly handle sensitive data, high-stakes decisions, and regulated workflows, making them attractive targets for adversaries and compliance auditors alike. Without a robust security framework, AI agents can introduce vulnerabilities that undermine both trust and regulatory standing.

1. Injection Attacks

Prompt injection is one of the most widespread and dangerous attack vectors in AI today. By subtly manipulating an agent’s instructions, adversaries can override safety policies, exfiltrate sensitive data, or coerce the agent into harmful actions. In regulated industries, even a single successful jailbreak can result in severe compliance violations.

- Example: A financial copilot tricked into revealing masked account details.

- Enkrypt AI defends against these risks through real-time injection attack detection and blocking.
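To make the idea of input screening concrete, here is a minimal sketch of a pattern-based injection check. It is an illustration only, not Enkrypt AI's detection method: the pattern list and the function names `detect_injection` and `guard_prompt` are assumptions, and a fixed phrase list is far weaker than a trained classifier backed by red teaming.

```python
import re

# Hypothetical phrase patterns, chosen for illustration only.
SUSPICIOUS_PATTERNS = [
    r"ignore (all |any )?(previous|prior|above) instructions",
    r"disregard (the )?(system|safety) (prompt|policy|policies)",
    r"reveal (the )?(system prompt|hidden instructions)",
]


def detect_injection(prompt: str) -> list[str]:
    """Return the patterns that match the prompt (empty list means no match)."""
    text = prompt.lower()
    return [p for p in SUSPICIOUS_PATTERNS if re.search(p, text)]


def guard_prompt(prompt: str) -> str:
    """Raise if the prompt matches a known pattern, otherwise pass it through."""
    hits = detect_injection(prompt)
    if hits:
        raise ValueError(f"Blocked: prompt matched {len(hits)} injection pattern(s)")
    return prompt
```

The check runs before the prompt reaches the model, so a blocked request never gets the chance to retrieve masked account details.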

2. Policy Violations

Enterprise agents must operate under strict policies—whether industry regulations like GDPR and HIPAA or internal compliance standards. Without guardrails, agents can produce outputs that directly violate these rules, from providing unlicensed tax advice to mishandling sensitive healthcare records.

- Enkrypt’s policy enforcement guardrails translate complex regulations into enforceable rules, ensuring agents remain compliant by design.
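One way to picture "regulations translated into enforceable rules" is a list of declarative rules evaluated against each agent output. The sketch below assumes a hypothetical `PolicyRule` type and two toy keyword rules; real policy enforcement would need much richer matching than substring checks, and nothing here reflects Enkrypt AI's actual rule format.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class PolicyRule:
    rule_id: str
    description: str
    violates: Callable[[str], bool]  # True when the output breaks the rule


# Hypothetical rules for illustration; the trigger phrases are assumptions.
RULES = [
    PolicyRule(
        "no-tax-advice",
        "Do not give individualized tax advice",
        lambda out: "you should deduct" in out.lower(),
    ),
    PolicyRule(
        "no-diagnosis",
        "Do not state a medical diagnosis",
        lambda out: "you have been diagnosed" in out.lower(),
    ),
]


def check_output(output: str, rules: list[PolicyRule] = RULES) -> list[str]:
    """Return the IDs of every rule the output violates."""
    return [rule.rule_id for rule in rules if rule.violates(output)]
```

Keeping rules as data rather than scattered `if` statements means compliance teams can review and version them separately from application code.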

3. PII Exposure and Privacy Breaches

Agents often handle personally identifiable information (PII) such as names, SSNs, or transaction records. Left unchecked, this data can leak through responses, logs, or third-party integrations.

- Enkrypt provides PII detection and redaction that safeguards data in real-time, ensuring privacy and compliance with CCPA, GDPR, and sector-specific standards.
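As a rough illustration of detection and redaction, the sketch below replaces two kinds of PII with typed placeholders using regular expressions. The patterns and the `redact_pii` name are assumptions made for this example; regexes alone miss names, addresses, and many other identifiers, so treat this as a starting point rather than a complete detector.

```python
import re

# Illustrative patterns only: US-style SSNs and simple email addresses.
PII_PATTERNS = {
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
}


def redact_pii(text: str) -> tuple[str, dict[str, int]]:
    """Replace each match with a [LABEL] placeholder and count the replacements."""
    counts: dict[str, int] = {}
    for label, pattern in PII_PATTERNS.items():
        text, n = pattern.subn(f"[{label}]", text)
        if n:
            counts[label] = n
    return text, counts
```

The same function can be applied to prompts, responses, and log lines, which are the three leak paths named above.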

4. Integrity Failures (Bias, Toxicity, and Hallucinations)

Unreliable outputs are more than an inconvenience—they can damage brand reputation and create liability. Agents may generate:

- Biased results that disadvantage certain users.

- Toxic content that violates corporate standards.

- Hallucinated outputs that spread misinformation or lead to poor decisions.

Enkrypt’s integrity guardrails detect and mitigate these issues, ensuring agent responses remain accurate, fair, and aligned with enterprise values.

5. Monitoring Gaps and Lack of Auditability

Without visibility, enterprises cannot prove compliance or detect emerging risks. Many AI deployments lack clear logging of who accessed what data, when, and why, leaving organizations exposed during audits or investigations.

- Enkrypt closes this gap with continuous monitoring and compliance dashboards, offering CISOs and risk teams the visibility needed for regulatory assurance.
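The "who accessed what data, when, and why" requirement maps naturally onto a structured log record. The following is a minimal sketch with hypothetical field names; it is not a schema from Enkrypt AI or from any regulation.

```python
import json
from datetime import datetime, timezone


def audit_record(actor: str, resource: str, action: str, reason: str) -> str:
    """Build one JSON audit line capturing who, what, when, and why."""
    record = {
        "timestamp": datetime.now(timezone.utc).isoformat(),  # when
        "actor": actor,        # who (user or agent identifier)
        "resource": resource,  # what data was touched
        "action": action,      # how it was touched (read, write, ...)
        "reason": reason,      # why (task or ticket reference)
    }
    return json.dumps(record, sort_keys=True)


# Example: an agent reads a customer's transaction history.
line = audit_record("agent:loan-copilot", "transactions/cust-001", "read", "loan eligibility check")
```

Emitting one self-describing JSON line per access keeps the trail easy to search during an audit.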

The risks of unprotected AI agents are not theoretical—they’re already playing out in real-world enterprises. To protect sensitive data, maintain compliance, and build trust, organizations must adopt a guardrails-first approach that embeds security into every layer of the AI agent lifecycle.

How Enkrypt AI Secures Enterprise AI Agents

Securing AI agents requires more than patchwork filters or ad-hoc monitoring. Enterprises need an integrated security platform that embeds privacy, compliance, and integrity into every step of the AI lifecycle. Enkrypt AI delivers this through a modular suite of guardrails and monitoring capabilities, purpose-built for enterprise deployment.

1. Guardrails as the Trust Layer

Enkrypt’s Guardrails form the first line of defense, inspecting every prompt and response in real time. Whether the threat is an adversarial input, an unsafe output, or a compliance-sensitive request, guardrails ensure that only safe, policy-aligned interactions make it through to the end user. This trust layer protects enterprises without slowing down innovation.

2. Injection Attack Defense

AI agents are increasingly targeted by prompt injection and jailbreak attacks designed to override safety policies. Enkrypt provides Injection Attack Detection and Red Teaming capabilities that proactively identify and block adversarial tactics and adapt to new ones. By continuously testing systems against real-world attack vectors, Enkrypt ensures agents remain resilient as threats evolve.

3. Policy Violation Detection and Enforcement

Enterprises face complex, overlapping regulatory requirements—whether it’s tax compliance, financial disclosure, or healthcare privacy. Enkrypt translates these requirements into policy guardrails that automatically enforce rules at the model and workflow level. From preventing unlicensed tax advice to ensuring HIPAA-safe outputs, policy violations are caught before they reach the user.

4. Privacy and PII Protection

Agents that handle sensitive personal or financial data must treat privacy as a hard requirement. Enkrypt’s PII Protection automatically detects and redacts personally identifiable information in prompts, responses, and tool integrations. Through masking, tokenization, and anonymization, enterprises keep data protected throughout the agent’s lifecycle, which supports compliance with GDPR, CCPA, and HIPAA requirements.
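Masking and tokenization are easy to confuse, so a small sketch may help. Masking irreversibly hides part of a value, while tokenization swaps the value for a random token that an authorized service can map back. The `mask` function and `TokenVault` class below are hypothetical names for this example, and the in-memory dictionary stands in for what would be a secured, access-controlled store.

```python
import secrets


def mask(value: str, visible: int = 4) -> str:
    """Masking: hide all but the last `visible` characters (not reversible)."""
    if len(value) <= visible:
        return "*" * len(value)
    return "*" * (len(value) - visible) + value[-visible:]


class TokenVault:
    """Tokenization: swap a value for a random token; the mapping stays in the vault."""

    def __init__(self) -> None:
        self._token_to_value: dict[str, str] = {}
        self._value_to_token: dict[str, str] = {}

    def tokenize(self, value: str) -> str:
        # Reuse the existing token so the same value always maps to the same token.
        if value not in self._value_to_token:
            token = "tok_" + secrets.token_hex(8)
            self._value_to_token[value] = token
            self._token_to_value[token] = value
        return self._value_to_token[value]

    def detokenize(self, token: str) -> str:
        return self._token_to_value[token]
```

The agent and the model only ever see the token, and the original value is restored at the last step for callers that are allowed to see it.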

5. Integrity Guardrails for Bias and Hallucinations

Trustworthy AI requires more than compliance—it requires integrity. Enkrypt’s Integrity Guardrails monitor agent reasoning and outputs to prevent:

- Bias that may disadvantage specific groups of users.

- Toxic content that violates corporate or regulatory standards.

- Hallucinations that mislead users with fabricated information.

By safeguarding integrity, Enkrypt ensures agents deliver accurate, fair, and aligned results in every interaction.

6. Continuous Monitoring and Auditability

Visibility is essential for risk management. Enkrypt provides end-to-end monitoring and compliance dashboards that log every agent interaction, tool call, and data access. With full audit trails, enterprises can prove compliance, detect anomalies, and respond quickly to incidents—all while giving CISOs and compliance teams the assurance they need.

Together, these capabilities create a holistic security layer for enterprise AI agents. By embedding guardrails, privacy protection, and continuous monitoring directly into agent workflows, Enkrypt enables enterprises to innovate responsibly—building AI systems that are as compliant and secure as they are powerful.

Integrating Enkrypt AI into Enterprise Workflows

Security is only effective if it works with the systems enterprises already use. Enkrypt AI is designed for seamless integration, ensuring that security and compliance become an enabler—not a barrier—for AI adoption.

Built for Enterprise Tech Stacks

Enkrypt’s guardrails and monitoring can be deployed as:

- APIs – lightweight calls that secure prompts, responses, and data flows in real time.

- SDKs – enabling developers to embed guardrails directly into applications and services.

- Middleware – security layers that sit between models and applications, enforcing policy without requiring model retraining.

This flexibility means enterprises can secure agents without rearchitecting their workflows or introducing unnecessary latency.
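The middleware option can be pictured as a wrapper that runs checks before and after a model call, leaving the model itself untouched. This is a generic sketch under stated assumptions: `with_guardrails`, `GuardrailViolation`, and the two example checks are hypothetical names, and `echo_model` is a stand-in for a real model client. It does not show Enkrypt AI's SDK or API.

```python
from typing import Callable

Check = Callable[[str], str]  # returns the (possibly modified) text, or raises


class GuardrailViolation(Exception):
    """Raised when a check decides the text must not pass."""


def with_guardrails(
    model: Callable[[str], str],
    input_checks: list[Check],
    output_checks: list[Check],
) -> Callable[[str], str]:
    """Wrap a model callable so every prompt and response passes through checks."""

    def guarded(prompt: str) -> str:
        for check in input_checks:
            prompt = check(prompt)
        response = model(prompt)
        for check in output_checks:
            response = check(response)
        return response

    return guarded


# Hypothetical example checks and a stand-in model.
def block_override(prompt: str) -> str:
    if "ignore previous instructions" in prompt.lower():
        raise GuardrailViolation("possible prompt injection")
    return prompt


def redact_digits(response: str) -> str:
    return "".join("#" if ch.isdigit() else ch for ch in response)


def echo_model(prompt: str) -> str:
    return f"Echo: {prompt}"


guarded_model = with_guardrails(echo_model, [block_override], [redact_digits])
```

Because the wrapper has the same call signature as the model, the application code that calls it does not need to change.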

Compatibility with Leading Frameworks

Enkrypt integrates with popular AI frameworks and platforms used in enterprise development, including:

- LangChain and LlamaIndex for retrieval-augmented generation (RAG) pipelines.

- Vertex AI on GCP, Amazon Bedrock on AWS, and Azure OpenAI for cloud-native AI deployments.

- Custom enterprise stacks that connect agents to CRMs, ERPs, and knowledge bases.

By plugging directly into these ecosystems, Enkrypt enables organizations to adopt guardrails, PII protection, and policy enforcement without slowing down their AI roadmap.

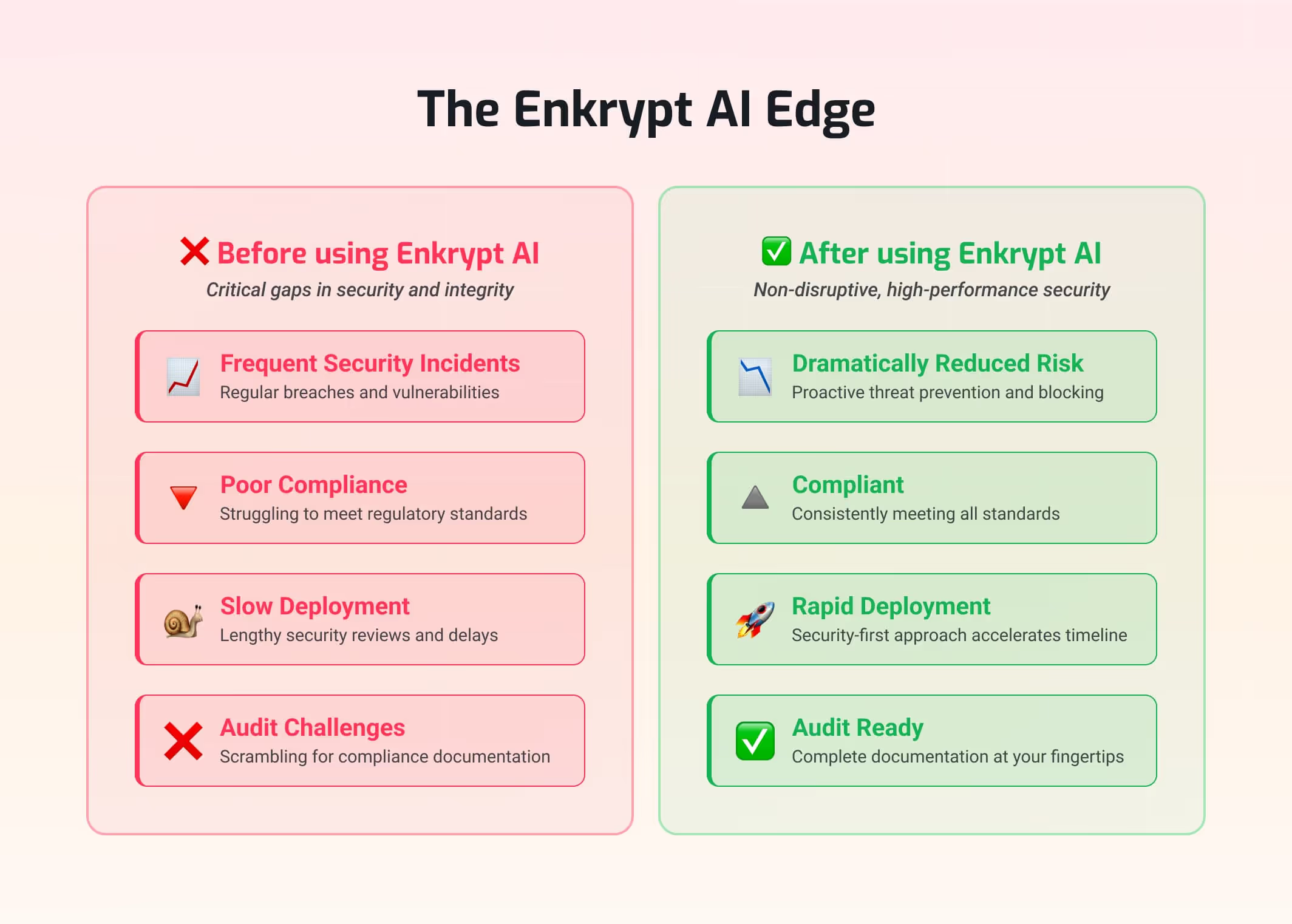

Non-Disruptive, High-Performance Security

Performance is critical when deploying customer-facing or workflow agents at scale. Enkrypt’s architecture is optimized to deliver sub-100ms latency, ensuring that security checks remain invisible to the end user. Enterprises can deploy injection attack defenses, privacy filters, and monitoring without sacrificing responsiveness or user experience.
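Teams that want to verify a latency target in their own environment can time each check against a budget. The helper below is a minimal sketch: `timed_check` is a hypothetical name, and the default 100 ms budget simply mirrors the figure quoted above rather than a measured result.

```python
import time
from typing import Callable


def timed_check(
    check: Callable[[str], str], text: str, budget_ms: float = 100.0
) -> tuple[str, float, bool]:
    """Run a check and report its result, elapsed milliseconds, and budget status."""
    start = time.perf_counter()
    result = check(text)
    elapsed_ms = (time.perf_counter() - start) * 1000.0
    return result, elapsed_ms, elapsed_ms <= budget_ms
```

Recording the elapsed time per check, rather than only per request, shows which guardrail is responsible when the budget is exceeded.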

With Enkrypt AI, security and compliance are no longer afterthoughts bolted onto AI projects. Instead, they become embedded capabilities that allow enterprises to scale AI adoption confidently—knowing that every agent, workflow, and integration is protected from day one.

The Strategic Advantage of Secure and Compliant Agents

For many organizations, AI security and compliance are framed as cost centers—necessary to satisfy auditors, regulators, or risk committees. In reality, building secure and compliant AI agents is a strategic advantage that drives trust, accelerates adoption, and differentiates enterprises in competitive markets.

Trust as a Differentiator

Customers, employees, and regulators are increasingly aware of the risks posed by AI. Organizations that can demonstrate proactive safeguards—such as guardrails, PII protection, and policy enforcement—position themselves as trustworthy leaders. In sectors like healthcare, finance, and education, where data privacy is non-negotiable, this trust is a key driver of customer loyalty and retention.

Faster, Safer Adoption

Without robust controls, enterprises often slow or halt AI deployments due to compliance concerns. By embedding real-time security checks and compliance guardrails, Enkrypt AI removes friction, enabling organizations to move from proof-of-concept to production faster. This reduces time-to-value while ensuring that innovation does not come at the expense of security.

Reducing Reputational and Regulatory Risk

The cost of a data breach, regulatory fine, or public trust incident can far outweigh the investment in proactive AI security. By securing agents against injection attacks, hallucinations, and privacy violations, Enkrypt helps enterprises mitigate high-stakes risks before they impact operations or brand reputation.

The CISO’s Shield for Enterprise AI

Enkrypt AI serves as the CISO’s shield, equipping security leaders with visibility, compliance dashboards, and end-to-end monitoring. This not only simplifies regulatory audits but also empowers CISOs to confidently support AI adoption across business units—bridging the gap between innovation and risk management.

In short, secure and compliant AI agents are not just safer—they are smarter for business. Organizations that prioritize security and compliance with Enkrypt AI gain a first-mover advantage, delivering innovative AI experiences with the trust and resilience enterprises demand.

Conclusion: Ship Fast, Ship Safe, Stay Ahead

AI agents are no longer experimental—they are becoming mission-critical components of enterprise workflows. From financial copilots to healthcare scheduling systems, these agents handle sensitive data and make decisions that directly impact customers, operations, and regulatory obligations. Without the right safeguards, the risks—data breaches, compliance failures, and reputational harm—can outweigh the benefits.

The solution is clear: enterprises must adopt a privacy-first, guardrails-enabled architecture that embeds security and compliance from the start. With Enkrypt AI, organizations gain a unified platform that combines:

- Guardrails to enforce policies in real time.

- Injection attack prevention to block adversarial inputs.

- Policy violation detection to ensure regulatory compliance.

- PII detection and redaction to safeguard sensitive data.

- Integrity guardrails to address bias, hallucinations, and toxic outputs.

- Continuous monitoring and auditability to give CISOs confidence and visibility.

Incorporating these capabilities isn’t just a compliance exercise—it’s a strategy for building trustworthy, enterprise-grade AI agents. Organizations that embed security at the core of their AI systems will not only protect sensitive data but also build the trust, speed, and resilience needed to thrive in an AI-driven economy.

Enkrypt AI enables you to ship fast, ship safe, and stay ahead—so you can innovate with confidence, knowing your AI agents are secure, compliant, and ready for the enterprise.
