America’s AI Action Plan: Racing to Stay Ahead

The race for artificial intelligence dominance is heating up, and the United States just made its biggest move yet. In July 2025, the Trump Administration released “Winning the AI Race: America’s AI Action Plan,” a bold strategy to keep America at the forefront of AI development.

This isn’t just another government report gathering dust on a shelf. The plan outlines over 90 specific actions the federal government will take in the coming months. As officials put it, winning the AI race will create “a new golden age of human flourishing, economic competitiveness, and national security.”

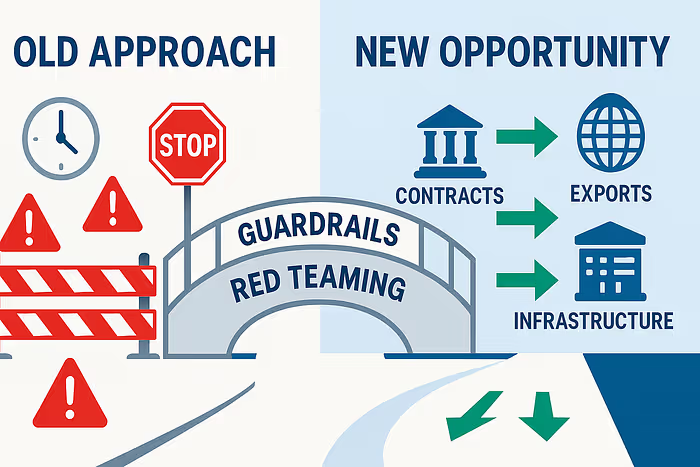

The plan marks a clear shift from the previous administration’s approach. Instead of focusing heavily on AI regulation and safety concerns, this strategy prioritizes speed, innovation, and beating the competition.

The Three Pillars of American AI Dominance

The AI Action Plan is built on three pillars: accelerating AI innovation, building American AI infrastructure, and leading in international AI diplomacy and security. Together, they aim to establish American leadership across the AI ecosystem, from fostering innovation to building the necessary infrastructure and maintaining global influence.

Pillar One: Accelerating Innovation

The first pillar focuses on removing barriers that currently hinder American AI innovation. The plan calls on the government to “remove onerous Federal regulations that hinder AI development and deployment, and seek private sector input on rules to remove.” This marks a clear departure from previous approaches that emphasized extensive regulatory oversight.

The administration’s strategy recognizes that excessive bureaucratic hurdles can stifle the rapid pace of AI development. By streamlining regulatory processes and actively soliciting industry feedback, the government aims to create an environment where American companies can innovate without being bogged down by unnecessary red tape.

A crucial component of this pillar involves ensuring ideological neutrality in AI systems, particularly those used by the federal government. The plan includes “updating Federal procurement guidelines to ensure that the government only contracts with frontier large language model developers who ensure that their systems are objective and free from top-down ideological bias.” This requirement could significantly impact how AI companies design and train their models for government contracts.

Pillar Two: Building American AI Infrastructure

The second pillar addresses one of the most pressing challenges in AI development: the need for massive computational infrastructure. The plan recognizes that AI supremacy requires not just brilliant algorithms and innovative companies, but also the physical infrastructure to support large-scale AI development and deployment.

The infrastructure component includes “promoting rapid buildout of data centers: expediting and modernizing permits for data centers and semiconductor fabs, as well as creating new national initiatives to increase high-demand occupations like electricians and HVAC technicians.” This approach acknowledges that building AI infrastructure isn’t just about technology; it’s about creating an entire ecosystem of supporting industries and skilled workers.

The focus on data centers is particularly significant given the enormous computational requirements of modern AI systems. Training and running large language models and other AI applications requires massive amounts of computing power, which in turn demands extensive data center capacity. The semiconductor component is equally crucial, as advanced chips are the backbone of AI computation.

Pillar Three: Leading in International Diplomacy and Security

The third pillar recognizes that AI leadership isn’t just about domestic capabilities; it’s about shaping global AI development and ensuring that American values and interests are reflected in the international AI ecosystem. This includes “exporting American AI” through partnerships between the Commerce and State Departments and industry “to deliver secure, full-stack AI export packages, including hardware, models, software, applications, and standards, to America’s friends and allies around the world.”

This comprehensive export strategy goes beyond simply selling AI products. It involves creating complete AI ecosystems that include not just the technology itself, but the supporting infrastructure, standards, and governance frameworks. By providing allies with full-stack AI solutions, the United States can help ensure that global AI development follows American-led standards and principles.

The security stakes of this pillar are high. As AI becomes increasingly important for national defense, economic competitiveness, and governance, countries that control AI development and deployment will gain significant advantages. By actively promoting American AI solutions abroad, the plan aims to preserve American influence in an increasingly multipolar technological world.

A Different Philosophy

The contrast with previous approaches is stark. The Biden administration focused heavily on AI safety, ethics, and oversight through executive orders and federal guidance, most notably Executive Order 14110 of October 2023, which President Trump revoked in January 2025. The Trump administration is betting that America’s biggest risk isn’t AI misuse, but falling behind competitors like China.

This shift shows up throughout the plan. Where previous policies emphasized caution and extensive oversight, the new approach prioritizes speed and market leadership. The assumption is that American companies, when unleashed, will naturally develop AI systems that are both powerful and responsible.

Real-World Challenges

Implementing this ambitious plan won’t be easy. Coordinating over 90 policy actions across multiple government agencies requires unprecedented cooperation. Success depends heavily on execution, not just good intentions.

The deregulation emphasis also creates potential tensions. Critics worry that reducing oversight could lead to AI systems that cause harm or perpetuate unfair biases. The administration’s bet is that market forces and international competition will drive responsible development better than government regulations.

International partnerships face their own hurdles. While the plan envisions extensive AI exports to allies, other countries are developing their own capabilities. Building these partnerships requires offering terms that benefit everyone involved, not just the United States.

What’s Missing from the Picture

While the AI Action Plan presents an ambitious vision, several critical details remain unclear. The plan does not attach specific timelines or budget allocations to its 90+ policy actions, and it does not clearly assign agency responsibility for implementing them. It says little about how the strategy responds to China’s specific AI initiatives or how it aligns with international frameworks like the EU’s AI Act. It also does not define what constitutes “ideological bias” in AI systems, nor does it address the cybersecurity implications of rapid infrastructure expansion. Stakeholder perspectives are largely absent as well, including industry reactions, academic viewpoints, and privacy advocates’ concerns about reduced oversight.

Perhaps most significantly, the plan doesn’t thoroughly address potential risks and unintended consequences. Environmental impacts of massive data center expansion, monopolization risks in the AI industry, and potential job displacement effects receive little attention. The economic analysis lacks specific investment figures and sector-by-sector impact assessments. Implementation challenges such as legal obstacles to deregulation, state versus federal jurisdiction issues, and how this interacts with existing privacy laws are largely unaddressed. These gaps suggest that while the strategic direction is clear, the practical details of execution and risk mitigation still need substantial development.

What This Means for Your Company

If you’re a compliance officer at a company using or planning to use AI, this action plan creates both opportunities and new considerations for your organization.

Reduced Regulatory Burden

The plan’s emphasis on removing “onerous Federal regulations” suggests a lighter regulatory environment ahead. This could mean fewer compliance requirements and faster approval processes for AI implementations. However, this doesn’t mean a free-for-all. Companies should still maintain strong internal governance frameworks, as regulatory pendulums can swing back.

New Procurement Requirements

If your company sells AI services to the federal government, pay close attention to the new requirement that systems be “objective and free from top-down ideological bias.” This will likely mean:

- Enhanced documentation of your AI training processes

- Regular bias testing and reporting

- Clear policies on how your AI systems handle controversial topics

- Potential third-party audits of your AI outputs

Export Opportunities and Compliance

The plan’s focus on exporting American AI globally creates new business opportunities, especially for companies developing “full-stack” AI solutions. However, this comes with export compliance considerations:

- Understanding which AI technologies fall under export control regulations

- Ensuring proper licensing for international AI deployments

- Managing data sovereignty requirements in different countries

- Navigating varying international AI regulations

Infrastructure Investment Implications

The accelerated data center and semiconductor facility development could impact your AI strategy:

- Potentially lower costs for cloud computing and AI services as capacity increases

- More reliable domestic supply chains for AI hardware

- Faster deployment timelines for AI projects due to improved infrastructure

Preparing for the Shift

Smart compliance teams should:

- Review current AI governance policies to ensure they remain relevant in a lighter regulatory environment

- Develop processes for documenting AI system objectivity and bias mitigation

- Stay informed about evolving export regulations for AI technologies

- Consider how international AI partnerships might affect compliance obligations

The key message for compliance officers: while the regulatory environment may become more permissive, maintaining strong internal AI governance will be more important than ever. Companies that can demonstrate responsible AI practices will be better positioned for government contracts and international partnerships.

How Enkrypt AI Fits Into This New Landscape

As the AI Action Plan reshapes the regulatory environment, companies face a unique challenge: how to move fast while maintaining the responsible AI practices that will differentiate them in government contracts and global markets. This is where Enkrypt AI becomes essential for organizations looking to capitalize on the opportunities created by the plan.

With the government’s new emphasis on AI systems that are “objective and free from top-down ideological bias,” companies need robust tools to detect and mitigate bias throughout their AI development lifecycle. Enkrypt AI’s platform automatically identifies and addresses risks like hallucinations, privacy leaks, and potential misuse that could disqualify companies from lucrative federal contracts. Our industry-specific red teaming capabilities help organizations thoroughly test their AI systems against the objectivity standards that government procurement is expected to require.

The plan’s focus on rapid infrastructure development and reduced regulatory barriers means companies will deploy AI faster than ever. However, this speed can’t come at the cost of safety or compliance. Enkrypt AI’s real-time guardrails and continuous monitoring ensure that as companies scale their AI operations to take advantage of improved infrastructure and streamlined regulations, they maintain the security and responsibility standards that will be crucial for success in both domestic and international markets.

For companies eyeing the export opportunities outlined in the plan’s third pillar, Enkrypt AI provides the compliance foundation needed to navigate varying international standards while maintaining the “secure, full-stack AI” approach the government wants to promote globally. Our platform, aligned with established frameworks from OWASP, NIST, and MITRE, gives organizations the confidence to scale AI safely across borders while staying in control of risks that could jeopardize valuable partnerships with allies.

The Big Picture

The AI Action Plan represents the most comprehensive federal approach to AI strategy in American history, recognizing that AI is a foundational capability that will shape economic, military, and social power for decades. The administration is betting that America’s traditional strengths (entrepreneurial innovation, infrastructure development capabilities, and strong alliance networks) will prove decisive in the AI age. However, success requires more than removing regulatory barriers and building data centers. Companies must balance the speed and innovation that the plan enables with the responsible AI practices that will distinguish winners in government contracts, international partnerships, and domestic markets.

The companies that will thrive in this new environment are those that can demonstrate objective, secure AI systems while moving at unprecedented speed. This is where platforms like Enkrypt AI become game-changers. By automatically detecting risks, ensuring compliance with evolving standards, and maintaining the objectivity that government contracts now require, Enkrypt AI enables organizations to capitalize on every opportunity the Action Plan creates. As the AI race intensifies and the stakes continue to rise, having the right tools to scale AI safely isn’t just an advantage; it’s essential for American companies ready to lead the world into the AI age.
