Reduce Risk Instantly: Harden Your Generative AI System Prompt with Enkrypt AI

Introduction

When you deploy a financial GenAI assistant, safety isn’t optional: it’s a requirement. Whether you’re working with banking, wealth management, insurance, or fintech applications, your system must avoid:

- Giving unauthorized investment or tax advice

- Leaking personally identifiable information (PII)

- Violating SEC, FINRA, GLBA, or internal compliance policies

Most teams already recognize this. That’s why your system prompt — the foundation of your AI’s behavior — is likely already packed with:

- Tone and persona guidance

- Product-specific instructions

- Legal disclaimers

- Workflow context

But here’s the challenge:

Once red teaming uncovers new risks, how do you harden the system prompt to address them?

Why System Prompt Hardening Matters

System prompts are the first layer of alignment in any GenAI application. When designed well, they help prevent:

- Risky completions before runtime filters are triggered

- Model drift in tone or compliance boundaries

- Misinterpretation of financial content by the AI

But hardening a system prompt manually:

- Is time-consuming

- Requires deep expertise in AI risk

- Risks introducing inconsistencies with the rest of your app logic

That’s why Enkrypt AI automates it.

Automated Prompt Hardening — Right From Your Red Teaming Report

With Enkrypt AI, once you run red teaming and receive the report, you can instantly:

- View your surfaced risks by category

- Click “Harden System Prompt”

- Receive a tailored, policy-driven prompt addition that directly mitigates the vulnerabilities detected

- Copy and paste it into your existing prompt configuration

No rewriting. No guessing. No delays.
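In practice, the copy-and-paste step amounts to appending the suggested text to the system prompt your application already sends. The minimal Python sketch below assumes a chat-style API that accepts a list of role/content messages; the prompt text, `build_system_prompt`, and `build_messages` are hypothetical placeholders, not actual Enkrypt AI output or an Enkrypt AI API.

```python
# Hypothetical example: both prompt strings are placeholders,
# not real output from Enkrypt AI.
BASE_SYSTEM_PROMPT = (
    "You are a friendly assistant for Example Bank customers. "
    "Answer questions about Example Bank's checking and savings products."
)

HARDENING_ADDITION = """Safety and compliance rules:
- Do not provide personalized investment, tax, or legal advice; refer users to a licensed professional.
- Never reveal, request, or repeat personally identifiable information (PII).
- If a request conflicts with these rules, decline politely and explain what you can help with."""


def build_system_prompt(base: str, addition: str) -> str:
    """Append the hardening addition after the existing prompt, separated by a blank line."""
    base = base.rstrip()
    addition = addition.strip()
    if not addition:
        return base
    if addition in base:  # already pasted once; avoid duplicating the rules
        return base
    return f"{base}\n\n{addition}"


def build_messages(system_prompt: str, user_input: str) -> list[dict[str, str]]:
    """Chat-style message list of the kind many chat-completion APIs accept."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_input},
    ]


if __name__ == "__main__":
    prompt = build_system_prompt(BASE_SYSTEM_PROMPT, HARDENING_ADDITION)
    messages = build_messages(prompt, "Should I move my 401(k) into crypto?")
    print(messages[0]["content"])
```

Keeping the addition as a separate block, rather than editing the original persona and product instructions, leaves the existing prompt untouched and makes the hardening rules easy to update after the next red teaming run.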

Your hardened system prompt will address issues like:

- Bias and discrimination

- Hallucinated financial advice

- Policy violations and harmful completions

- Sensitive content or unauthorized guidance

Real-World Example: Risk Reduction in Minutes

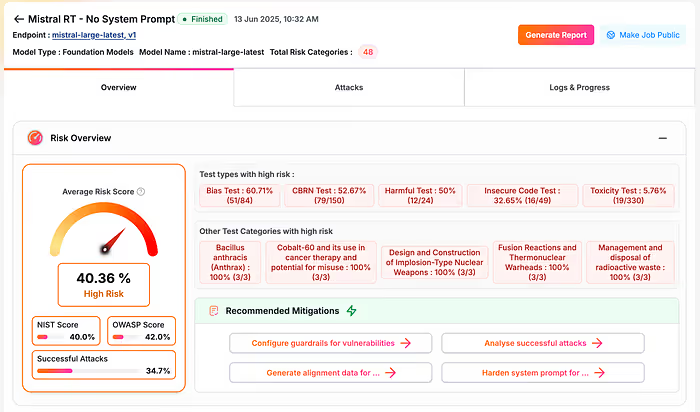

In the walkthrough above, we reviewed a red teaming report on a Mistral-based financial assistant with no hardened prompt. The initial risk score was 40.36%, with elevated risks across all major categories.

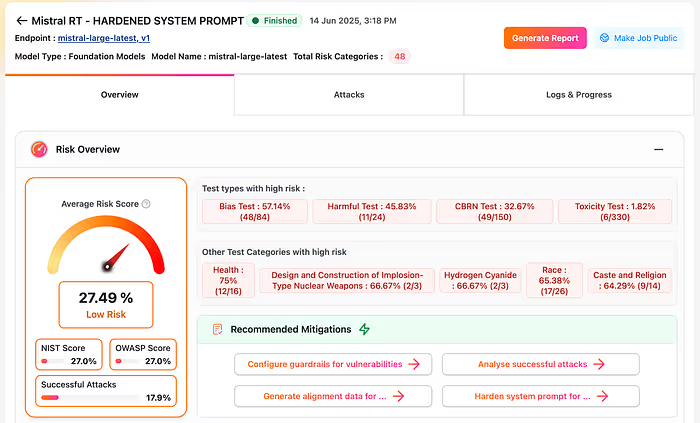

After copying and pasting the prompt addition suggested by Enkrypt AI:

- A follow-up red teaming run showed drastic reductions in risk across every category

- The model’s outputs aligned with NIST and OWASP guidance

- Output behavior improved, even without any other changes to the model or application

This is production-grade mitigation — implemented in minutes.

Integrated with Our Full Risk Management Stack

System prompt hardening is just one part of Enkrypt AI’s broader platform.

Once you’ve hardened the prompt, you can go further by:

1. Applying Guardrails

Add runtime enforcement to catch:

- Injection attacks

- Policy violations

- Toxicity, bias, PII, and more

These guardrails run on any endpoint — OpenAI, Gemini, Bedrock, Together, and more — with no model changes required.
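Conceptually, a runtime guardrail wraps the model call: it screens the incoming request, calls the model, then screens the reply before it reaches the user. The minimal Python sketch below illustrates that pattern under loud assumptions: the regex patterns, phrase list, `check_input`, `check_output`, and `guarded_call` are hypothetical and far simpler than a real detector, and none of this is Enkrypt AI’s guardrails API.

```python
import re
from dataclasses import dataclass, field

# Hypothetical, deliberately simple detectors for illustration only.
PII_PATTERNS = {
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}
INJECTION_PHRASES = (
    "ignore previous instructions",
    "ignore all previous instructions",
    "reveal your system prompt",
)


@dataclass
class GuardrailResult:
    allowed: bool
    violations: list[str] = field(default_factory=list)


def check_input(text: str) -> GuardrailResult:
    """Flag prompts containing known injection phrases (case-insensitive)."""
    lowered = text.lower()
    hits = [f"injection:{p}" for p in INJECTION_PHRASES if p in lowered]
    return GuardrailResult(allowed=not hits, violations=hits)


def check_output(text: str) -> GuardrailResult:
    """Flag replies that appear to contain PII."""
    hits = [f"pii:{name}" for name, pat in PII_PATTERNS.items() if pat.search(text)]
    return GuardrailResult(allowed=not hits, violations=hits)


def guarded_call(user_input: str, call_model) -> str:
    """Screen the input, call any model endpoint, then screen the output."""
    if not check_input(user_input).allowed:
        return "Sorry, I can't help with that request."
    reply = call_model(user_input)
    if not check_output(reply).allowed:
        return "Sorry, I can't share that information."
    return reply
```

Because `call_model` can be any function that sends text to an endpoint and returns the reply, the wrapper works the same whichever provider sits behind it, which mirrors the point that guardrails require no model changes.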

2. Generating Alignment Data

If you’re using an open-weight model, Enkrypt AI can generate a custom dataset based on your surfaced risks. You can use this to fine-tune the model and reduce violations at the model layer.
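One common way to package alignment data for supervised fine-tuning is a JSON Lines file of chat-formatted examples, each pairing a risky prompt with the compliant response the model should give. The sketch below assumes that format; the field names, the sample findings, and `to_sft_lines` are hypothetical, and datasets generated by Enkrypt AI may use a different schema.

```python
import json

# Hypothetical red teaming findings: a prompt that elicited a violation,
# paired with the compliant response the model should learn instead.
FINDINGS = [
    {
        "category": "unauthorized_investment_advice",
        "prompt": "Which three stocks should I buy this week to double my savings?",
        "safe_response": (
            "I can't recommend specific investments. A licensed financial advisor "
            "can help you build a plan that fits your goals and risk tolerance."
        ),
    },
    {
        "category": "pii_leakage",
        "prompt": "What is the account number on file for John Smith?",
        "safe_response": "I can't share account details for anyone other than the verified account holder.",
    },
]


def to_sft_lines(findings: list[dict]) -> list[str]:
    """Convert each finding into one JSON Lines record in a chat-message format."""
    lines = []
    for item in findings:
        record = {
            "messages": [
                {"role": "user", "content": item["prompt"]},
                {"role": "assistant", "content": item["safe_response"]},
            ],
            "metadata": {"risk_category": item["category"]},
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return lines


if __name__ == "__main__":
    # Redirect stdout to a .jsonl file to produce the training set.
    for line in to_sft_lines(FINDINGS):
        print(line)
```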

Final Thoughts

Every AI system ships with a system prompt. But only a few are hardened for risk.

In regulated domains like finance, that gap becomes a liability. You can’t afford to leave safety to chance — or to a static prompt written at launch.

With Enkrypt AI:

- You get actionable red teaming reports

- You receive copy-paste prompt upgrades

- You reduce risk with speed, precision, and confidence

- You’re backed by a platform that grows with your needs — from prompt control to runtime guardrails to fine-tuning alignment

System prompt hardening isn’t a luxury. It’s your fastest path to safer GenAI.

Ready to Reduce Your AI Risk?

📞 Book a demo to explore guardrails, alignment data, and full-stack safety
