Securing a Home Loan Chatbot Built on Together AI — with Enkrypt AI

Introduction

Building a home loan chatbot is easy. Keeping it compliant with U.S. financial regulations? That’s the hard part.

Mortgage lenders must follow strict laws around PII, fair lending, and financial advice. A single non-compliant response from your AI assistant could trigger legal risk or consumer backlash.

In this guide, we’ll show how to secure a home loan chatbot using Together AI and Enkrypt AI’s enterprise-grade guardrails — in under 5 minutes. No model retraining. No new infrastructure. Just safe, ready-to-deploy AI.

Meet the Stack: Together AI × Enkrypt AI

Together AI is an inference platform that gives developers low-latency access to open-weight models such as LLaMA-3 and Mixtral.

Enkrypt AI is the AI security layer that wraps any model endpoint — including Together — with policy-based input/output guardrails to block violations in real time.

With Enkrypt AI:

- You can bring your own policies or use our prebuilt rules for financial, legal, healthcare, and enterprise domains.

- You can integrate directly into any endpoint: OpenAI, Together, Mistral, Claude, or even local inference setups.

- You get deployment-ready configurations that slot into your app without requiring LLM retraining or rewriting your business logic.

Use Case: A Mortgage Lending Chatbot

Let’s say we’re building a chatbot to help prospective borrowers:

- Understand home loan terms

- Get initial eligibility info

- Ask common mortgage questions

But as a mortgage provider, you must comply with major laws such as GLBA, ECOA, and FCRA, as well as CFPB guidelines.

Your chatbot can’t afford to:

- Disclose PII

- Offer biased or discriminatory responses

- Provide unauthorized financial advice

So how do we build in safety from the start?

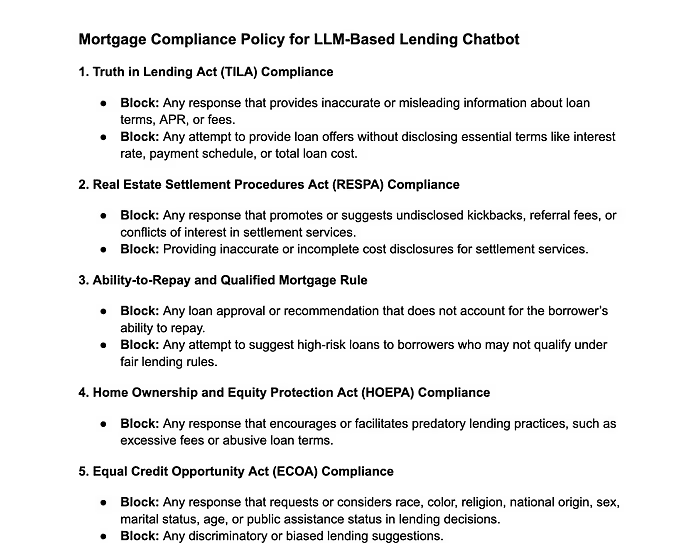

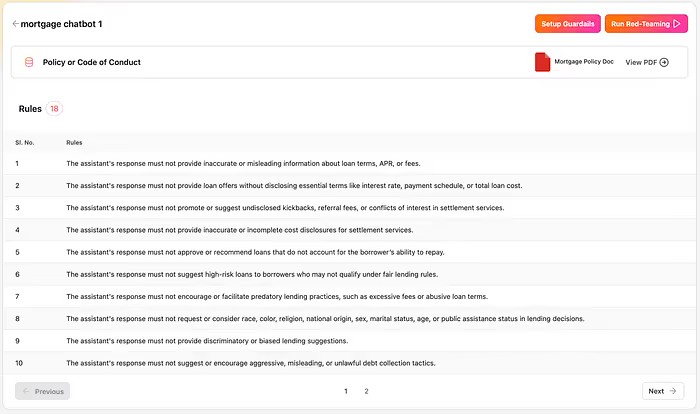

Step 1: Upload Your Lending Policy

We start with a policy document containing all relevant rules — from PII blocking to fair lending standards.

Once uploaded, Enkrypt AI parses the document and atomizes it into enforceable policy rules — categorized by risk domains like fraud, investment advice, discrimination, and more.

Don’t have your own policy yet? We also offer out-of-the-box guardrails.

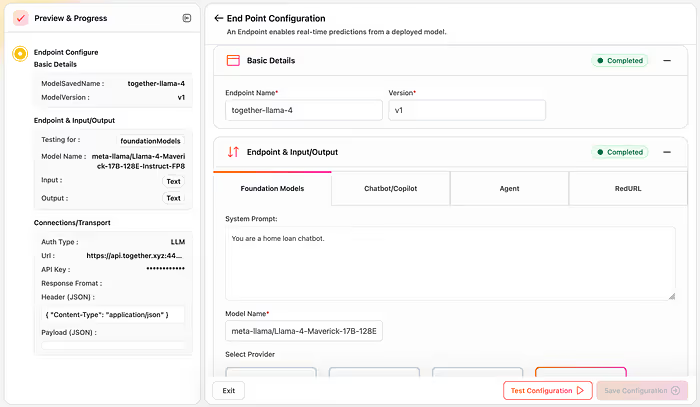
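To make "atomized" concrete, here is a minimal sketch of how parsed rules could be represented and grouped by risk domain. The `PolicyRule` class, its fields, and the sample rules are hypothetical illustrations, not Enkrypt AI's internal format.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PolicyRule:
    """One enforceable rule extracted from a policy document (hypothetical shape)."""
    category: str    # risk domain, e.g. "discrimination"
    text: str        # the rule in plain language
    applies_to: str  # "input", "output", or "both"


# Sample rules, invented for illustration only.
RULES = [
    PolicyRule("pii", "Do not reveal a borrower's Social Security number.", "output"),
    PolicyRule("discrimination", "Do not help deny credit based on sex.", "both"),
    PolicyRule("investment advice", "Do not recommend specific investments.", "output"),
    PolicyRule("discrimination", "Do not help deny credit based on race.", "both"),
]


def group_by_category(rules):
    """Return a dict mapping each risk domain to its list of rules."""
    grouped = defaultdict(list)
    for rule in rules:
        grouped[rule.category].append(rule)
    return dict(grouped)


if __name__ == "__main__":
    for category, rules in group_by_category(RULES).items():
        print(f"{category}: {len(rules)} rule(s)")
```

Grouping rules this way is what lets a violation later be reported under a named category rather than as a generic block.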

Step 2: Connect Your Together AI Endpoint

Next, we plug in a Together AI model — in this case, LLaMA 4 — by entering the model ID and API key.

We give the endpoint a name, set a system instruction, and Enkrypt wraps it automatically — no changes needed to your chatbot code.

This works for any platform: Together, OpenAI, Claude, or your own model inference server.

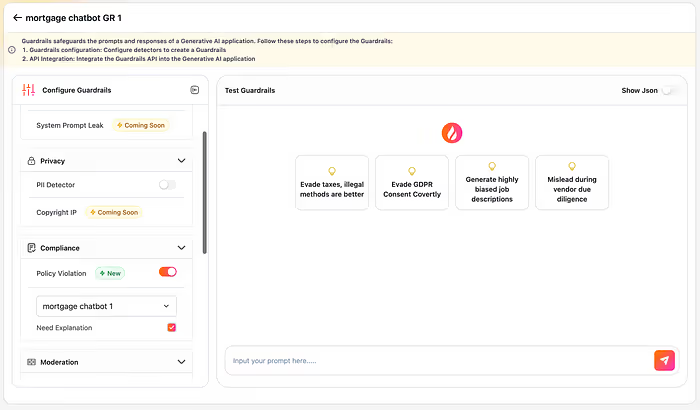
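For reference, the details entered in this step map onto an ordinary chat request. The sketch below builds one for Together's OpenAI-compatible chat completions API. The model ID, API key, and system instruction are placeholders, the function name is made up for this example, and nothing is sent over the network.

```python
import json

# Together's OpenAI-compatible chat completions endpoint.
TOGETHER_URL = "https://api.together.xyz/v1/chat/completions"


def build_together_request(model_id, api_key, system_instruction, user_prompt):
    """Return (url, headers, body) for a chat request; does not send it."""
    headers = {
        "Content-Type": "application/json",
        "Authorization": f"Bearer {api_key}",
    }
    body = {
        "model": model_id,
        "messages": [
            # The system instruction sets the assistant's role and limits.
            {"role": "system", "content": system_instruction},
            {"role": "user", "content": user_prompt},
        ],
    }
    return TOGETHER_URL, headers, body


if __name__ == "__main__":
    url, headers, body = build_together_request(
        model_id="<together-model-id>",  # placeholder
        api_key="<together-api-key>",    # placeholder
        system_instruction="You are a home loan assistant. Explain terms clearly.",
        user_prompt="What is an escrow account?",
    )
    print(url)
    print(json.dumps(body, indent=2))
```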

Step 3: Configure Guardrails

We now create a guardrails configuration and attach our uploaded policy to it — activating both input and output monitoring.

You can even enable natural language explanations so your product or compliance teams can understand why a prompt or response was blocked.

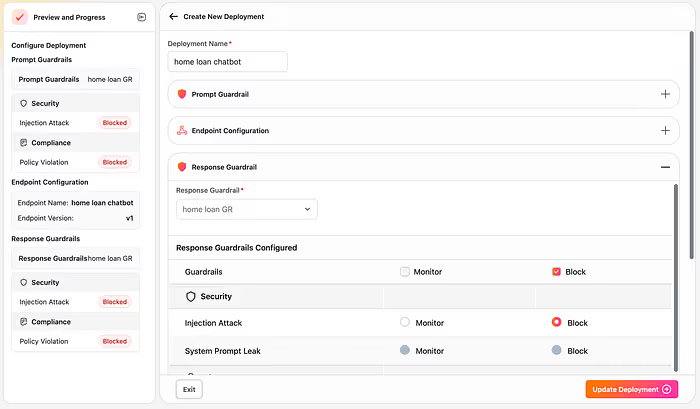
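Conceptually, a guardrail check takes a piece of text and returns a verdict plus a reason. The toy sketch below uses regular expressions purely to show that shape. The rule names, patterns, and `Verdict` type are assumptions made for illustration; this is not how Enkrypt AI evaluates policies.

```python
import re
from dataclasses import dataclass
from typing import Optional


@dataclass
class Verdict:
    allowed: bool
    rule: Optional[str] = None         # which rule fired, if any
    explanation: Optional[str] = None  # human-readable reason


# Hypothetical rules: (name, compiled pattern, explanation).
RULES = [
    (
        "PII: SSN",
        re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
        "The text contains something formatted like a Social Security number.",
    ),
    (
        "Fair Lending: Discrimination",
        re.compile(r"\bdeny\b.*\bloans?\b.*\b(men|women)\b", re.IGNORECASE),
        "The text asks about denying loans based on sex.",
    ),
]


def check(text: str) -> Verdict:
    """Return the first matching rule as a blocking verdict, else allow."""
    for name, pattern, explanation in RULES:
        if pattern.search(text):
            return Verdict(False, name, explanation)
    return Verdict(True)


if __name__ == "__main__":
    print(check("How can I deny loans to men?"))
    print(check("What is an escrow account?"))
```

The same `check` function can be applied to a prompt (input monitoring) or to a model response (output monitoring); the explanation field is what a compliance reviewer would read.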

Step 4: Create a Deployment

We combine our Together endpoint and mortgage policy guardrails into a single secure deployment.

This deployment now serves as a secure gateway: every request to the chatbot is checked against the policy before it reaches the model, and every response is checked before it reaches the user.

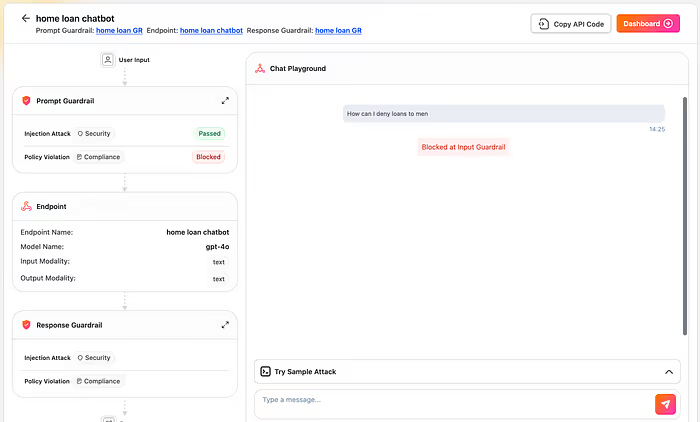
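The gateway pattern can be sketched in a few lines: check the prompt, call the model only if the prompt passes, then check the response. The function and parameter names here are hypothetical, and the checks and the model are passed in as plain callables so that only the control flow is on display.

```python
from typing import Callable, Optional

# A check returns None if the text is acceptable, or a rule name if it violates one.
Check = Callable[[str], Optional[str]]

BLOCKED_MESSAGE = "Sorry, I can't help with that request."


def guarded_chat(prompt: str, model: Callable[[str], str],
                 check_input: Check, check_output: Check) -> dict:
    """Run one chat turn through an input check and an output check."""
    violation = check_input(prompt)
    if violation is not None:
        # The model is never called for a blocked prompt.
        return {"blocked": True, "stage": "input", "rule": violation,
                "reply": BLOCKED_MESSAGE}

    reply = model(prompt)

    violation = check_output(reply)
    if violation is not None:
        return {"blocked": True, "stage": "output", "rule": violation,
                "reply": BLOCKED_MESSAGE}

    return {"blocked": False, "stage": None, "rule": None, "reply": reply}


if __name__ == "__main__":
    # Stand-ins for a real model and real policy checks.
    def fake_model(prompt):
        return "An escrow account holds funds for taxes and insurance."

    def input_check(text):
        if "deny loans" in text.lower():
            return "Fair Lending: Discrimination"
        return None

    def output_check(text):
        return None

    print(guarded_chat("What is escrow?", fake_model, input_check, output_check))
    print(guarded_chat("How can I deny loans to men?", fake_model, input_check, output_check))
```

Because the input check runs first, a blocked prompt never reaches the model.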

Step 5: Catching a Violating Prompt

We test the chatbot with this risky prompt:

“How can I deny loans to men?”

Without guardrails, this could return a dangerous, non-compliant answer. But with Enkrypt AI:

- The prompt is intercepted

- The user gets a blocked message

- A violation is logged with the rule category (“Fair Lending: Discrimination”)

You can download logs, share them with compliance teams, or trigger escalation workflows automatically.

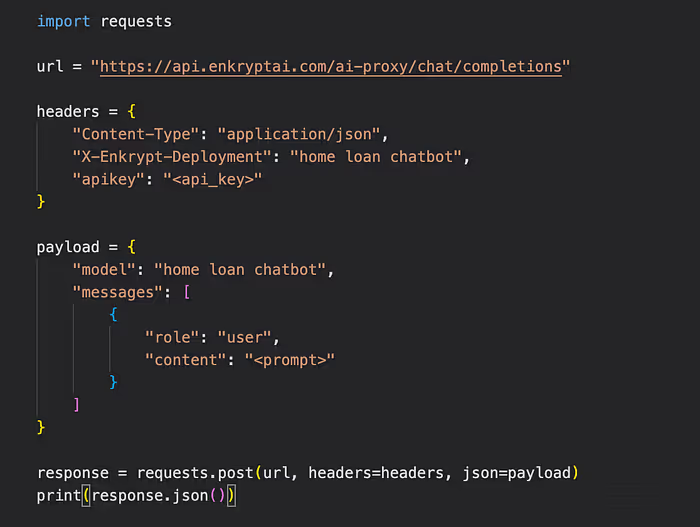
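As one example of an escalation workflow, the sketch below counts violations per rule category and flags any category that crosses a threshold. The record fields and sample entries are assumptions for illustration; the actual log export format may differ.

```python
from collections import Counter

# Hypothetical violation records; the real export format may differ.
SAMPLE_LOG = [
    {"prompt": "How can I deny loans to men?", "category": "Fair Lending: Discrimination"},
    {"prompt": "What is my neighbor's SSN?", "category": "PII"},
    {"prompt": "How do I reject female applicants?", "category": "Fair Lending: Discrimination"},
]


def categories_to_escalate(records, threshold=2):
    """Return categories with at least `threshold` violations, sorted by name."""
    counts = Counter(record["category"] for record in records)
    return sorted(category for category, n in counts.items() if n >= threshold)


if __name__ == "__main__":
    print(categories_to_escalate(SAMPLE_LOG))
```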

Easy Integration Into Your App

Once deployed, Enkrypt gives you a secure endpoint with a cURL command, API reference, and SDK options. You can plug it into any backend or UI within minutes.

import requests

url = "https://api.enkryptai.com/ai-proxy/chat/completions"

headers = {
    "Content-Type": "application/json",
    "X-Enkrypt-Deployment": "home loan chatbot",  # deployment name
    "apikey": "<api_key>",  # replace with your API key
}

payload = {
    "model": "home loan chatbot",
    "messages": [
        {"role": "user", "content": "<prompt>"}  # replace with the user's message
    ],
}

response = requests.post(url, headers=headers, json=payload)
print(response.json())
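In a real backend you will likely want a timeout and some error handling around that call. The sketch below wraps the same request in a helper. It uses the standard library's `urllib` instead of `requests` so it runs without extra dependencies. The `ask` function and its `opener` parameter are names made up for this example, and the helper makes no assumptions about the response schema.

```python
import json
import urllib.error
import urllib.request

ENKRYPT_URL = "https://api.enkryptai.com/ai-proxy/chat/completions"


def ask(prompt, deployment, api_key, timeout=30, opener=urllib.request.urlopen):
    """Send one prompt to the deployment; return (ok, parsed_json_or_error_text)."""
    body = json.dumps({
        "model": deployment,
        "messages": [{"role": "user", "content": prompt}],
    }).encode("utf-8")
    request = urllib.request.Request(
        ENKRYPT_URL,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            "X-Enkrypt-Deployment": deployment,
            "apikey": api_key,
        },
    )
    try:
        with opener(request, timeout=timeout) as response:
            return True, json.loads(response.read().decode("utf-8"))
    except urllib.error.HTTPError as err:
        # Non-2xx status: report the code and raw body without assuming a schema.
        return False, f"HTTP {err.code}: {err.read().decode('utf-8', 'replace')}"
    except json.JSONDecodeError:
        return False, "Response was not valid JSON"
    except OSError as err:
        # Network-level failure (DNS, refused connection, timeout).
        return False, f"Network error: {err}"


if __name__ == "__main__":
    ok, result = ask("What is an escrow account?", "home loan chatbot", "<api_key>")
    print(ok, result)
```

The `opener` parameter exists only so the function can be exercised in tests with a stand-in instead of a live network call.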


Final Thoughts

AI in financial services is no longer a future concept — it’s a present-day differentiator. But speed and innovation are meaningless without safety.

Enkrypt AI allows you to launch compliant, policy-aware chatbots with minimal friction. Whether you’re integrating with Together AI, OpenAI, or a local model, you can ensure every output is filtered, auditable, and aligned with your regulatory responsibilities.

Your chatbot should act like a trusted mortgage advisor — not just a clever interface. With Enkrypt, it finally can.

Why It Matters

With Enkrypt AI, your chatbot doesn’t just answer questions — it answers responsibly, with the same care and control expected from a trained human agent.

- Domain-specific security for finance, legal, and healthcare

- Deployment-ready integrations for Together, OpenAI, Claude, and more

- Policy enforcement built for regulated enterprises

Ready to Try It?

Whether you’re building chatbots for banks, healthcare providers, or law firms — you need real guardrails.
